SYNCHRONIZATION

Dr. Quang Duc Tran

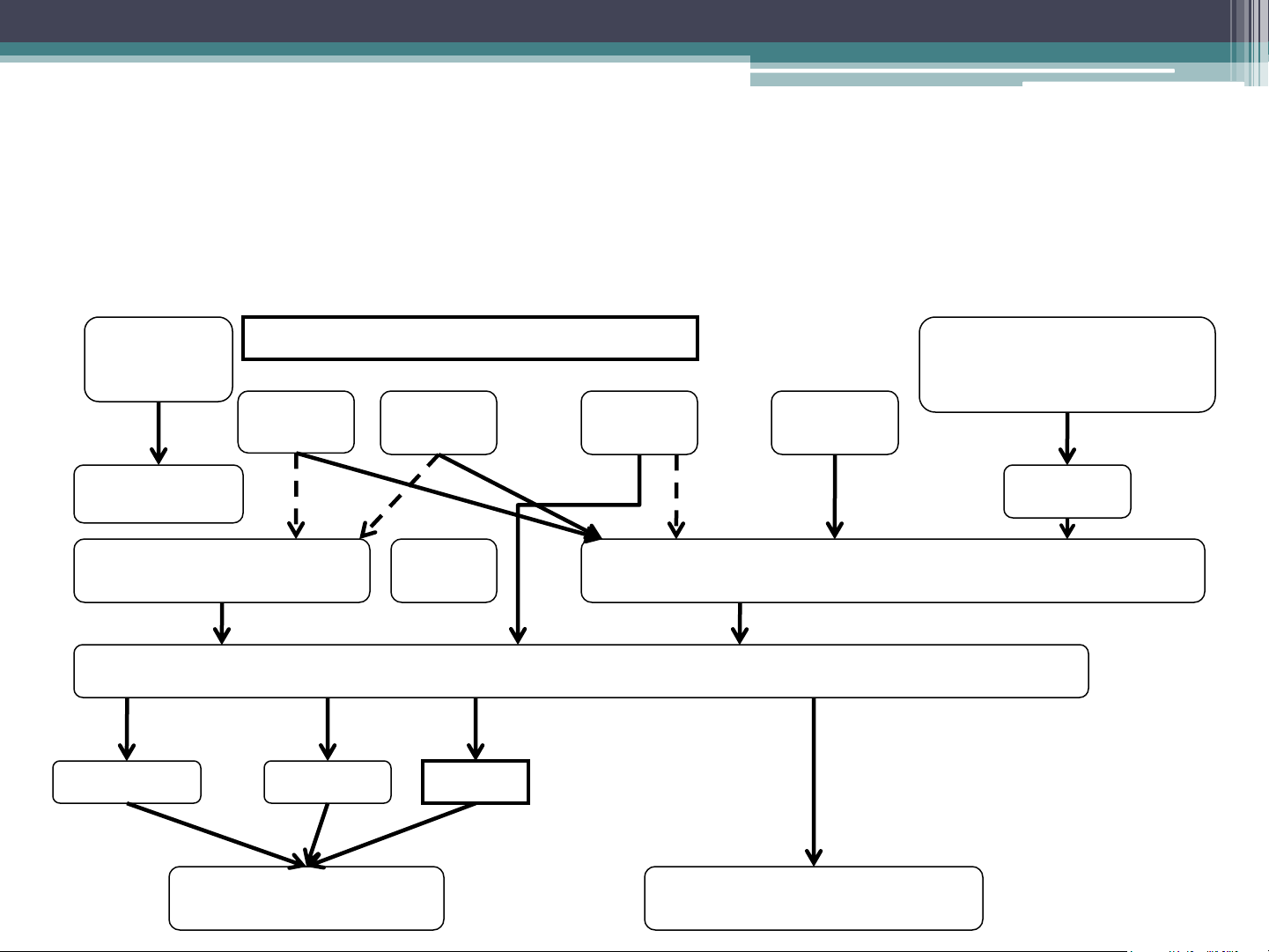

Multimedia Protocol Stack

[Figure: multimedia protocol stack listing, from top to bottom: Synchronization Service; Media encaps (H.264, MPEG-4) and DASH; SIP, RTSP, RSVP, RTCP, RTP, HTTP; TCP, DCCP, UDP; IP Version 4, IP Version 6; AAL3/4, AAL5, MPLS; ATM/Fiber Optics, Ethernet/WIFI]

Synchronization Issues

• Content Relations

▫ It defines a dependency of media objects on some data. An example of a content relation is two graphics that are based on the same data but show different interpretations of the data.

• Spatial Relations

▫ It defines the space used for the presentation of a media object on an output device at a certain point of time in a multimedia presentation.

• Temporal Relations

▫ It defines the temporal dependencies between media objects. They are of interest whenever time-dependent media objects exist.

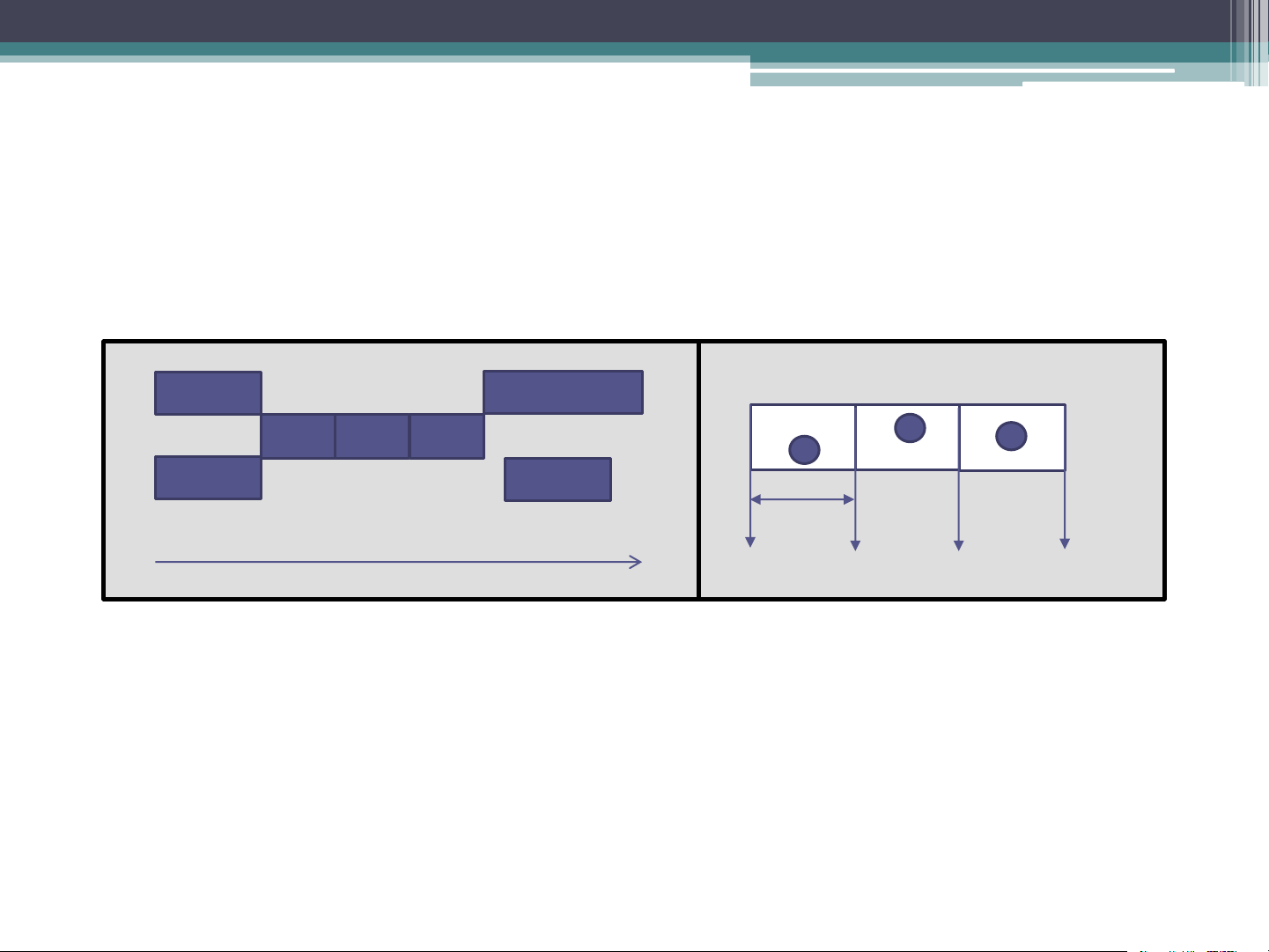

Intra- and Inter-Object Synchronization

• Intra-Object Synchronization

▫ It refers to the time relation between the presentation units of one time-dependent media object. An example is the time relation between the single frames of a video sequence. For a video with a rate of 25 frames per second, each frame must be displayed for 40 ms.

• Inter-Object Synchronization

▫ It refers to the synchronization between media objects. An example is a multimedia presentation that starts with an audio/video sequence, followed by several pictures and an animation that is commented by an audio sequence.
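The 40 ms figure follows directly from the frame rate (1000 ms / 25 frames). The sketch below works this out for any constant frame rate; the function name `frame_schedule` is hypothetical and exists only for this illustration.

```python
from fractions import Fraction


def frame_schedule(frame_rate, frame_count):
    """Return (start_ms, duration_ms) for each frame of a constant-rate video."""
    # Exact arithmetic avoids rounding drift for rates such as 30 fps.
    duration_ms = Fraction(1000, frame_rate)
    return [(index * duration_ms, duration_ms) for index in range(frame_count)]


for index, (start, duration) in enumerate(frame_schedule(25, 4)):
    print(f"frame {index}: show at {float(start):.1f} ms for {float(duration):.1f} ms")
```

Intra-object synchronization is preserved as long as each frame is actually presented at its computed start time.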

[Figure: Inter-object synchronization example that shows temporal relations in a multimedia presentation including audio, video, animation, and picture objects (P1, P2, P3). Intra-object synchronization between frames of a video sequence showing a jumping ball (40 ms per frame).]

Live and Synthetic Synchronization

• Live Synchronization

▫ The goal of the synchronization is to reproduce exactly, at presentation time, the temporal relations as they existed during the capturing process. An example is a video conference for person-to-person discussion, which demands lip synchronization of the audio and video.

▫ Example: Two persons located at different sites of a company discuss a new product. They use a video conference application, share a blackboard, and point with their mouse pointers to details of the different parts while discussing some issues.

• Synthetic Synchronization

▫ The temporal relations are artificially specified and assigned to media objects that were created independently of each other. For example, four audio messages are recorded, each describing a part of an engine shown in an animation. The time relations between the animation and the matching audio sequences are then specified.

▫ Common operations are “presenting media streams in parallel”, “presenting media streams one after the other”, and “presenting media streams independently of each other”.

Local Data Unit (LDU)

• Time-dependent media objects consist of a sequence of information units, known as Local Data Units (LDUs).

• For digital video, the frames are often selected as LDUs. For a video with 30 frames per second, each LDU is a closed LDU with a duration of 1/30 s.

• For audio, the physical units (samples) are very small, so LDUs are often formed by blocking samples into units of a fixed duration, for example 512 samples per LDU.

• The duration of an LDU can be selected by the user, and LDUs may vary in duration.
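As a worked example of these durations, the sketch below computes the LDU length for 30 fps video and for 512-sample audio blocks. The slides do not give an audio sampling rate, so the 44.1 kHz value is an assumption made only for illustration, as are the function names.

```python
from fractions import Fraction

# Assumption: CD-quality sampling rate; the slides do not specify one.
ASSUMED_SAMPLE_RATE_HZ = 44_100


def video_ldu_duration_s(frames_per_second):
    """Duration of one video LDU when each frame is one LDU."""
    return Fraction(1, frames_per_second)


def audio_ldu_duration_s(samples_per_ldu, sample_rate_hz):
    """Duration of one audio LDU built from a fixed number of samples."""
    return Fraction(samples_per_ldu, sample_rate_hz)


video = video_ldu_duration_s(30)
audio = audio_ldu_duration_s(512, ASSUMED_SAMPLE_RATE_HZ)
print(f"video LDU: {float(video) * 1000:.2f} ms")
print(f"audio LDU: {float(audio) * 1000:.2f} ms")
```

Under this assumption, one audio LDU is roughly a third as long as one video LDU, which is why audio and video LDUs generally do not align one to one.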

Presentation Requirements

• Lip Synchronization

▫ It refers to the temporal relationship between an audio and a video stream for the particular case of a human speaking.

▫ The “in sync” region spans a skew of +/-80 ms.

▫ The “out of sync” area covers skews beyond +/-160 ms.

• Pointer Synchronization

▫ The speakers use a pointer to point out individual elements of the graphics that are relevant to the discussion taking place.

▫ The “in sync” region spans a skew from -500 ms to +750 ms.

▫ The “out of sync” area covers skews beyond -1000 ms or +1250 ms.
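The skew limits quoted for lip and pointer synchronization can be turned into a simple classifier. This is a minimal sketch under two assumptions: skews outside the quoted outer limits count as “out of sync”, and the label "transient" for values between the inner and outer limits is this sketch's own naming. The class and constant names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SkewTolerance:
    in_sync_min_ms: float
    in_sync_max_ms: float
    out_of_sync_min_ms: float
    out_of_sync_max_ms: float

    def classify(self, skew_ms):
        """Map a measured skew (in ms) to a perceptual region."""
        if self.in_sync_min_ms <= skew_ms <= self.in_sync_max_ms:
            return "in sync"
        if skew_ms < self.out_of_sync_min_ms or skew_ms > self.out_of_sync_max_ms:
            return "out of sync"
        # Assumption: anything between the two limits is a grey zone.
        return "transient"


LIP_SYNC = SkewTolerance(-80, 80, -160, 160)
POINTER_SYNC = SkewTolerance(-500, 750, -1000, 1250)

for skew in (40, -120, 200):
    print(f"lip skew {skew:+} ms: {LIP_SYNC.classify(skew)}")
```

A player could use such a check to decide when a resynchronization action (for example, dropping or repeating a frame) is worth its cost.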

Reference Model

The model of Gerold Blakowski and Ralf Steinmetz, “A Media Synchronization Survey: Reference Model, Specification, and Case Studies,” IEEE Journal on Selected Areas in Communications, vol. 14, no. 1, Jan. 1996.

[Figure: layers of the reference model, from high to low abstraction: Multimedia Application, Specification Layer, Object Layer, Stream Layer, Media Layer]

• Each layer implements synchronization mechanisms, which are provided through an appropriate interface. Each interface defines services that give the user a means to define their requirements. Each interface can be used directly by an application or by the next higher layer.

Media Layer

• An application operates on a single continuous media stream, which is treated as a sequence of Local Data Units. When using this layer, the application itself is responsible for intra-stream synchronization, which it achieves with flow-control mechanisms.

• Assumption at this level: device independence

• Supported operations at this level:

▫ Read(devicehandle, LDU)

▫ Write(devicehandle, LDU)
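To illustrate what “the application is responsible for intra-stream synchronization” can mean in practice, here is a minimal sketch of a paced write loop. It is not an API from the slides: `play_stream`, its parameters, and the injectable `clock` and `sleep` arguments are hypothetical names chosen so the loop can be exercised without a real device.

```python
import time


def play_stream(ldus, ldu_duration_s, write, clock=time.monotonic, sleep=time.sleep):
    """Write each LDU at its deadline and return the deadline offsets used."""
    start = clock()
    offsets = []
    for index, ldu in enumerate(ldus):
        # Deadlines are computed from the start time, so delays do not accumulate.
        deadline = start + index * ldu_duration_s
        delay = deadline - clock()
        if delay > 0:
            sleep(delay)  # flow control: wait until this LDU is due
        write(ldu)
        offsets.append(deadline - start)
    return offsets


shown = []
play_stream(["frame0", "frame1", "frame2"], 0.04, shown.append)
print(shown)
```

Computing each deadline from the stream start, rather than sleeping a fixed interval after each write, keeps small scheduling delays from adding up over a long stream.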

    window = open("video device");   // output device
    movie = open("video file");      // stored video stream
    while (not EOF(movie)) {
        read(movie, &LDU);           // fetch the next LDU
        if (LDU.time == 20) printf("subtitle 1");
        if (LDU.time == 26) printf("subtitle 2");
        write(window, LDU);          // present the LDU
    }
    close(window);
    close(movie);

Stream Layer

• It operates on continuous media streams as well as on groups of media streams. In a group, all streams are presented in parallel by using mechanisms for inter-stream synchronization.

• Supported operations:

▫ Start(stream), Stop(stream), Create-group(list of streams)

▫ Start(group), Stop(group)

▫ Setcuepoint(stream/group, at, event)

    open digitalvideo alias ex        //Create video descriptor
    load ex video.avs                 //Assign file to video descriptor
    setcuepoint ex at 20 return 1     //Define event 1 for subtitle 1
    setcuepoint ex at 26 return 2     //Define event 2 for subtitle 2
    setcuepoint ex on                 //Activate cuepoint events
    play ex                           //Start playing
    switch readevent() {
        case 1: display("subtitle 1"); break;   //If event 1 show subtitle 1
        case 2: display("subtitle 2"); break;   //If event 2 show subtitle 2
    }

Object Layer

• It operates on all types of media and hides the differences between time-independent and time-dependent media. To the application, it offers a complete, synchronized presentation. This layer takes a synchronization specification as input and is responsible for the correct schedule of the overall presentation.

• It does not itself handle intra-stream and inter-stream synchronization; for these it relies on the services of the stream layer.

Object Level – MHEG standard

• MHEG-5 is part of a set of international standards relating to the presentation of multimedia information. It is a license-free, public standard for interactive TV middleware.

• MHEG-5 is an object-based declarative programming language that can be used to describe a presentation of text, images, and video.

    Composite {
        start-up link
        viewer start-up
        viewer-list
            Viewer1: reference to Component1
            Viewer2: reference to Component2
            Viewer3: reference to Component3
        Component1
            reference to content "movie.avs"
        Component2
            reference to content "Subtitle 1"
        Component3
            reference to content "Subtitle 2"
        Link1
            "when timestone status of Viewer1 becomes 20 then start Viewer2"
        Link2
            "when timestone status of Viewer1 becomes 26 then start Viewer3"
    }
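The two links in the composite above are declarative rules of the form “when the timestone of one viewer reaches a value, start another viewer”. The sketch below imitates that behavior in Python. It is not MHEG syntax or an MHEG engine: the `Link` class and `run` function are hypothetical, and the timestone is modeled as a simple integer counter.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    source: str      # viewer whose timestone is observed
    timestone: int   # value that triggers the link
    target: str      # viewer to start when the link fires


def run(links, source, last_timestone):
    """Advance the source viewer's timestone and collect the triggered actions."""
    events = []
    for timestone in range(last_timestone + 1):
        for link in links:
            if link.source == source and link.timestone == timestone:
                events.append((timestone, f"start {link.target}"))
    return events


links = [Link("Viewer1", 20, "Viewer2"), Link("Viewer1", 26, "Viewer3")]
for timestone, action in run(links, "Viewer1", 30):
    print(timestone, action)
```

The application only declares the links; deciding when each one fires is left to the layer that evaluates them, which is the division of labor the object layer is meant to provide.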

Synchronization Specification

• A synchronization specification describes all temporal dependencies of the objects included in a multimedia object. It should comprise the intra-object and inter-object synchronization specifications for the media objects of the presentation, together with the QoS descriptions for intra- and inter-object synchronization.

• In the case of live synchronization, the temporal relations are implicitly defined during capturing. QoS requirements are defined before starting the capture.

• In the case of synthetic synchronization, the specification must be created explicitly.
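One possible shape for an explicit (synthetic) specification is a tree built from “in parallel” and “one after the other” operators, from which a presentation schedule can be derived. The sketch below is an assumption-laden illustration rather than a method from the slides: the class names `Media`, `Seq`, `Par`, the `max_skew_ms` QoS field, and all durations are hypothetical, and the QoS value is only recorded, not enforced.

```python
from dataclasses import dataclass
from typing import List, Optional, Union


@dataclass
class Media:
    name: str
    duration_s: float


@dataclass
class Seq:
    parts: List["Spec"]  # presented one after the other


@dataclass
class Par:
    parts: List["Spec"]  # presented in parallel
    max_skew_ms: Optional[float] = None  # QoS bound, recorded only


Spec = Union[Media, Seq, Par]


def schedule(spec, start_s=0.0, out=None):
    """Return (end_s, out), where out maps media names to (start_s, end_s)."""
    if out is None:
        out = {}
    if isinstance(spec, Media):
        end = start_s + spec.duration_s
        out[spec.name] = (start_s, end)
        return end, out
    if isinstance(spec, Seq):
        current = start_s
        for part in spec.parts:
            current, _ = schedule(part, current, out)
        return current, out
    # Par: every part starts together; the group ends with its longest part.
    end = start_s
    for part in spec.parts:
        part_end, _ = schedule(part, start_s, out)
        end = max(end, part_end)
    return end, out


presentation = Seq([
    Par([Media("video", 10.0), Media("audio1", 10.0)], max_skew_ms=80.0),
    Media("P1", 5.0), Media("P2", 5.0), Media("P3", 5.0),
    Par([Media("animation", 8.0), Media("audio2", 8.0)]),
])
total, timeline = schedule(presentation)
for name, (begin, end) in timeline.items():
    print(f"{name}: {begin:.0f} s to {end:.0f} s")
```

The example mirrors the earlier inter-object scenario: an audio/video sequence, then several pictures, then an animation commented by audio.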