
Python Project

POSTS AND TELECOMMUNICATIONS INSTITUTE OF TECHNOLOGY (HỌC VIỆN CÔNG NGHỆ BƯU CHÍNH VIỄN THÔNG)

Table of Contents

I. Introduction

A. Overview of HandTracking, MediaPipe, OpenCV

B. Importance of hand tracking in computer drivers

C. Scope of study

D. Objective of the report

II. Getting started with HandTracking

A. Setting up the Hardware

B. Installing Necessary Software

C. Understand HandTracking Technologies

III. HandTracking Application in Computer Drivers

A. Gesture-based Control

IV. Implementing HandTracking

A. Choosing the Right HandTracking Hardware

B. Selecting the Appropriate Software Development Kit (SDK)

C. Configuring and Calibrating Hand Tracking Systems

V. Testing and Debugging

A. Testing Hand Tracking Performance

B. Debugging Common Issues

C. Performance Optimization

VI. User Experience Design

A. Design Principles for Hand Tracking

B. User-Centered Design for Computer Drivers

C. Feedback Mechanisms

VII. Case Study

- Features

VIII. Future Trends and Challenges

A. Emerging Technologies in Hand Tracking

B. Security and Privacy Concerns

C. Scalability and Integration Challenges

IX. Conclusion

A. Key Takeaways

B. The Future of Hand Tracking in Computer Drivers

I. Introduction

A. Overview of HandTracking, MediaPipe, OpenCV

1. What is HandTracking?

- Hand tracking, or hand gesture recognition, is a set of vision-based techniques that let a computer interpret hand gestures for human-computer interaction. Hand tracking lets you use natural hand movements to control, move, hold, and touch objects without bulky controllers.

2. MediaPipe

- MediaPipe is a framework for building machine learning pipelines that process time-series data such as video and audio. This cross-platform framework works on desktop/server, Android, iOS, and embedded devices such as Raspberry Pi and Jetson Nano.

3. OpenCV

- OpenCV is a large open-source library for computer vision, machine learning, and image processing, and it now plays a major role in the real-time operation that today's systems require. With it, one can process images and videos to identify objects, faces, or even human handwriting. When OpenCV is combined with libraries such as NumPy, Python can process the OpenCV array structure for analysis. To identify an image pattern and its various features, we represent the features in a vector space and perform mathematical operations on them.
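As a rough illustration of images as arrays and features as vectors, the sketch below computes a very simple feature vector (the mean value of each color channel) and compares images by Euclidean distance. OpenCV's Python bindings return images as NumPy arrays of shape (height, width, 3) in BGR order; plain nested lists are used here only so the example runs without extra packages, and the choice of feature is ours, not the report's.

```python
import math

# Two tiny 2x2 images stored as rows of (B, G, R) pixels, following OpenCV's
# default BGR channel order. With OpenCV these would be NumPy arrays of shape
# (height, width, 3); plain lists keep the sketch dependency-free.
reddish = [[(0, 0, 255), (10, 10, 240)], [(0, 20, 250), (5, 5, 255)]]
bluish = [[(255, 0, 0), (240, 10, 10)], [(250, 20, 0), (255, 5, 5)]]


def mean_color(img):
    """Feature vector: the average value of each color channel."""
    pixels = [px for row in img for px in row]
    return tuple(sum(px[c] for px in pixels) / len(pixels) for c in range(3))


def euclidean(a, b):
    """Distance between two feature vectors; smaller means more similar."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Compare both images against a reference "pure red" feature vector.
reference_red = (0.0, 0.0, 255.0)
for name, img in (("reddish", reddish), ("bluish", bluish)):
    print(name, mean_color(img), round(euclidean(mean_color(img), reference_red), 1))
```

Real systems use far richer features (edges, keypoints, learned embeddings), but the principle of comparing vectors with a distance measure is the same.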

B. Importance of hand tracking in computer drivers

- In recent years, rapid advances in computer technology and human-machine interfaces have changed the way we interact with computers. In this context, HandTracking, a technology that lets computers track and understand users' hand gestures and actions, has become increasingly important. It helps computers understand a user's body language and opens up significant opportunities to improve computer interfaces and human-machine interaction.

- HandTracking is not only an interesting technology but also has wide applications, especially in computer control. With advances in artificial intelligence (AI) and computer vision, HandTracking can be used to build computer drivers based on hand gestures and actions, helping users interact with computers more naturally and effectively. This could reduce reliance on traditional peripherals such as mice and keyboards while improving usability and performance in everyday computing tasks.

C. Scope of study

- This study focuses on HandTracking technology, specifically the MediaPipe and OpenCV libraries, to simulate computer operations based on user gestures. The research centers on building a practical application that illustrates the potential of HandTracking to improve user engagement.

- The project covers the development of a simple simulation application that recognizes hand gestures and then performs the corresponding actions on the computer. It processes and analyzes data from webcams or other vision devices to identify the user's hand gestures, and then performs actions such as moving the cursor, pressing buttons, or other on-screen operations.

D. Objective of the report

- This report presents the research, development, and implementation of a simulation application that uses HandTracking to simulate computer operations. It introduces the technologies, tools, and methods used in the project, along with the results achieved.

- Specifically, the report aims to:

a. Present an overview of HandTracking and related technologies.

b. Describe the process of developing and deploying the simulation application.

c. Demonstrate how this application can improve human-computer interaction in a real-world environment.

d. Analyze the methods and limitations of using HandTracking for this goal.

e. Propose future development directions and expanded applications for the project.

This report gives an overview of the potential of HandTracking in the field of human-machine interaction and is a first step in exploring exciting applications of this technology.

II. Getting started with HandTracking

A. Setting up the Hardware

- Hardware requirements:

A computer with at least 8 GB of RAM, a Core i5 processor, and a graphics card capable of image processing. The device must have a webcam.

One hand sensor (optional) to help keep the hand steady during operation.

B. Installing Necessary Software

- Visual Studio Code (or PyCharm)

- OpenCV, MediaPipe, NumPy, comtypes, pycaw, …

- Python interpreter
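Before running the project, it can help to confirm that these packages are importable. The sketch below uses only the standard library and does not actually import anything. The mapping from import names to pip package names is our assumption about the usual distribution names; note that comtypes and pycaw are Windows-specific.

```python
import importlib.util

# Import name -> pip package name (assumed usual names; OpenCV, for example,
# is installed as "opencv-python" but imported as "cv2").
REQUIRED_MODULES = {
    "cv2": "opencv-python",
    "mediapipe": "mediapipe",
    "numpy": "numpy",
    "comtypes": "comtypes",
    "pycaw": "pycaw",
}


def check_modules(modules):
    """Return {import_name: True/False} depending on whether it can be found."""
    return {name: importlib.util.find_spec(name) is not None for name in modules}


if __name__ == "__main__":
    for name, ok in check_modules(REQUIRED_MODULES).items():
        hint = "" if ok else f"  (try: pip install {REQUIRED_MODULES[name]})"
        print(f"{name:10s} {'OK' if ok else 'MISSING'}{hint}")
```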

C. Understand HandTracking Technologies

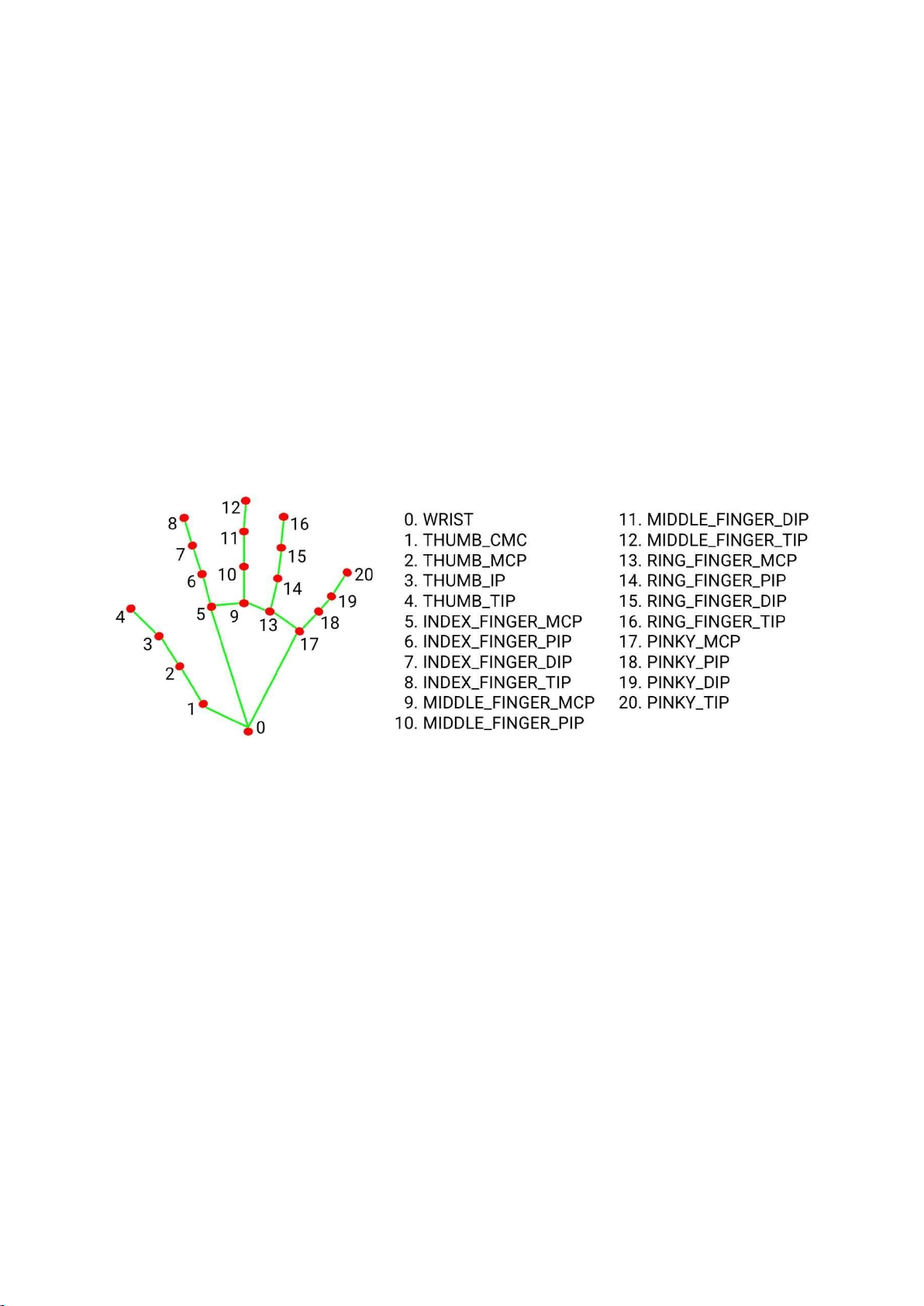

- (MediaPipe overview) MediaPipe Hands is a high-fidelity hand and finger tracking solution. It uses machine learning (ML) to infer 21 3D landmarks of a hand from just a single frame. Whereas current state-of-the-art approaches rely primarily on powerful desktop environments for inference, MediaPipe's method achieves real-time performance on a mobile phone and even scales to multiple hands.

- (MediaPipe ML pipeline) MediaPipe Hands uses an ML pipeline consisting of multiple models working together: a palm detection model that operates on the full image and returns an oriented hand bounding box, and a hand landmark model that operates on the cropped image region defined by the palm detector and returns high-fidelity 3D hand keypoints.

- Providing an accurately cropped hand image to the hand landmark model drastically reduces the need for data augmentation (e.g. rotations, translation and scale) and instead allows the network to dedicate most of its capacity to coordinate prediction accuracy. In addition, the pipeline can generate the crops from the hand landmarks identified in the previous frame; palm detection is invoked to relocalize the hand only when the landmark model can no longer identify hand presence.

- The pipeline is implemented as a MediaPipe graph that uses a hand landmark tracking subgraph from the hand landmark module and renders using a dedicated hand renderer subgraph. The hand landmark tracking subgraph internally uses a hand landmark subgraph from the same module and a palm detection subgraph from the palm detection module.

- (Palm Detection Model) To detect initial hand locations, the MediaPipe team designed a single-shot detector model optimized for mobile real-time use, in a manner similar to the face detection model in MediaPipe Face Mesh. Detecting hands is a decidedly complex task: both the lite and the full model have to work across a variety of hand sizes with a large scale span (~20x) relative to the image frame, and be able to detect occluded and self-occluded hands. Whereas faces have high-contrast patterns, e.g. in the eye and mouth regions, hands lack such features, which makes them comparatively difficult to detect reliably from their visual features alone. Providing additional context, such as arm, body, or person features, aids accurate hand localization.

- The method addresses these challenges with different strategies. First, it trains a palm detector instead of a hand detector, since estimating bounding boxes of rigid objects like palms and fists is significantly simpler than detecting hands with articulated fingers. In addition, as palms are smaller objects, the non-maximum suppression algorithm works well even for two-hand self-occlusion cases, like handshakes. Moreover, palms can be modelled using square bounding boxes (anchors in ML terminology), ignoring other aspect ratios, which reduces the number of anchors by a factor of 3-5. Second, an encoder-decoder feature extractor is used for bigger scene context awareness, even for small objects (similar to the RetinaNet approach). Lastly, the focal loss is minimized during training to support the large number of anchors resulting from the high scale variance.

- (Hand Landmark Model) After palm detection over the whole image, the hand landmark model performs precise keypoint localization of 21 3D hand-knuckle coordinates inside the detected hand regions via regression, that is, direct coordinate prediction. The model learns a consistent internal hand pose representation and is robust even to partially visible hands and self-occlusions. To obtain ground truth data, the MediaPipe team manually annotated ~30K real-world images with 21 3D coordinates, as shown below (the Z-value is taken from the image depth map, if one exists for the corresponding coordinate). To better cover the possible hand poses and provide additional supervision on the nature of hand geometry, they also rendered a high-quality synthetic hand model over various backgrounds and mapped it to the corresponding 3D coordinates.

Fig 1. 21 hand landmarks.

Fig 2. Top: aligned hand crops passed to the tracking network with ground truth annotation. Bottom: rendered synthetic hand images with ground truth annotation.
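The numbering in Fig 1 matches the `HandLandmark` enumeration in MediaPipe's Python solution API, and MediaPipe reports x and y normalized to [0, 1] by image width and height. To keep the sketch runnable without MediaPipe, the enumeration is re-declared locally; the helper `to_pixels` is our own illustration, not a MediaPipe function.

```python
from enum import IntEnum


class HandLandmark(IntEnum):
    """The 21 hand landmarks, numbered as in Fig 1 (wrist = 0)."""
    WRIST = 0
    THUMB_CMC = 1
    THUMB_MCP = 2
    THUMB_IP = 3
    THUMB_TIP = 4
    INDEX_FINGER_MCP = 5
    INDEX_FINGER_PIP = 6
    INDEX_FINGER_DIP = 7
    INDEX_FINGER_TIP = 8
    MIDDLE_FINGER_MCP = 9
    MIDDLE_FINGER_PIP = 10
    MIDDLE_FINGER_DIP = 11
    MIDDLE_FINGER_TIP = 12
    RING_FINGER_MCP = 13
    RING_FINGER_PIP = 14
    RING_FINGER_DIP = 15
    RING_FINGER_TIP = 16
    PINKY_MCP = 17
    PINKY_PIP = 18
    PINKY_DIP = 19
    PINKY_TIP = 20


def to_pixels(landmarks, width, height):
    """Convert normalized (x, y, z) landmarks to pixel (x, y) positions.

    x and y are fractions of the image width and height; they may fall
    outside [0, 1] when part of the hand leaves the frame, so they are
    clamped to valid pixel indices. z (relative depth) is dropped.
    """
    points = []
    for x, y, _z in landmarks:
        px = min(max(int(x * width), 0), width - 1)
        py = min(max(int(y * height), 0), height - 1)
        points.append((px, py))
    return points


# Usage: index fingertip position from 21 placeholder landmarks, 640x480 frame.
fake = [(0.5, 0.5, 0.0)] * 21
print(to_pixels(fake, 640, 480)[HandLandmark.INDEX_FINGER_TIP])
```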

III. HandTracking Application in Computer Drivers

A. Gesture-based Control

1. Introduction to Gesture-based Control

- Gesture-based control is an important part of applying hand tracking to computer drivers. It allows users to interact with a computer through gestures instead of a keyboard, mouse, or other input devices. This can make computer control more convenient and interactive, especially when the user is unwilling or unable to use traditional equipment.

2. Classification of Hand Gestures

- Our application supports several types of hand gestures to control the computer. Gestures include:

+ Swipe: users can swipe a finger in front of the camera to scroll a web page or app.

+ Click: users can click on the screen to select an item or perform specific actions, such as opening an app.

3. Implement Hand Gestures

- We implemented hand tracking to recognize and interpret the user's hand gestures. When users perform gestures such as swiping, clicking, or dragging, the hand tracking module recognizes them and converts them into the corresponding computer actions. For example, when the user makes a thumb gesture, the view moves to the left side of the website or application. When the user clicks on a specific location on the screen, the computer performs an action related to that location.

4. Performance and Results

- We tested the performance of the gesture-based controls in our app and achieved promising results. Hand gesture recognition was accurate, and the response speed made interacting with the computer smooth and natural. Users could easily perform tasks and control the computer with hand gestures alone.
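To make the swipe gesture from step 2 concrete, the sketch below shows one possible way to recognize a horizontal swipe from the index fingertip's normalized x coordinate over the last few frames. It is an illustrative assumption, not the application's actual implementation; the class name, window size, and distance threshold are hypothetical values that would need tuning.

```python
from collections import deque
from typing import Optional


class SwipeDetector:
    """Detect horizontal swipes from successive fingertip x positions.

    window: number of recent frames compared (hypothetical default).
    min_distance: travel needed, as a fraction of frame width (hypothetical).
    """

    def __init__(self, window: int = 8, min_distance: float = 0.25):
        self.xs = deque(maxlen=window)
        self.min_distance = min_distance

    def update(self, x: float) -> Optional[str]:
        """Feed the normalized fingertip x for one frame; return a swipe or None."""
        self.xs.append(x)
        if len(self.xs) < self.xs.maxlen:
            return None  # not enough history yet
        dx = self.xs[-1] - self.xs[0]
        if abs(dx) >= self.min_distance:
            self.xs.clear()  # start fresh so one motion fires only once
            return "swipe_right" if dx > 0 else "swipe_left"
        return None


detector = SwipeDetector(window=4, min_distance=0.3)
for x in (0.1, 0.2, 0.35, 0.5):
    event = detector.update(x)
print(event)
```

A returned label could then be mapped to a scroll action; with the camera image mirrored, "right" in the image matches the user's right.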

5. Challenges and Solutions

- While implementing the gesture-based controls, we encountered several challenges, especially in distinguishing intentional hand gestures from accidental movements. To solve this problem, we used gesture analysis and context-identification algorithms so that actions are triggered only when intended.
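The report does not spell out its context-identification algorithm. One common way to reject accidental gestures, shown here as a sketch under that assumption, is to require a gesture to persist for several consecutive frames and then impose a cooldown before it can fire again. The class name and the frame counts are hypothetical.

```python
from typing import Optional


class GestureDebouncer:
    """Fire a gesture only after it is held for several consecutive frames.

    hold_frames and cooldown_frames are illustrative values, not tuned ones.
    """

    def __init__(self, hold_frames: int = 5, cooldown_frames: int = 10):
        self.hold_frames = hold_frames
        self.cooldown_frames = cooldown_frames
        self._current: Optional[str] = None
        self._count = 0
        self._cooldown = 0

    def update(self, gesture: Optional[str]) -> Optional[str]:
        """Feed the raw per-frame gesture label; return it once confirmed."""
        if self._cooldown > 0:
            self._cooldown -= 1
        if gesture != self._current:
            # A different gesture (or no gesture) resets the streak.
            self._current = gesture
            self._count = 0
        if gesture is None:
            return None
        self._count += 1
        # Fire exactly once per streak; a streak that reaches hold_frames
        # while still cooling down is ignored.
        if self._count == self.hold_frames and self._cooldown == 0:
            self._cooldown = self.cooldown_frames
            return gesture
        return None


debouncer = GestureDebouncer(hold_frames=3, cooldown_frames=5)
raw = ["click", "click", "click", "click", None, "click", "click", "click"]
print([debouncer.update(g) for g in raw])
```

A single-frame flicker of "click" never reaches `hold_frames`, so it is discarded.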

6. Development Direction and Improvement

- We recommend continued research and development of gesture-based controls to improve performance and interaction. Improving gesture recognition and adding new, more flexible gestures could be a direction for future development.

7. Conclusion

- Section "A. Gesture-based Control" described how hand tracking was implemented to control the computer through hand gestures. It explained how hand gestures are classified, implemented, and behave in our application, and highlighted the performance, challenges, and development directions of gesture-based controls.

IV. Implementing HandTracking

A. Choosing the Right HandTracking Hardware

1. Choosing the right hardware for a hand tracking system is an important part of the development process. We reviewed a range of hand tracking devices and technologies on the market. This section discusses the hardware selection process, including:

- Considering commercially available equipment and custom development options.

- Evaluating important factors such as accuracy, response speed, and integration with our application.

- Ensuring that the selected hardware meets the system requirements and has a suitable power supply for our development.

B. Selecting the Appropriate Software Development Kit (SDK)

1. Choosing a suitable SDK is important for developing hand tracking and integrating it into our application. This section describes how we chose an SDK, including:

- Considering hand tracking SDKs provided by hardware manufacturers or third parties.

- Evaluating the SDK's support for the specific features we are developing, such as hand gesture recognition and interaction.

- Testing the SDK to ensure smooth integration and optimized performance.

C. Configuring and Calibrating Hand Tracking Systems

1. Configuring and calibrating a hand tracking system is essential for accurate and reliable operation. This section discusses system configuration and calibration, including:

- Initial configuration steps to connect the hand tracking hardware to our computer or device.

- A calibration procedure to ensure that the system recognizes hand gestures and positions accurately.

- Tests and adjustments to ensure that hand tracking works well in real-world usage environments.
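The report does not describe its calibration procedure in detail. As one possible approach (an assumption, not the project's documented method), the system could record fingertip positions while the user sweeps the hand over a comfortable area and derive the active gesture region from them. The function `calibrate_region` and its margin are hypothetical.

```python
def calibrate_region(samples, frame_w, frame_h, margin=10):
    """Return (x_min, y_min, x_max, y_max) of the active region in pixels.

    samples: fingertip pixel positions recorded while the user sweeps the hand
    over a comfortable area. The box is shrunk by `margin` so its edges can be
    reached without stretching to the recorded limit, then clipped to the frame.
    """
    pts = list(samples)
    if len(pts) < 2:
        raise ValueError("need at least two calibration samples")
    xs = [x for x, _ in pts]
    ys = [y for _, y in pts]
    x_min = max(0, min(xs) + margin)
    y_min = max(0, min(ys) + margin)
    x_max = min(frame_w - 1, max(xs) - margin)
    y_max = min(frame_h - 1, max(ys) - margin)
    if x_min >= x_max or y_min >= y_max:
        raise ValueError("calibration area too small; sweep the hand wider")
    return x_min, y_min, x_max, y_max


# Usage: four corner samples collected in a 640x480 camera frame.
corners = [(100, 80), (540, 90), (530, 400), (110, 390)]
print(calibrate_region(corners, 640, 480))
```

The resulting box could serve as the reduced gesture region that is later mapped onto the full screen.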

V. Testing and Debugging

A. Testing Hand Tracking Performance

1. Testing hand tracking performance is an important step in the implementation process. We performed several types of tests to ensure that hand tracking works as expected:

- Accuracy testing: we evaluate how accurately hand tracking recognizes hand gestures and positions.

- Response speed testing: we ensure that hand tracking responds quickly and smoothly so that users have a good experience.

- Performance testing: we evaluate hand tracking under a variety of scenarios, including fast interactions and multitasking.

B. Debugging Common Issues

During deployment, we encountered some common issues that required debugging. This section covers:

- Error classification and handling: we list the common errors we encountered and how we resolved them, including issues of accuracy, speed, and performance.

- Use of debugging tools: we use debugging and monitoring tools to track performance and find problems.

- Real-time error handling: we handle errors in real time so that hand tracking keeps working even when problems occur.
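For the response-speed tests and performance monitoring described above, a small rolling timer is often enough. This is a sketch of our own (the `FrameTimer` class is hypothetical) using only the standard library; it measures processing time per frame, not the camera's capture delay.

```python
import time
from collections import deque
from typing import Optional


class FrameTimer:
    """Rolling processing rate and per-frame latency over the last N frames."""

    def __init__(self, window: int = 30):
        self._durations = deque(maxlen=window)  # seconds per processed frame
        self._start: Optional[float] = None

    def start(self) -> None:
        """Mark the beginning of one frame's processing."""
        self._start = time.perf_counter()

    def stop(self) -> float:
        """Mark the end of the frame; return its processing time in ms."""
        if self._start is None:
            raise RuntimeError("stop() called before start()")
        elapsed = time.perf_counter() - self._start
        self._start = None
        self._durations.append(elapsed)
        return elapsed * 1000.0

    def fps(self) -> float:
        """Average frames processed per second; 0.0 before any frame is timed."""
        total = sum(self._durations)
        return len(self._durations) / total if total > 0 else 0.0


timer = FrameTimer(window=5)
timer.start()
time.sleep(0.01)  # stands in for per-frame hand tracking work
print(round(timer.stop(), 1), "ms,", round(timer.fps(), 1), "fps")
```

In a capture loop, `start()` would go just before the per-frame processing (for example MediaPipe's `Hands.process`) and `stop()` after the resulting mouse action.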

C. Performance Optimization

1. Performance optimization is an important part of hand tracking implementation. We studied how to improve performance so that our applications run smoothly and efficiently. This section covers:

- Improving the algorithm: reducing resource requirements and increasing speed.

- Resource optimization: using computing resources efficiently so that hand tracking does not strain the system.

- Real-time testing: monitoring performance in real time and making adjustments to optimize it.

VI. User Experience Design

A. Design Principles for Hand Tracking

1. Precision and Accuracy

When designing interaction based on hand gestures, the accuracy and reliability of hand tracking are key factors. We consider how hand gestures are displayed and interpreted so that users can perform gestures correctly. This includes using pixel coordinates or 3D landmark positions to define precise gestures.

2. Natural Gestures

We designed the interface so that users' hand gestures feel natural and enjoyable. This includes basing gestures on users' everyday movements, such as swiping, squeezing, or pulling, which makes the interaction accessible and user-friendly.

3. Intuitive Feedback

Our design principles include providing visual and audio feedback so that users clearly know when they have performed a gesture successfully. This makes the interaction smoother and easier to understand. We also looked at how to present information with images and graphs to help users monitor their status.

B. User-Centered Design for Computer Drivers

1. User Research and Profiling

In designing the computer driver interface, we started by gathering information about the intended users and building a profile of them. We conducted interviews and surveys to understand their needs and expectations for controlling computers with hand gestures.

2. User-Centric Interaction Options

We design interaction options around the user so that they can customize how they interact. This covers switching between interaction modes, customizing settings, and integrating with other applications and software.

3. Enhanced User Experience

We focus on creating a good user experience. We optimize performance and interactivity while providing a user-friendly, easy-to-use interface. We also create educational and support documentation to help users get the most out of hand tracking.

C. Feedback Mechanisms

1. Visual Feedback

Visual feedback is an important part of hand tracking design. We use elements such as charts, images, and icons to present information to users, which helps them understand the state of their interaction.

2. Audio Feedback

Sound is also an important part of feedback. We play sounds when users perform a gesture successfully, helping them easily identify the interaction status.

3. Haptic Feedback

- We consider the use of haptic feedback, specifically vibration and tactile sensations, to provide feedback on the user's hand gestures. This can help create a more enjoyable interactive experience.

- This section sets out a framework for user experience design, covering design principles, user-centered design, and feedback mechanisms for implementing hand tracking.

VII. Case Study

This project uses HandTracking to build a mouse simulation system that performs the functions of a mouse according to your hand movements and gestures. Simply put, a camera captures video of you, and depending on your hand gestures you can move the cursor and perform left click, right click, drag, select, and scroll up and down. The predefined gestures use only three fingers, marked by different colors.

A. Introduction:

- The project "Mouse control using Hand Gestures" was developed to improve human-computer interaction. It aims to give users a better understanding of the system and let them use alternative ways of interacting with the computer for a task.

- The task is to control the mouse, even from a distance, using only hand gestures. The project uses a Python program and libraries such as Autopy, HandTrackingModule, NumPy, and the image processing library OpenCV to read a video feed, identify the user's fingers (represented by three different colors), and track their movements. It then retrieves the necessary data and applies it to the computer's mouse interface according to predefined rules.

- The project can be useful for various professional and non-professional presentations. It can also be used at home for recreational purposes, such as watching movies or playing games.

B. Motivation:

- Aim: The project's primary aim is to broaden human-computer interaction by developing an effective alternative way of controlling the mouse pointer and its functions, such as clicking and moving. It lets users interact with the computer efficiently from a considerable distance without touching the mouse. It also reduces the hardware required for interaction by eliminating the need for a mouse: all the user needs is a webcam (built into most laptops today) that can record real-time video.

- Objective: The main objectives of the project are as follows:

a. Obtain the input video feed.

b. Retrieve useful data from the image to be used as input.

c. Filter the image and identify different colors.

d. Track the movement of the colors in the video frame.

e. Apply the result to the computer's mouse interface according to predefined rules for mouse pointer control.

- Features:

a. Input image processing: processing includes image rotation, resizing, normalization, and color space conversion.

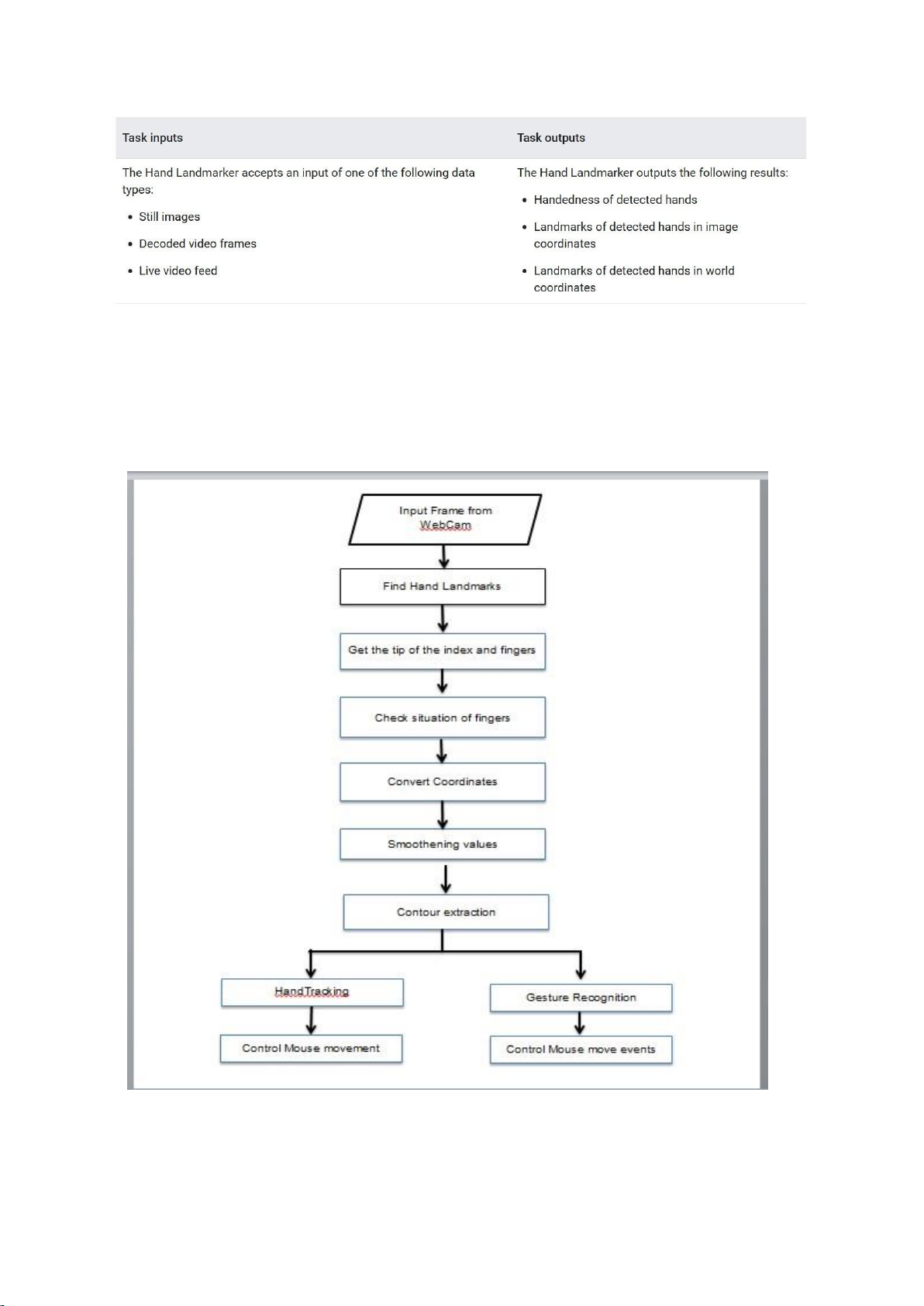

b. Score threshold: filter results based on prediction scores.

2. Methodology:

- Framework Architecture:

a. The algorithm for the entire system is shown in the figure below:

To make the HandTracking program work effectively, we first capture hand images from the camera and then apply the hand landmark model to locate the points on the hand. We use the index fingertip (landmark 8) to drive mouse movement, and both the index and middle fingers to trigger the click operation. For mouse movement, we identify the coordinates of the index fingertip in the image and pass them to the mouse to perform the corresponding movement. The click operation is slightly different: we calculate the distance between landmarks 8 and 12 (the index and middle fingertips). When the distance between these two points is less than a certain threshold, the mouse performs a click. However, while implementing the program we encountered the following problems:

- The first is image inversion: the camera image displayed on the screen is mirrored compared with the real scene, so hand movements performed in real life appear reversed on the computer. To fix this, we flip each frame horizontally with img = cv2.flip(img, 1), where flip code 1 mirrors the image around its vertical axis.

- The second is that the hand is not recognized when it moves too low relative to the camera. Our solution is to define a gesture region smaller than the camera frame and map the hand coordinates inside it to the mouse coordinates on the screen.

- The third is that the tracking is too sensitive. The camera captures frames at a high rate, so when the hand shakes slightly, the cursor jitters and becomes inaccurate. To solve this, we reduce the rate at which camera frames are used, or compare the current point with a fixed reference point and ignore small changes.
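Fixes two and three can be sketched as follows, under stated assumptions: the margin, dead-zone, and smoothing values are hypothetical; `interp` reproduces what `numpy.interp` does for a single value so that the block needs only the standard library; and the gradual easing toward the target is a common alternative to lowering the frame rate, not something the report describes. The resulting screen coordinates would then be passed to the mouse library (the report uses Autopy).

```python
import math


def interp(value, in_min, in_max, out_min, out_max):
    """Linear mapping with clamping at both ends (like numpy.interp for one value)."""
    if value <= in_min:
        return out_min
    if value >= in_max:
        return out_max
    return out_min + (value - in_min) * (out_max - out_min) / (in_max - in_min)


def frame_to_screen(x, y, frame_w, frame_h, screen_w, screen_h, margin=100):
    """Map a fingertip pixel inside the reduced gesture box to screen pixels.

    The gesture box is the camera frame shrunk by `margin` pixels on every
    side, so the hand never has to leave the camera's view to reach an edge.
    """
    sx = interp(x, margin, frame_w - margin, 0, screen_w - 1)
    sy = interp(y, margin, frame_h - margin, 0, screen_h - 1)
    return sx, sy


class CursorFilter:
    """Suppress hand tremor: ignore tiny moves, then ease toward the target."""

    def __init__(self, dead_zone=3.0, smoothing=5.0):
        self.dead_zone = dead_zone  # movement (px) treated as noise
        self.smoothing = smoothing  # move 1/smoothing of the gap per frame
        self.pos = None

    def update(self, target):
        if self.pos is None:
            self.pos = target
            return self.pos
        px, py = self.pos
        tx, ty = target
        if math.hypot(tx - px, ty - py) < self.dead_zone:
            return self.pos  # too close to the last fixed point: keep it
        self.pos = (px + (tx - px) / self.smoothing, py + (ty - py) / self.smoothing)
        return self.pos


cursor = CursorFilter(dead_zone=3.0, smoothing=4.0)
print(cursor.update(frame_to_screen(320, 240, 640, 480, 1920, 1080)))
```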

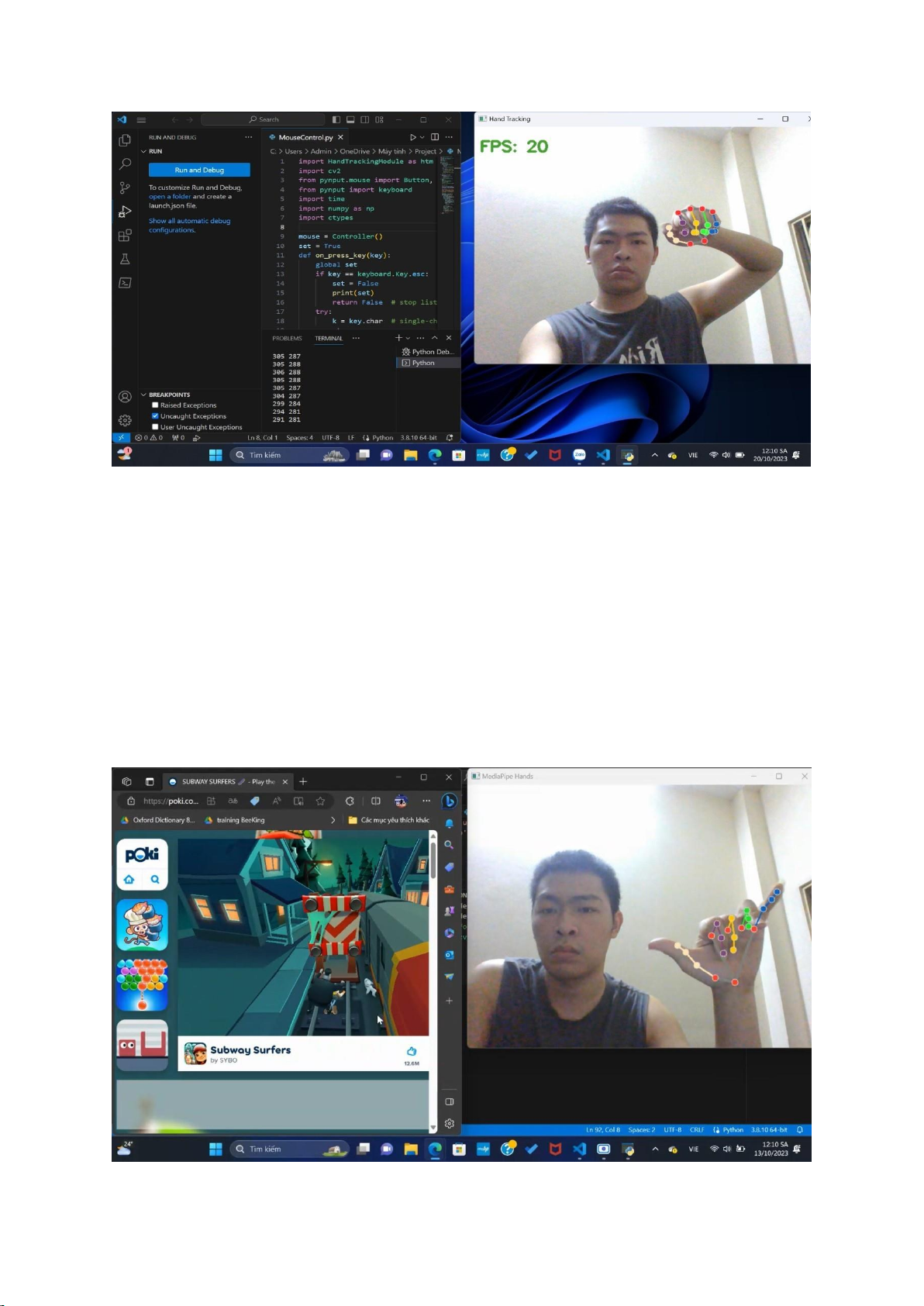

Fig 3. Using hand tracking to simulate mouse actions
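The click rule described above (a click when landmarks 8 and 12 come closer than a threshold) can be sketched as follows. The 40-pixel threshold is a hypothetical value, and the points are assumed to be pixel coordinates already converted from the landmark model's normalized output.

```python
import math

INDEX_TIP, MIDDLE_TIP = 8, 12  # landmark numbers used in the report (Fig 1)


def fingertip_distance(points, a=INDEX_TIP, b=MIDDLE_TIP):
    """Euclidean pixel distance between two landmarks given as (x, y) tuples."""
    (x1, y1), (x2, y2) = points[a], points[b]
    return math.hypot(x2 - x1, y2 - y1)


def is_click(points, threshold=40.0):
    """True when the index and middle fingertips are closer than `threshold` px."""
    return fingertip_distance(points) < threshold


# Usage with 21 placeholder points where only the two fingertips matter.
pts = [(0, 0)] * 21
pts[INDEX_TIP], pts[MIDDLE_TIP] = (100, 100), (130, 140)
print(fingertip_distance(pts), is_click(pts))
```

Because a fixed pixel threshold changes meaning as the hand moves toward or away from the camera, one option (not used in the report) is to divide the distance by a hand-size reference, such as the wrist-to-knuckle distance.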

Beyond controlling the mouse, HandTracking can also perform other simple operations, such as playing games that need only simple movements (moving in straight lines), for example Subway Surfers or Pac-Man, by assigning those simple gestures to the coordinates of landmark points. For games that require more manipulation, such as Angry Birds or Need for Speed, we can compare the coordinates of landmark points with each other and assign the results to in-game actions.
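Comparing landmark coordinates to drive a game could look like the sketch below, which classifies where the index fingertip points relative to a reference point (for example the index knuckle, landmark 5, or the wrist, landmark 0). The dead zone and the key mapping are hypothetical; sending actual key presses would require a keyboard-automation library, which is not shown.

```python
from typing import Optional


def direction(tip, base, dead_zone=30.0) -> Optional[str]:
    """Classify where `tip` points relative to `base` (both (x, y) pixels).

    Image y grows downward, so a negative dy means "up". Offsets smaller than
    `dead_zone` pixels on both axes count as neutral (None).
    """
    dx = tip[0] - base[0]
    dy = tip[1] - base[1]
    if max(abs(dx), abs(dy)) < dead_zone:
        return None
    if abs(dx) > abs(dy):
        return "right" if dx > 0 else "left"
    return "down" if dy > 0 else "up"


# Hypothetical mapping from directions to controls in a runner-style game.
GAME_KEYS = {"up": "jump", "down": "roll", "left": "move left", "right": "move right"}

print(GAME_KEYS.get(direction((200, 100), (200, 200))))
```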

Fig 4. Using hand tracking to simulate directional control on the computer

VIII. Future Trends and Challenges

A. Emerging Technologies in Hand Tracking

The field of hand tracking is constantly evolving, with new technologies and innovations shaping its future. Emerging technologies are pushing the boundaries of what hand tracking can achieve. These include the integration of artificial intelligence (AI) and machine learning to enhance the precision and robustness of hand tracking systems. Machine learning models can adapt to various hand shapes, sizes, and poses, making hand tracking more accurate and versatile. Additionally, lightweight, low-power hardware solutions are gaining traction, allowing hand tracking to be integrated into smaller, battery-powered devices. Gesture recognition techniques are also advancing, enabling more natural and intuitive hand interactions in virtual and augmented reality environments. These emerging technologies are poised to have a significant impact on various industries, from healthcare to gaming and beyond.

B. Security and Privacy Concerns

As hand tracking technology becomes more widespread, it raises security and privacy concerns that must be addressed. Unauthorized access to hand gesture data and biometric information is a growing concern, so protecting user data and ensuring its confidentiality are paramount. Furthermore, the potential misuse of gesture data for malicious purposes, such as unauthorized access or identity theft, raises red flags. To mitigate these concerns, robust security measures and encryption protocols need to be in place. User consent and data anonymization are also critical components in safeguarding privacy.

C. Scalability and Integration Challenges

The scalability and integration of hand tracking technology present practical challenges in deploying it across diverse devices and applications. Ensuring compatibility with various hardware platforms, screen sizes, and operating systems is essential for widespread adoption. Standardized application programming interfaces (APIs) and development tools play a pivotal role in simplifying integration efforts. Additionally, integrating hand tracking into existing systems, such as gaming, virtual reality, and industrial applications, poses unique challenges. The need for seamless integration and adaptability to different use cases underscores the importance of scalability and flexibility in hand tracking solutions.

Incorporating these considerations into the development and deployment of hand tracking technology will be vital for its success in an ever-evolving technological landscape.

IX. Conclusion

A. Key Takeaways

In this exploration of hand tracking in the context of computer drivers, we have examined the details of this evolving technology. Key takeaways include:

- Hand tracking has emerged as a transformative technology with a wide range of applications in computer drivers, from improving user interaction to enhancing accessibility features.

- Successful implementation of hand tracking relies on careful selection of hardware and software development kits, and on thorough system calibration.

- Extensive testing, debugging, and performance optimization are crucial to the effectiveness and reliability of hand tracking systems.

- User experience design plays a pivotal role in making hand tracking intuitive and efficient, with design principles and user-centered approaches guiding the way.

- Real-world case studies have demonstrated the versatility of hand tracking, from replacing traditional input devices to enhancing in-car infotainment systems and accessibility features.

- Emerging technologies are pushing the boundaries of hand tracking, promising more precise and versatile applications across various industries.

- Security and privacy concerns require vigilant measures to protect user data and prevent potential misuse.

- Scalability and integration challenges underscore the importance of adaptability and compatibility in deploying hand tracking across different platforms and applications.

B. The Future of Hand Tracking in Computer Drivers

1. The journey does not end here. Looking ahead, the trajectory of hand tracking in computer drivers is exciting and promising. Hand tracking will likely continue to evolve, driven by technological advancements and user demands, and its role in redefining user interaction, accessibility, and convenience is significant.

2. In the coming years, we anticipate:

- Further refinement of hand tracking algorithms, offering even greater accuracy and responsiveness.

- An expanding scope of applications, from gaming and virtual reality to industrial automation and healthcare.

- Heightened security and privacy measures to safeguard user data.

- Enhanced compatibility and integration with emerging technologies, such as augmented reality and IoT.

Hand tracking is well positioned to revolutionize the way we interact with our computers and devices. As technology continues to advance, it is a thrilling journey to be part of, and we eagerly anticipate the innovations that lie ahead.