
My life as an educator at NUS

Ganesh

• Masters & PhD from National University of Singapore (NUS)

• Several years in Industry/Academia

• Architect, Manager, Technology Evangelist, DevOps Lead

• Talks/workshops in America, Europe, Australia, Asia

• Software Engineering, Cloud Computing, Artificial Intelligence, DevOps, Digital Humanities

• Holds many industry certifications

• Kathakali Dancer, Travel Vlogger, Speaker

GANESHNIYER

http://ganeshniyer.github.io

STeAdS: Software Engineering and Technological Advancements for Society

• Software Engineering: Agile Software, DevOps Engineering, Project Management

• Technological Advancements: Cloud Computing, AI (Edge AI, ML/DL/GAN), Web/Mobile

• Society: Art & Culture, Healthcare, Education

https://ganeshniyer.com/

Analysis of Student-LLM Interaction in a Software Engineering Project

Agrawal Naman, Ridwan Shariffdeen, Guanlin Wang, Sanka Rasnayaka, Ganesh Neelakanta Iyer

School of Computing, National University of Singapore

International Conference on Software Engineering (ICSE 2025), LLM4Code Workshop, Canada, April 2025

Ridwan Shariffdeen Guanlin Wang Sanka Rasnayaka Ganesh

International Conference in Software Engineering (ICSE 2025),

LLM4Code Workshop, Canada, April 2025 6 Background of Study 13-week long SE Project 126 students in teams of 6 LLM usage was encouraged

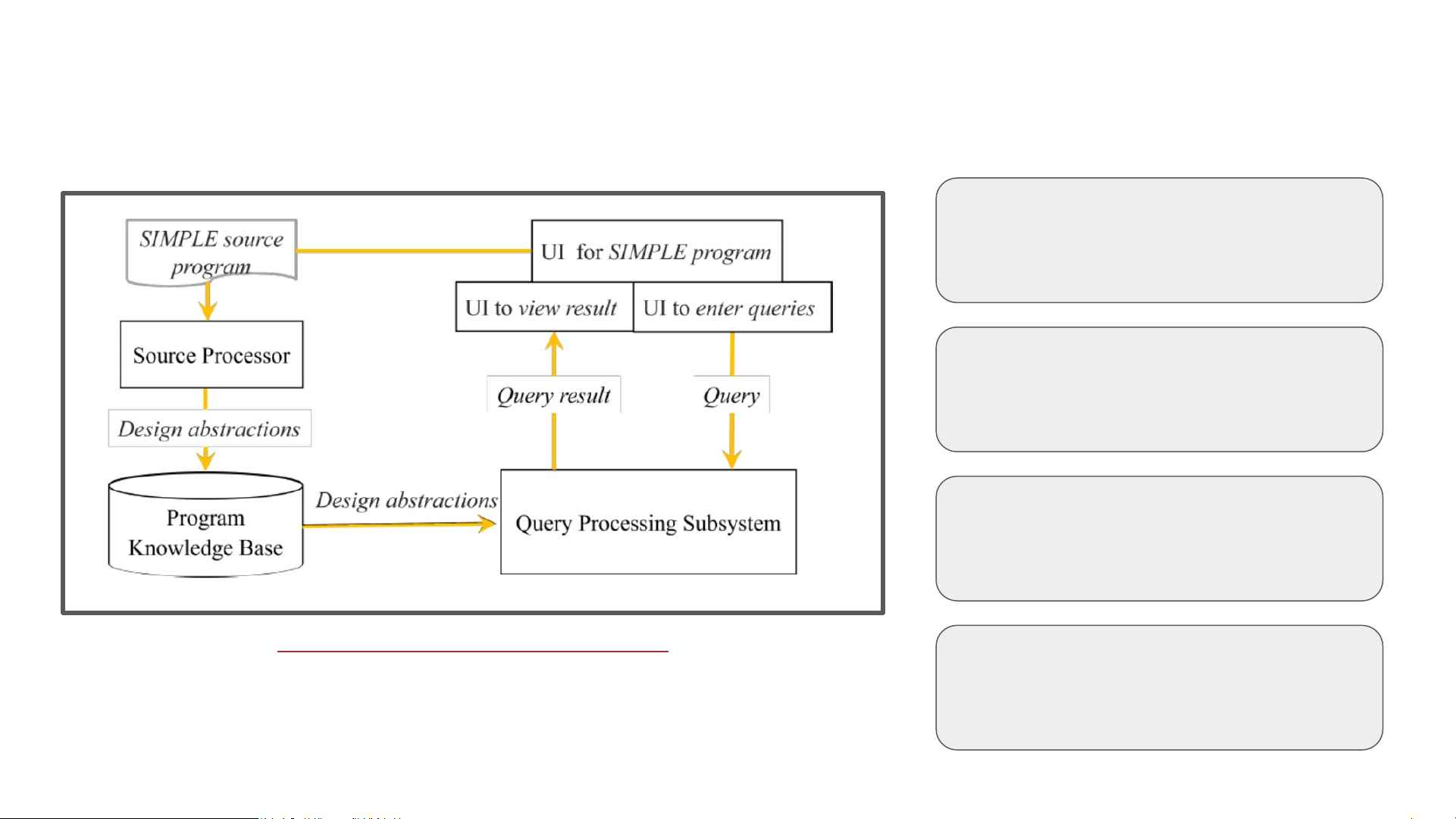

Architecture of the Static Programme Analyzer (SPA) Premium accounts provided 7 Research Questions

How do ChatGPT (conversational) and GitHub Copilot (autocomplete) compare?

How does the LLM-generated code evolve across the duration (3 milestones) of the Project?

Does the student-LLM interaction lead towards positive outcomes?

Data

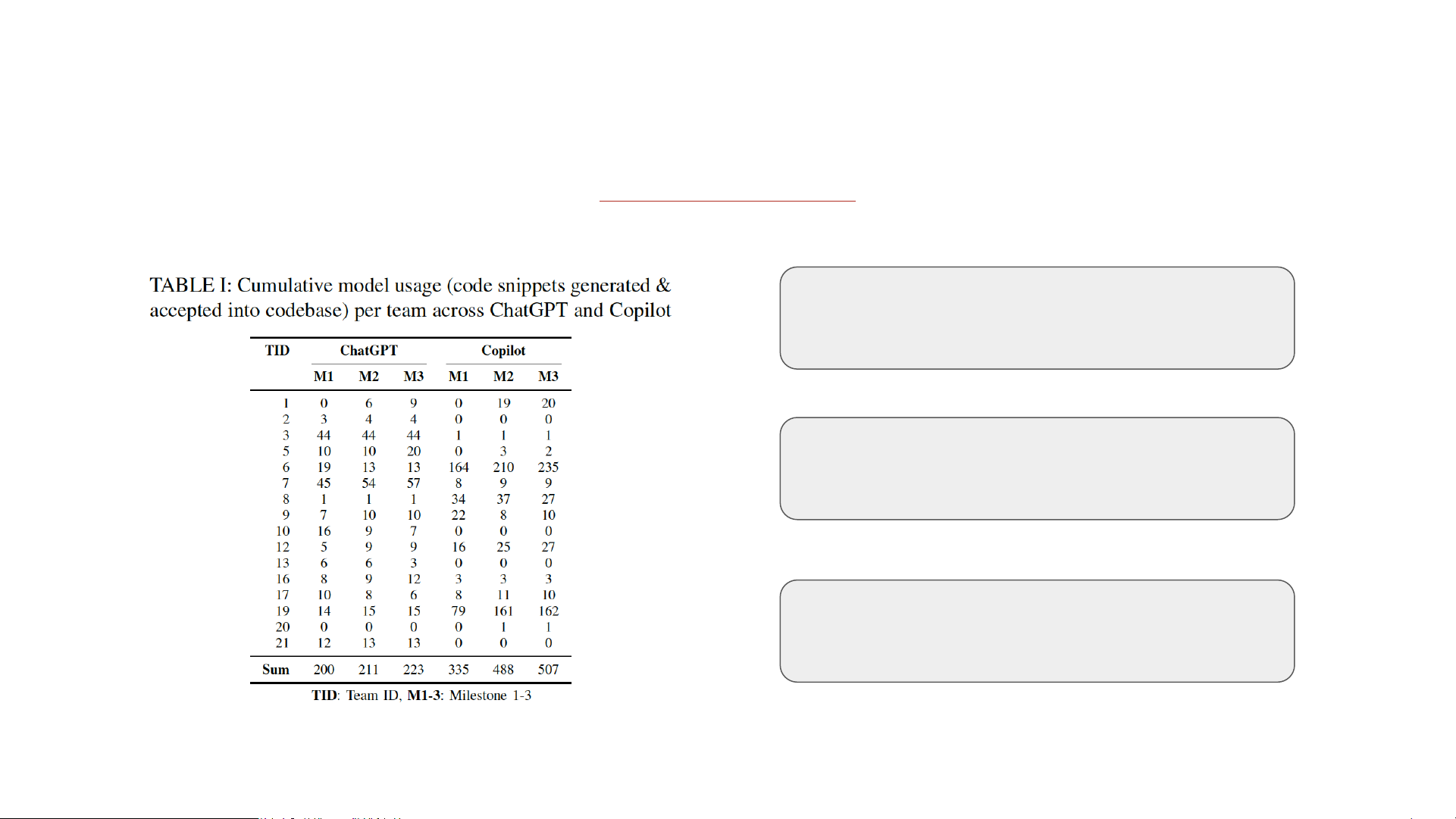

126 UG students across 21 teams

Project with three major deadlines 730 code snippets 62 ChatGPT conversations 582,117 lines of TOTAL code

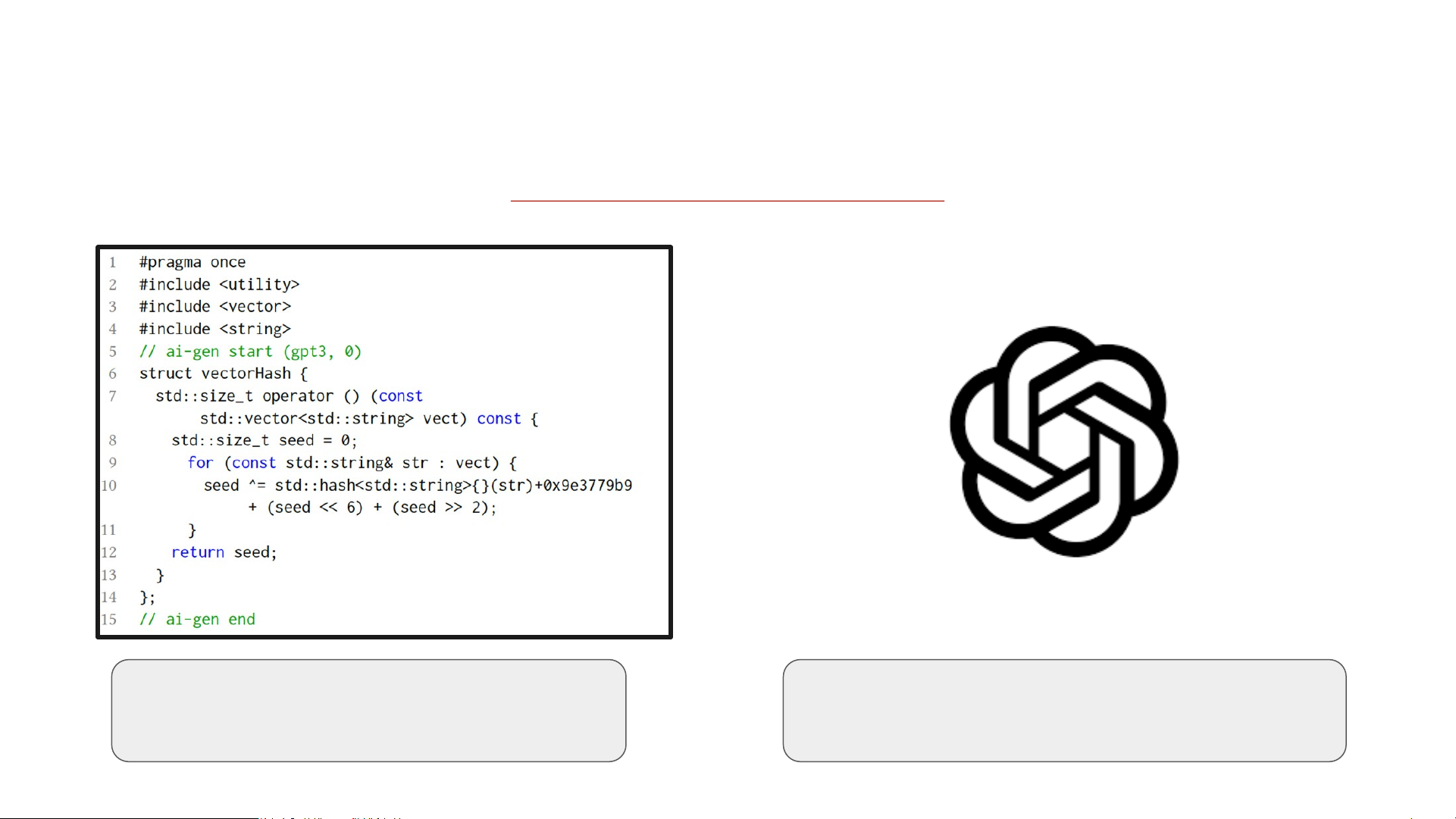

40,482 (~7%) of the code generated with the help of LLM 10 Methodology

Capturing LLM-generated code at each of the 3 Milestones

Custom tagging in the source code

Retrieving generated code snippets from the ChatGPT platform

Findings

Usage of LLMs across the project

730 snippets in total

535 snippets in the first milestone

507 Copilot snippets in total

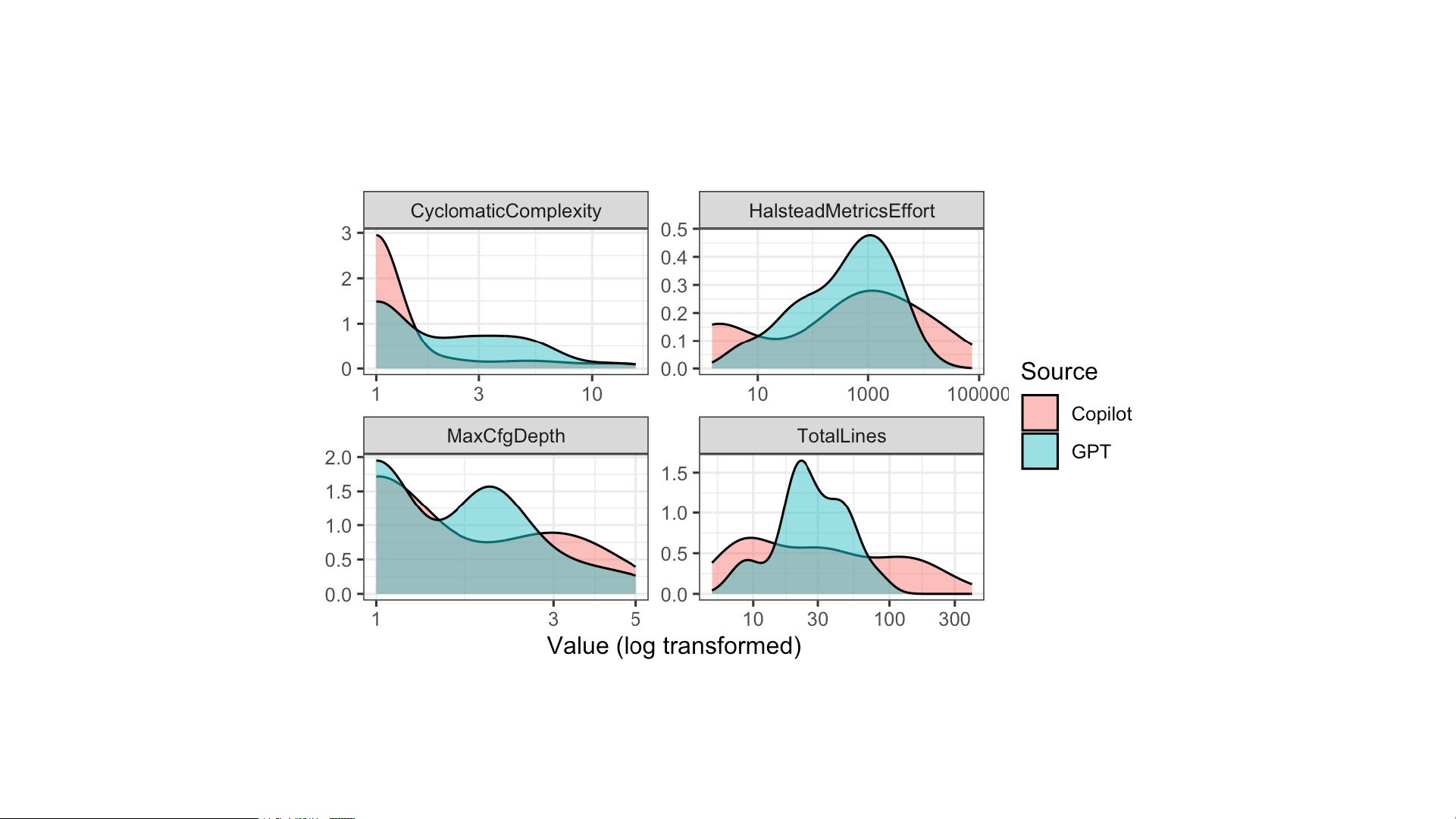

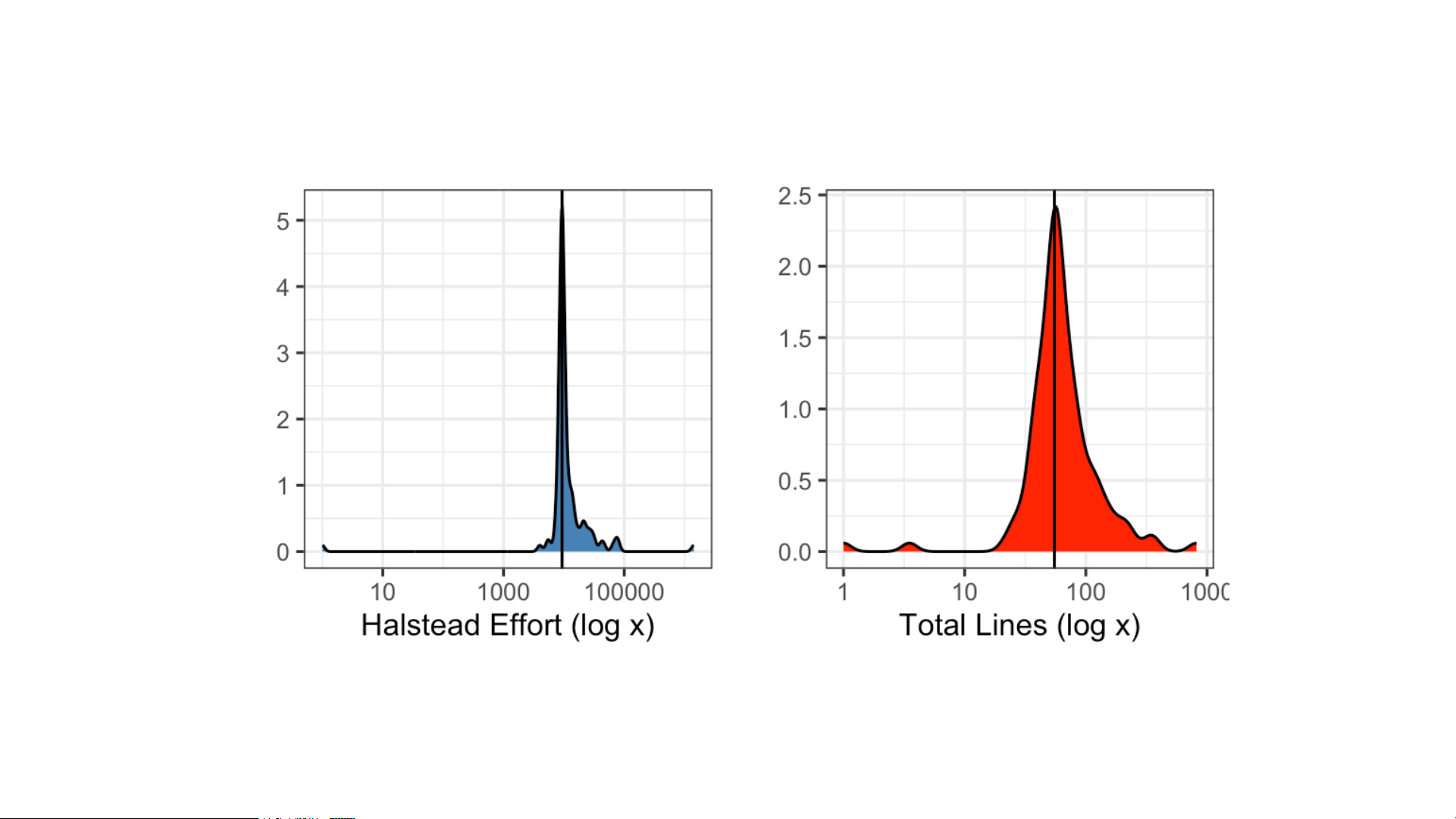

How complex is the LLM-generated code?

01 Total Lines of Code (LOC): code verbosity.

02 Cyclomatic Complexity: measures the number of linearly independent paths within the code.

03 Maximum Control Flow Graph (CFG) Depth: measures the depth of nested structures within the code.

04 Halstead Effort: mental effort required to understand and modify the code.
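The four metrics above can be illustrated with a minimal sketch. This is not the tooling used in the study: it assumes Python source analysed with the standard `ast` and `tokenize` modules, and all function names are hypothetical. The choice of which nodes count as branches, the use of nesting depth as a proxy for maximum CFG depth, and the operator/operand split are assumptions. Halstead Effort follows the usual definition E = D × V, with V = N log2(n) and D = (n1 / 2) × (N2 / n2).

```python
import ast
import io
import keyword
import math
import tokenize

# Assumption: these node types count as decision points; real tools differ slightly.
BRANCH_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler,
                ast.IfExp, ast.comprehension)
# Assumption: these node types open a nested control structure.
NESTING_NODES = (ast.If, ast.For, ast.While, ast.Try, ast.With)


def lines_of_code(source: str) -> int:
    """Count non-blank lines."""
    return sum(1 for line in source.splitlines() if line.strip())


def cyclomatic_complexity(source: str) -> int:
    """1 + decision points; each extra and/or operand adds one more path."""
    score = 1
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, BRANCH_NODES):
            score += 1
        elif isinstance(node, ast.BoolOp):
            score += len(node.values) - 1
    return score


def max_nesting_depth(source: str) -> int:
    """Deepest chain of nested control structures (elif counts as nested)."""
    def depth(node: ast.AST, current: int) -> int:
        if isinstance(node, NESTING_NODES):
            current += 1
        children = [depth(child, current) for child in ast.iter_child_nodes(node)]
        return max([current] + children)
    return depth(ast.parse(source), 0)


def halstead_effort(source: str) -> float:
    """Effort = Difficulty * Volume over operator and operand tokens."""
    operators, operands = [], []
    for tok in tokenize.generate_tokens(io.StringIO(source).readline):
        is_keyword = tok.type == tokenize.NAME and keyword.iskeyword(tok.string)
        if tok.type == tokenize.OP or is_keyword:
            operators.append(tok.string)
        elif tok.type in (tokenize.NAME, tokenize.NUMBER, tokenize.STRING):
            operands.append(tok.string)
    n1, n2 = len(set(operators)), len(set(operands))
    if n1 == 0 or n2 == 0:
        return 0.0
    volume = (len(operators) + len(operands)) * math.log2(n1 + n2)
    difficulty = (n1 / 2) * (len(operands) / n2)
    return difficulty * volume


SAMPLE = """
def classify(n):
    if n < 0 and n % 2:
        return "neg-odd"
    for i in range(n):
        if i == 3:
            return "hit"
    return "none"
"""

print(lines_of_code(SAMPLE), cyclomatic_complexity(SAMPLE), max_nesting_depth(SAMPLE))
print(round(halstead_effort(SAMPLE), 1))
```

On the sample, the `if`, the `and`, the `for`, and the inner `if` each add one path to the base path, and the inner `if` sits two levels deep inside the `for`.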

A.1 Complexity Analysis: Result 1: Copilot vs ChatGPT

Figure 1: Density Plot for measured key metrics (y-axis: Probability Density)

Copilot-generated code tends to be more complex, sometimes significantly exceeding typical student-generated complexity levels.

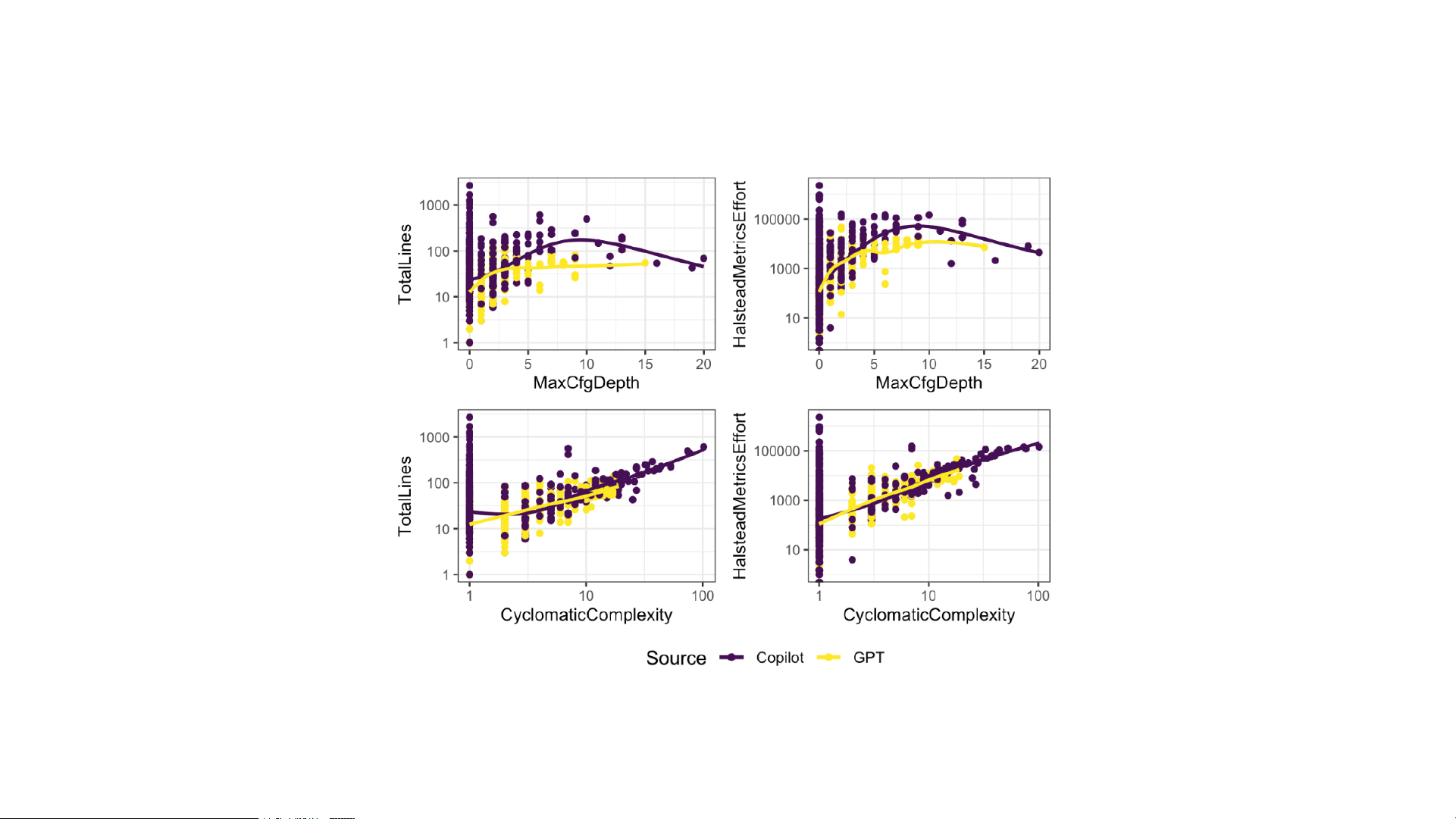

A.1 Complexity Analysis: Result 1: Copilot vs ChatGPT

Figure 2: Comparison of ChatGPT and Copilot Complexity Across Various Complexity Measures

ChatGPT produces more concise and readable code than Copilot, requiring lower cognitive effort for comprehension.

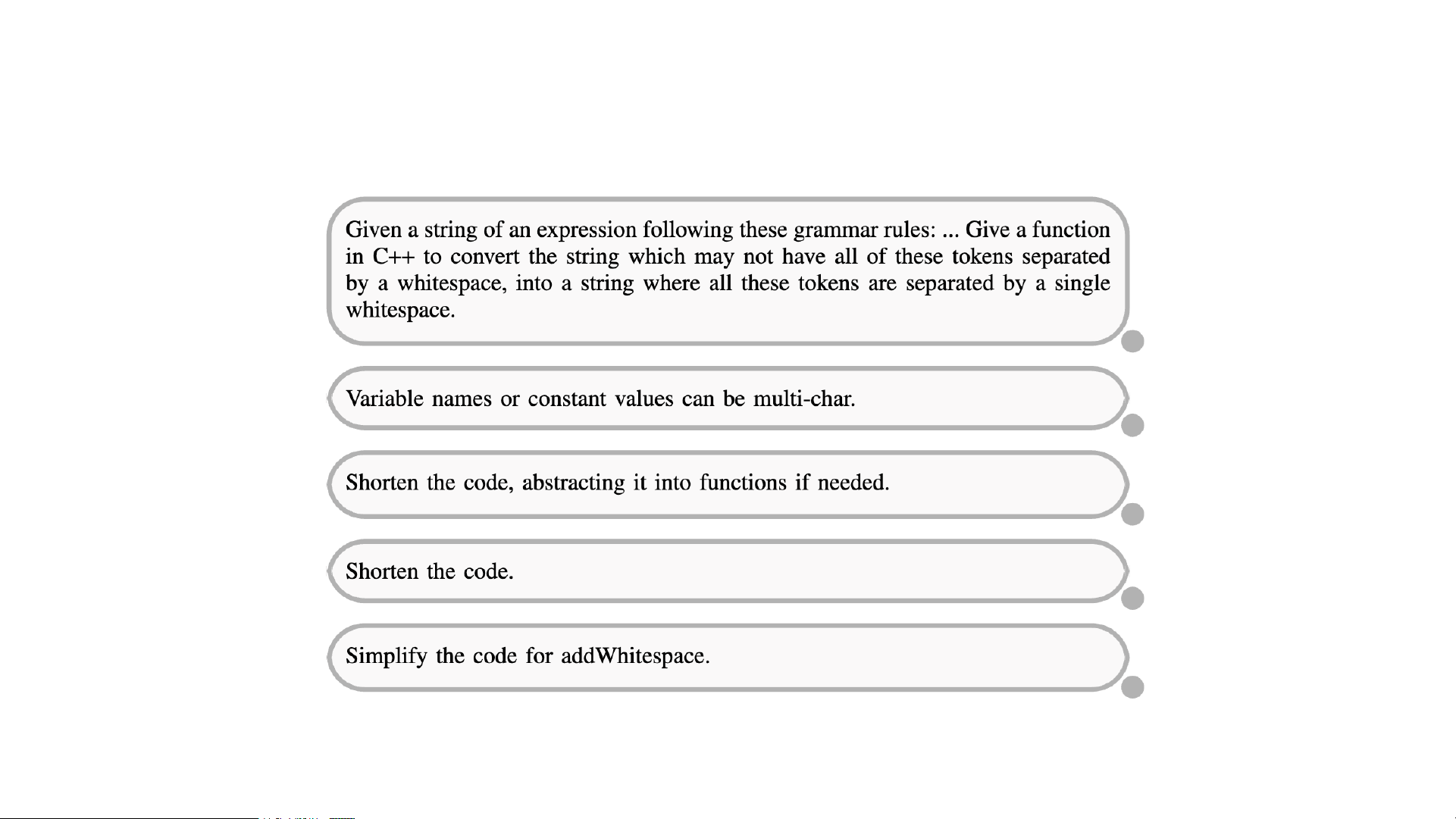

A.2 Complexity Analysis: Result 2: Iterative Refinement

Example: ChatGPT’s conversational interface enables students to iteratively refine code, making it more concise and modular.

How was the generated code used in the Project?

01 Extent of Code Modification

• How much do students alter AI-generated code?

• Do they add new functionality, simplify for readability, or restructure it to fit project requirements?

02 Code Similarity Trends over Milestones

• Does reliance on ChatGPT change over time?

• Do students modify AI-generated code less as they progress?

03 In-depth Analysis of Conversations

• How do students interact with ChatGPT across multiple prompts?

• Do they refine code gradually, or is the most useful version generated early in the conversation?

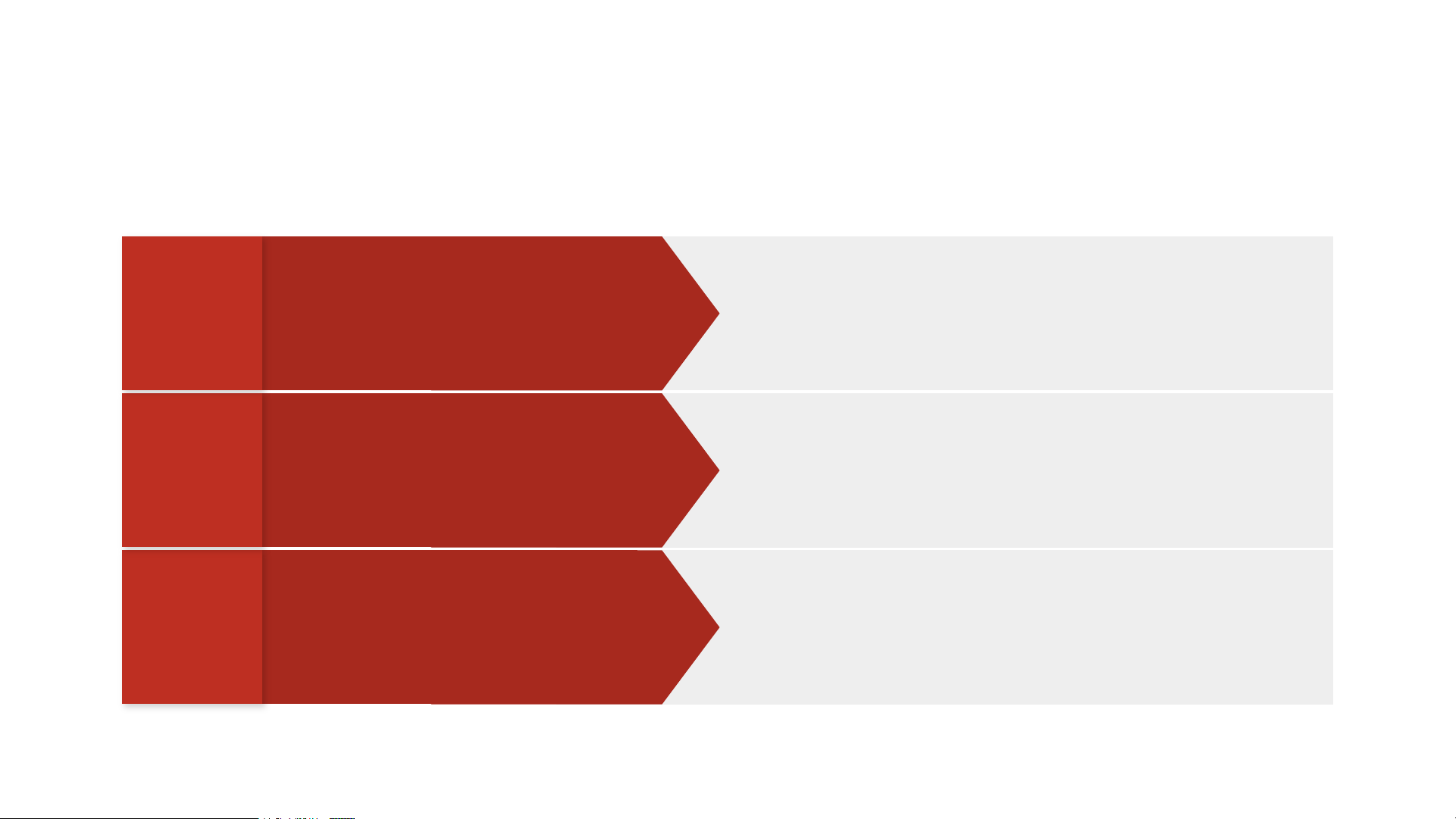

B.1 Extent of Code Modification

Figure 4: Distribution of Difference Complexity Measures between Repo and GPT Code with Log Transformed x-axis (y-axis: Probability Density)

Students frequently increase the complexity of ChatGPT-generated code, refining and expanding it for project needs.

B.2 Code Similarity Trends over Milestones: Metrics

01 Jaccard Similarity: measures the overlap between two sets of structural elements extracted using Tree-sitter.

02 Longest Common Subsequence (LCS): captures the longest matching sequence of tokens between code snippets, with pairs above 90% similarity considered equivalent.
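The two similarity metrics can be sketched as follows. This is an illustration under stated assumptions, not the study's pipeline: a simple regex tokenizer (the hypothetical `tokens` helper) stands in for Tree-sitter extraction, and LCS similarity is normalised by the length of the longer token sequence, which the slides do not specify. The 0.9 threshold mirrors the 90% cut-off mentioned above.

```python
import re

EQUIVALENCE_THRESHOLD = 0.9  # from the slide: pairs above 90% count as equivalent


def tokens(code: str) -> list[str]:
    """Hypothetical stand-in for Tree-sitter: identifiers, numbers, punctuation."""
    return re.findall(r"[A-Za-z_]\w*|\d+|[^\s\w]", code)


def jaccard(a: set, b: set) -> float:
    """Size of the intersection divided by size of the union."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def lcs_length(a: list, b: list) -> int:
    """Classic dynamic-programming LCS, keeping only one previous row."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def lcs_similarity(a: list, b: list) -> float:
    """Assumption: normalise by the longer sequence."""
    longest = max(len(a), len(b))
    return lcs_length(a, b) / longest if longest else 1.0


generated = tokens("int add(int a, int b) { return a + b; }")
integrated = tokens("int add(int a, int b) { return b + a; }")

print(jaccard(set(generated), set(integrated)))
print(lcs_similarity(generated, integrated))
print(lcs_similarity(generated, integrated) > EQUIVALENCE_THRESHOLD)
```

The example pair shows why both metrics are useful: swapping the operands leaves the token sets identical, so Jaccard cannot tell the snippets apart, while the order-sensitive LCS score drops below the threshold.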

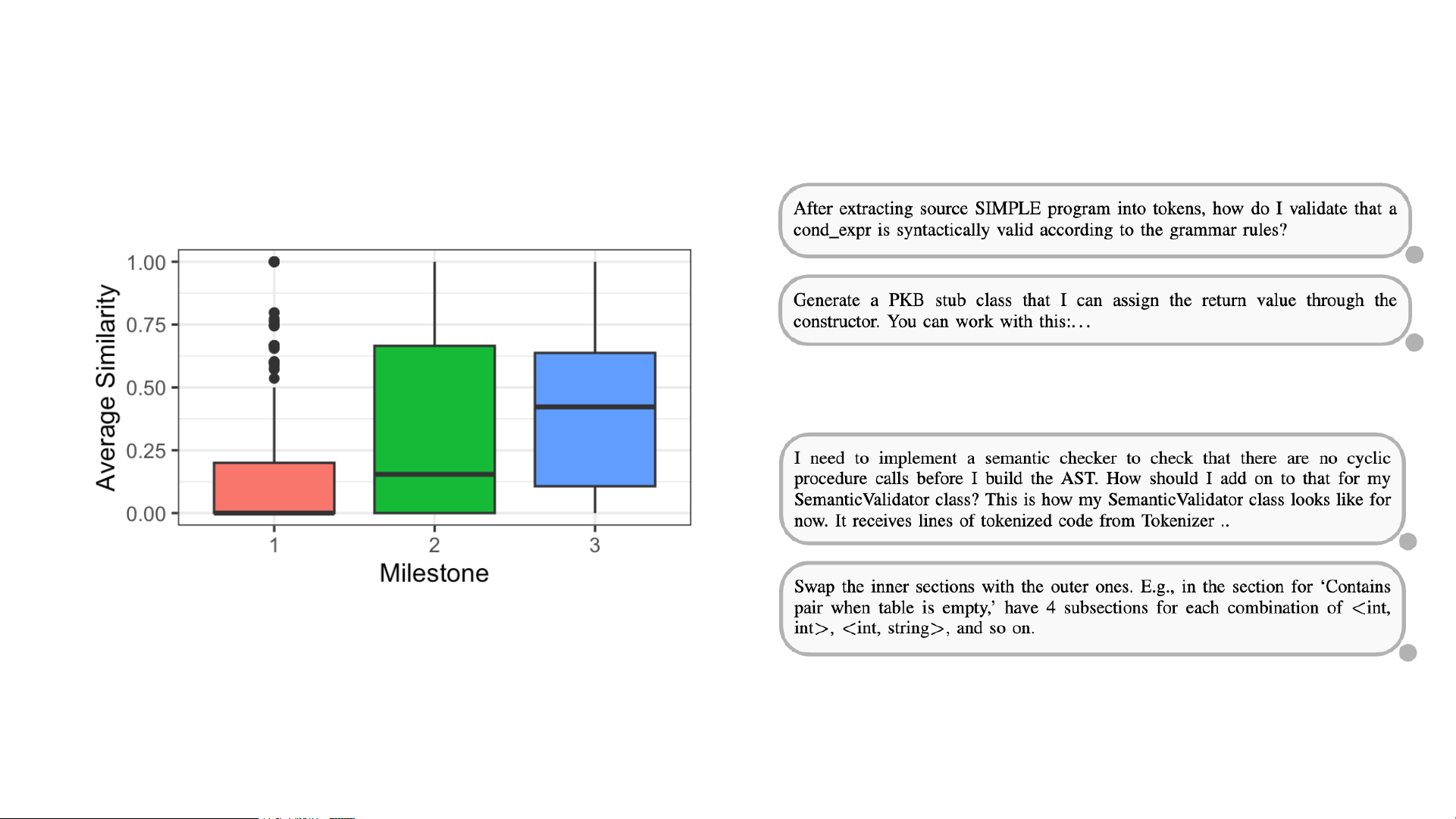

B.2 Code Similarity Trends over Milestones: Result

Prompts by teams 5 and 13, during MS1

Figure 5: Similarity of generated and integrated code across milestones

Prompts by teams 5 and 13, during MS2 & MS3

Students’ AI usage evolved from exploration to seamless integration, with increasing similarity scores across milestones.

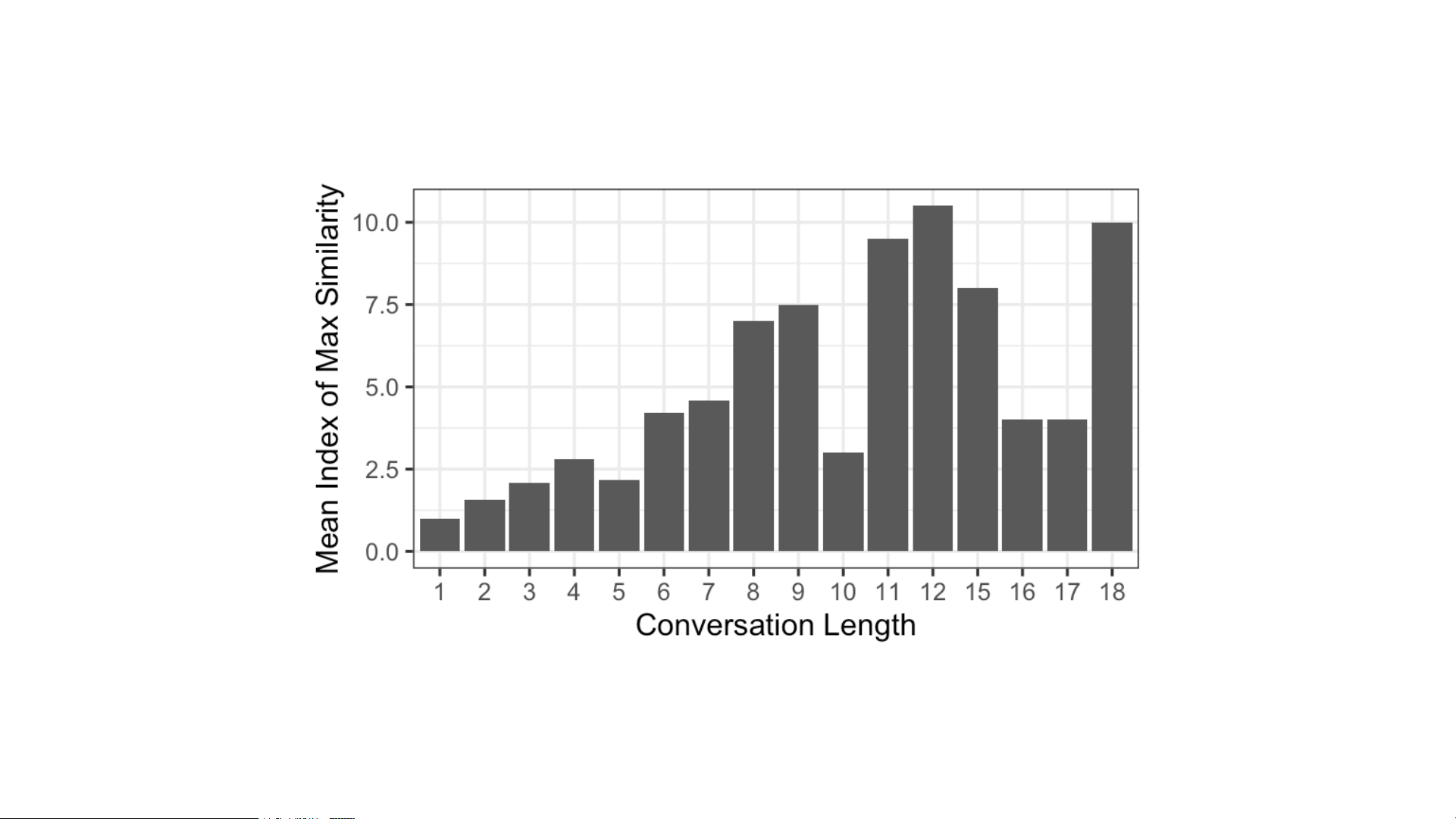

B.3 In-depth Analysis of Conversations

Figure 6: Mean Index of the Generated Code Most Similar to the Repository Code for Conversations of Different Lengths

Students actively refine ChatGPT-generated code through multiple exchanges before integrating it into their projects.
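A rough sketch of how a "mean index of the most similar generated code" could be computed per conversation length. The data layout, the function names, the 1-based index, and the use of `difflib.SequenceMatcher` as the similarity measure are all assumptions for illustration; the study's own similarity metrics are the ones described under B.2, and the toy conversations below are made up.

```python
from collections import defaultdict
from difflib import SequenceMatcher
from statistics import mean


def most_similar_index(snippets: list[str], repo_code: str) -> int:
    """1-based position of the generated snippet closest to the repository code."""
    scores = [SequenceMatcher(None, s, repo_code).ratio() for s in snippets]
    return scores.index(max(scores)) + 1


def mean_index_by_length(conversations: list[tuple[list[str], str]]) -> dict:
    """Group conversations by number of generated snippets, then average the index."""
    by_length = defaultdict(list)
    for snippets, repo_code in conversations:
        by_length[len(snippets)].append(most_similar_index(snippets, repo_code))
    return {length: mean(idx) for length, idx in sorted(by_length.items())}


# Toy data: (snippets generated in order, code finally found in the repository).
conversations = [
    (["a = 1", "a = 1\nb = 2", "a = 1\nb = 2\nc = 3"], "a = 1\nb = 2\nc = 3"),
    (["x = 0", "x = 0\ny = 1", "print(x)"], "x = 0\ny = 1"),
    (["foo()"], "foo()"),
]

print(mean_index_by_length(conversations))
```

A mean index close to the conversation length would suggest that the version students integrate tends to appear late in the exchange, which is the pattern the finding above describes.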