
VIETNAM NATIONAL UNIVERSITY – HO CHI MINH CITY

INTERNATIONAL UNIVERSITY

SCHOOL OF ELECTRICAL ENGINEERING

DEVELOP A DEEPFAKE APPLICATION TO EXPRESS EMOTIONS OF IMAGE THROUGH VOICE CONTROL

BY PHẠM HUỲNH ĐỨC

EEACIU19034

A SENIOR PROJECT SUBMITTED TO THE SCHOOL OF ELECTRICAL ENGINEERING IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF BACHELOR OF ELECTRICAL ENGINEERING

HO CHI MINH CITY, VIET NAM

JANUARY, 2024

DEVELOP A DEEPFAKE APPLICATION TO EXPRESS EMOTIONS OF IMAGE THROUGH VOICE CONTROL

BY PHẠM HUỲNH ĐỨC

EEACIU19034

Under the guidance and approval of the committee, and approved by its members, this thesis has been accepted in partial fulfillment of the requirements for the degree.

Approved:

______________________________________ _ Chairperson

______________________________________ _ Committee member

______________________________________ _ Committee member

______________________________________ _ Committee member

______________________________________ _ Committee member

HONESTY DECLARATION

My name is Pham Huynh Duc, student ID: EEACIU19034. I declare that, apart from the acknowledged references, this senior project does not use language, ideas, or other original material from anyone else, and has not been previously submitted to any other educational or research program or institution. I fully understand that any writing in this senior report that contradicts the above statement will automatically lead to rejection from the EE program at the International University – Vietnam National University Ho Chi Minh City.

@Pham Huynh Duc, 25/01/2024.

TURNITIN DECLARATION

Name of Student: Phạm Huỳnh Đức

Student ID: EEACIU19034

Date: 25/01/2024

Student Name: Pham Huynh Duc

Advisor Name: Nguyen Ngoc Truong Minh

ACKNOWLEDGMENT

It is with deep gratitude and appreciation that I acknowledge the professional guidance of Dr. Nguyen Ngoc Truong Minh, Head of the Department of Telecommunications, School of Electrical Engineering. His constant encouragement and support helped me to achieve my goal.

I would also like to express my gratitude to the professors and staff at International University (VNU-HCMC) and the School of Electrical Engineering. Their invaluable knowledge and guidance supported me and provided me with the necessary skills and experience to complete my senior project.

TABLE OF CONTENTS

HONESTY DECLARATION

TURNITIN DECLARATION

ACKNOWLEDGMENT

TABLE OF CONTENTS

LIST OF TABLES

LIST OF FIGURES

ABBREVIATIONS AND NOTATIONS

ABSTRACT

CHAPTER I INTRODUCTION

1.1 Background

1.2 Goal and Objectives

1.3 Structure of the report

CHAPTER II DESIGN SPECIFICATIONS AND STANDARDS

2.1 Design Specifications and Standards

2.2 Realistic Constraints

CHAPTER III PROJECT MANAGEMENT

3.1 Budget and Cost Management Plan

3.2 Project Schedule

3.3 Resources planning

CHAPTER IV LITERATURE REVIEW

4.1 Facial Recognition and Attendance System using dlib and face_recognition libraries

4.2 Generative Adversarial Networks: Introduction and Outlook

4.3 GANimation: Anatomically-aware Facial Animation from a Single Image

4.4 A Voice Assistance Based News Web Application by using AI Tools

CHAPTER V METHODOLOGY

5.1 Training the model

5.2 Processing fake video

5.3 Using speech recognition package in Python to analyze speech information

CHAPTER VI EXPECTED RESULT

6.1 Training the model

6.2 Processing fake video

6.3 Using speech recognition package in Python to analyze speech information

CHAPTER VII CONCLUSION AND FUTURE WORK

7.1 Conclusion

7.2 Future work

REFERENCES

LIST OF TABLES

Table 1: Project software, tool and model specifications

Table 2: Realistic constraints of the project

Table 3: Project schedule

LIST OF FIGURES

Figure 1: Basic structure of Generative Adversarial Network model [4]

Figure 2: Some of the developed GAN structures [4]

Figure 3: Overview of approach to generate photo-realistic conditioned images [5]

Figure 4: Attention-based generator [5]

Figure 5: Flowchart 1, Overall concept of the project

Figure 6: Flowchart 2, Video and speech processing

Figure 7: Flowchart 3, GAN algorithm in generating fake images

Figure 8: Preparation of the CelebA data set

Figure 9: Face recognition with a rectangular boundary

Figure 10: Comparison of the individual steps in this section

Figure 11: Data processing and preparing for training

Figure 12: Data after processing

Figure 13: Training process in Colab

Figure 14: AU vector parameters extracted by Open Face Lab [11]

Figure 15: Creating a video with ffmpeg

Figure 16: Result of a dataset after operating

Figure 17: AU vector parameters of a training image

Figure 18: Data set after preparing and obtaining the AU vector

Figure 19: Result of the pretrained model after training

Figure 20: User's input image after processing

Figure 21: A set of fake images after applying the model

Figure 22: Comparison of the output result for each step

Figure 23: A set of full-context images

Figure 24: Output of taking speech data

Figure 25: Words split from the input sentence

Figure 26: Emotion function called (1)

Figure 27: Emotion function called (2)

Figure 28: Applying facial landmarks to change the face gesture

Figure 29: Applying a first order motion GAN to generate a fake image

ABBREVIATIONS AND NOTATIONS

API: Application Programming Interface

AU: Action Unit

CNN: Convolutional Neural Network

CSS: Cascading Style Sheets

EC: Emotion Control

FAS: Facial Action System

GAN: Generative Adversarial Network

ABSTRACT

In our digital age, where we seek to make images more lifelike and filled with emotion, this project introduces a user-friendly application. The application uses deepfake technology to create images that are both realistic and emotionally expressive.

The core innovation of this project lies in letting users input an image of a person and then, through voice commands, control the emotion shown on the face in that image. Whether conveying happiness, sadness, excitement, or any other emotion, users can express their feelings through digital avatars. The project's technical foundation is based on advanced deepfake algorithms, voice recognition, and real-time image processing. We explore the intricacies of these technologies and integrate them so that the resulting images are more engaging and emotionally resonant for users.

By combining deep learning and voice control, we enable users to add genuine emotions to images, offering a new dimension to visual expression. We also examine the technical aspects of deepfake technology, voice recognition, and real-time image manipulation to ensure an intuitive and engaging experience. In general, our project presents an application of technology to convey emotions through images, making digital expression more relatable and immersive.

CHAPTER I INTRODUCTION

In this chapter, we discuss the background and purpose of this application, followed by the goals and objectives of the project.

1.1 Background

Emotions play a critical role in the evolution of consciousness and the operations of all mental processes. Types of emotion relate differentially to types or levels of consciousness [1]. Expressing them through images is a potent way to convey feelings. Altering the emotion in a single portrait image holds considerable potential, offering new avenues for artistic expression, storytelling, and personalization, and creating a more realistic and interactive visual experience. The idea of an application that lets users interact with portrait images through voice commands to customize emotions is both captivating and innovative. This concept aims to give users a sense of virtually attending a video call with the people depicted in the images.

Thanks to advancements in computing power and the evolution of deep learning in computer vision, Generative Adversarial Networks (GANs) were introduced in 2014 [2]. This breakthrough paved the way for human-machine interaction with real images, making such an application feasible on various platforms such as Windows, Web, and Mobile. In essence, the primary objective of this project is to design and develop an application that facilitates human-machine interaction, allowing users to modify emotions in portrait images using voice commands. The application aims to harness GANs and deep learning to create a seamless and engaging user experience.

In conclusion, our project aims to contribute to the evolving landscape of human-machine interaction, blending artistic expression, storytelling, and personalization in a user-friendly application. The potential for realistic visual interaction adds an exciting aspect to how we convey and experience emotions in digital imagery.

1.2 Goal and Objectives

1.2.1. Goal

The main goal of this project is to develop an application that interacts with the user in real time through speech. Based on the user's speech input, the application displays the corresponding emotion on the face in the input image.

1.2.2. Objectives

1.2.2.1. Training the Generative Adversarial Network model

- Data preparation

- Obtaining the action unit vector for each training image

- Training process

1.2.2.2. Building a deepfake video

- Extracting the input face image

- Processing the input face image by applying the pretrained model

- Creating a deepfake video from the resulting images

1.2.2.3. Developing a speech module

- Taking the speech data from users

- Building an emotion function for the relevant words received

- Applying the function to display a deepfake video
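As a rough illustration of the speech-module objectives above, the sketch below shows one way the words received from the user could be mapped to an emotion and then to a video. The keyword table, the function names `detect_emotion` and `video_for_emotion`, and the video file naming are assumptions made for illustration; this part of the report does not specify them.

```python
# Hypothetical keyword table; the actual words used by the project are not listed here.
EMOTION_KEYWORDS = {
    "happy": "happiness",
    "smile": "happiness",
    "sad": "sadness",
    "angry": "anger",
    "surprised": "surprise",
}


def detect_emotion(sentence, default="neutral"):
    """Return the emotion label for the first known keyword in the sentence."""
    for word in sentence.lower().split():
        # Drop trailing or leading punctuation left over from the transcript.
        word = word.strip(".,!?;:")
        if word in EMOTION_KEYWORDS:
            return EMOTION_KEYWORDS[word]
    return default


def video_for_emotion(emotion):
    """Map an emotion label to a (hypothetical) pre-rendered deepfake video file."""
    return f"{emotion}.mp4"


if __name__ == "__main__":
    label = detect_emotion("Please look happy!")
    print(label, video_for_emotion(label))
```

A plain dictionary lookup keeps the mapping easy to extend with new words. In a real pipeline, the input sentence would come from the speech recognition step rather than a hard-coded string.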

1.3 Structure of the report

The report is organized as follows:

• Chapter 1: Introduction

• Chapter 2: Design specifications and standards

• Chapter 3: Project management

• Chapter 4: Literature review

• Chapter 5: Methodology

• Chapter 6: Expected results

• Chapter 7: Conclusion and future work

CHAPTER II DESIGN SPECIFICATIONS AND STANDARDS

2.1 Design Specifications and Standards

Table 1: Project software, tool and model specifications

| Software | Specifications |
| --- | --- |
| Programming language | Python, C++ |
| IDE / Environment | Google Colab and Visual Studio Code |
| Model | HOG, MTCNN, CelebA, EC-GAN |
| Packages used | OpenCV, NumPy, PyTorch, voice recognition, skimage, deepface lab, demo, matplot |

2.2 Realistic Constraints

Table 2: Realistic constraints of the project

| Constraint | Description |
| --- | --- |
| Entertainment | Creating an entertainment application for users to interact with in real time |
| Environmental | Working only on software; using Google Colab and public data sources |
| Health and Safety | |

CHAPTER III PROJECT MANAGEMENT

The project management plan is a single, formal, dynamic document that outlines how the project is to be managed, executed, and controlled. It contains the overall project governance and related management plans and procedures, timelines, and the methods and accountabilities for planning, monitoring, and controlling the project as it progresses. This document evolves with the project and will be updated to reflect any relevant changes throughout project execution. It should ensure there are no surprises during execution about how the project is managed or how decisions are made.

3.1 Budget and Cost Management Plan

This project is working on Google Colab with a large GPU usage, it costs USD 10.5 for 100

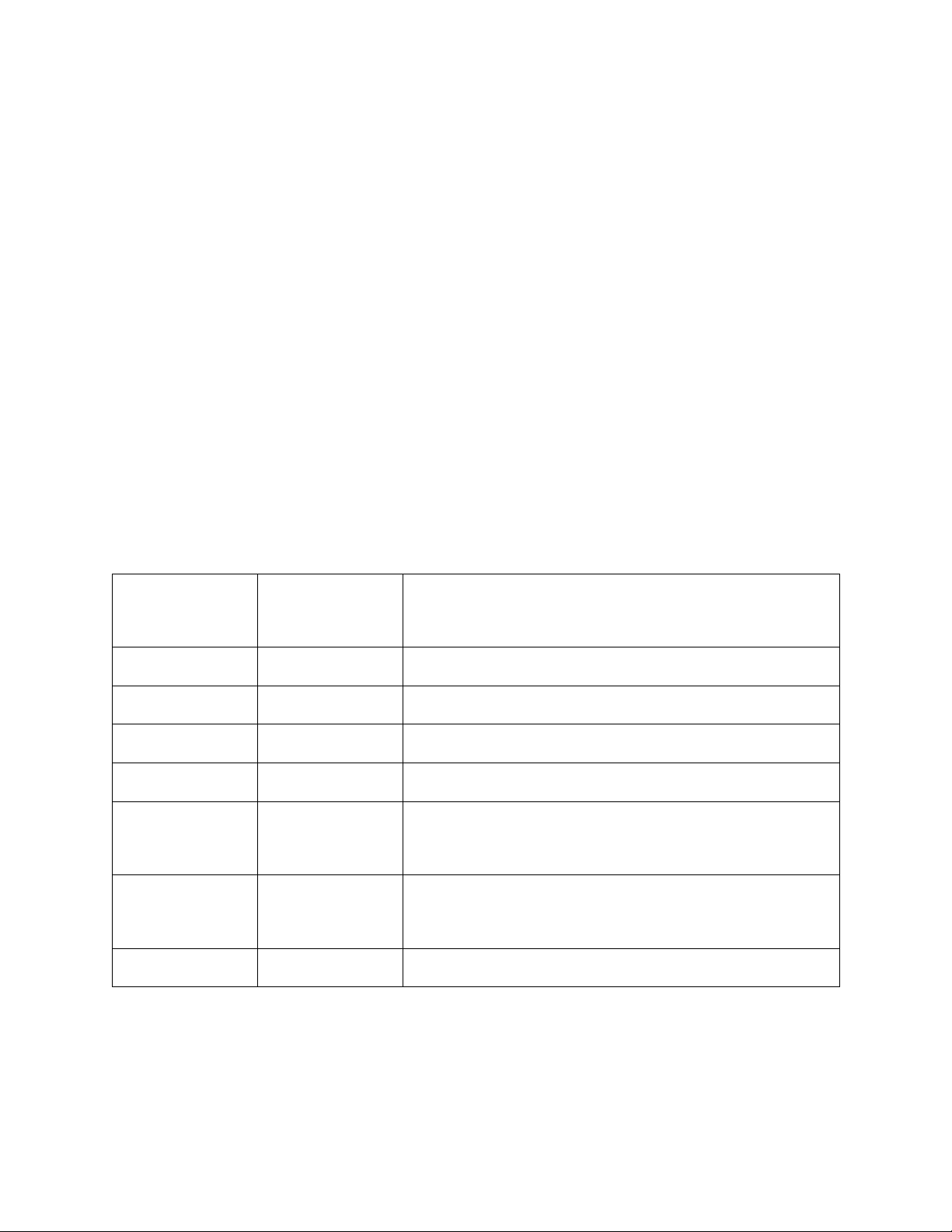

compute unit in Colab. The total compute unit through this project is about 180. 3.2 Project Schedule

The schedule follows the initial objectives and gives an overview of the timeline for each task.

Table 3: Project schedule

| Start time | End time | Task |
| --- | --- | --- |
| 02/10/2023 | 28/10/2023 | Choosing the topic and submitting the abstract |
| 02/10/2023 | 15/11/2023 | Theoretical research on related topics |
| 15/11/2023 | 23/11/2023 | Preparing data |
| 23/11/2023 | 15/12/2023 | Training the GAN model |
| 15/12/2023 | 28/12/2023 | Applying the pretrained GAN model to generate fake images and video |
| 28/12/2023 | 6/1/2024 | Developing a speech recognition module and building a function to display the emotion video |
| 6/1/2024 | 02/2024 | Writing the report and developing a web application |

3.3 Resources planning

The people involved in this senior project are listed below.

1. Mr. Pham Huynh Duc

• Role: Implementer

• Task: Develop a deepfake application that generates fake images controlled by voice.

2. Dr. Nguyen Ngoc Truong Minh

• Role: Advisor

• Task: Give advice on the topic and ideas for each project task, and give feedback on the performance of the project and the report.

CHAPTER IV LITERATURE REVIEW

This chapter reviews the related work whose material I applied in developing my project. Our project draws inspiration from four distinct research studies. The first is titled “Facial Recognition and Attendance System using dlib and face_recognition libraries” [3], which was instrumental in the detection of faces within digital images. This research provided insights and methods that have been integrated into the senior project for accurate and efficient facial recognition. The second is “Generative Adversarial Networks: Introduction and Outlook” [4], which helped us delve into deep learning for generative AI. Building upon this foundation, the third, “GANimation: Anatomically-aware Facial Animation from a Single Image” [5], shaped the anatomically-aware facial animation aspects of our project and contributed significantly to the development of continuous facial expressions. The fourth, “Voice Assistance Based News Web Application by using AI Tools” [6], provided the guidelines for building a web application that can interact with users in real time.