Preview text:

Introduction

We are living in the era of science and technology, where everything - especially artificial

intelligence - is developing rapidly. Human civilization is also advancing, leading to an

increasing demand for human life, education, work, and other aspects. In addition, the 25%

annual increase in the number of people participating in the field of science and technology

engineering has rapidly increased the number of scientists; as a result, the amount of scientific

literature and research products has increased significantly. All of this has created a huge and

constantly growing amount of information, leading to an information explosion.

To manage the ever-increasing and complex data, software and digital products have been

developed. And ElastichSearch - a powerful search application - has been chosen by us as the

topic of our report, as a contribution to expanding access to knowledge to serve the urgency of the above problem. Acknowledgments

Thank you to Mr. Vu Tien Dung for providing us with the basic knowledge, foundation,

guidance on how to conduct the report, and directing our scientific thinking and work. All of

your teachings are important knowledge that helps us complete this essay.

Thanks to the whole group for always trying hard and being responsible for the work.

Due to the limitations of our knowledge and theoretical abilities, there are still many

shortcomings and limitations in our work. We sincerely hope that you can provide guidance

and contribute to making this report more complete. Thank you very much! Bigdata

1. The concept of Big Data

Big Data is one of the terms commonly used to represent a collection of large and complex

data that traditional processing tools and applications cannot manage, process and collect.

These large data sets can often include structured data, unstructured data, and semi-structured

data. So each set will be a little different.

Based on reality, large amounts of data and large projects will be in the exabyte range. Big

Data is highly appreciated for possessing the following 3 characteristics:

The data blocks are extremely large.

Possessing many other diverse types of data.

There is a velocity at which data needs to be processed and analyzed.

This data will be created into larger data warehouses and can come from sources including:

websites, social media, desktop applications, device applications. , scientific and technical

experiments and even in other internet-connected network devices. 2

2. The origin of Big Data

According to many users, Big Data is considered one of the terms that started in the 1960s

and 1970s. This was the time when the world of data only started from the first data centers to

combine with it itself. is the development of SQL databases.

In 1984, the DBC 1012 parallel data processing system was born by Teradata Corporation.

This is one of the first systems capable of analyzing and storing 1 terabyte of data. By 2017,

there were dozens of databases located on the Teradata system with high capacity resources of up to petabytes.

Among them, the largest amount of data has exceeded the threshold of 50 petabytes. In 2005,

when people began to realize the number of users created through Youtube, Facebook and

other online services was limitless. same big.

During this time, NoSQL is also increasingly used and supports the development of

frameworks because it is necessary to promote the development of Big Data. According to

users, these frameworks support Big Data, making it easier to store and operate.

At present, the volume of Big Data is gradually increasing more rapidly, so users are

gradually creating an extremely large amount of data. However, this data is not only for

humans but is also created by machines. In addition, the advent of IoT with many other

devices makes it easier for users to use as well as improves product performance.

3. Characteristics of Big Data

Here are some outstanding features of Big Data: 1. Volume:

Combined with big data to process low-fat and unstructured data. This data is of unknown

value such as: providing Twitter data, performing clicks on websites or using applications for mobile devices.

For some other organizations, this is considered tens of terabytes of data or hundreds of petabytes. 2. Velocity:

This is considered how fast the data source can receive and can be acted upon. According

to experts, the highest speed of the data source is usually directly to memory compared to

writing to disk. Some other smart products feature support for the internet or some real-

time operations and are more closely suited to the requirements for evaluation and other real-time operations. 3. Variety:

It possesses categories that can refer to more than other available data types. Traditional

data types are different and often have a more consistent and compact structure in some

databases of other types of technology. These types of data are unstructured or more

commonly semi-structured to require additional processing to be able to derive meaning

from other supporting metadata.

These large data warehouses are created from data and this data can come from a number of

sources such as applications right on mobile devices, some applications for desktop

computers, social networks. , website,... There are also scientific experiments, some other

sensors and other devices inside the internet (IoT). 3

This Big Data, if accompanied with relevant components, will allow organizations to put data

into practical use and solve business-related problems. Problems that Big Data can solve include:

Perform analysis as well as apply it to other data.

IT infrastructure to support Big Data.

The technologies needed for Big Data projects include other related skill sets.

Real cases related to Big Data.

When performing data analysis, the value brought from organizations is extremely large and

if not analyzed, it is only data of limited use in the business field. When analyzing big data,

businesses will gain a number of benefits related to customer service.

From there, it brings more efficiency to the business as well as increases competitiveness and revenue for the company.

4. The role of big data in business

Targeting customers correctly: Big Data's data is collected from many different sources,

including social networks.. It is one of the channels frequently used by users. Therefore, Big

Data analysis businesses can understand customer behavior, preferences, and needs while

classifying and selecting the right customers suitable for the business's products and services.

Security prevention and risk mitigation: Big Data is used by businesses as a tool to probe,

prevent and detect threats, risks, theft of confidential information, and system intrusions.

Price optimization: Pricing any product is an important thing as well as a challenge for

businesses because the company needs to carefully research customer needs and price levels.

price of that product from competitors.

Quantify and optimize personal performance: Due to the emergence of smart mobile

devices such as laptops, tablets, or smartphones, collecting information and personal data has

become easier than ever. run out of. Collecting personal data from users will help businesses

have a clear view of the trends and needs of each customer. This will help businesses map out

future development directions and strategies

Capture financial transactions: Financial interfaces on websites or e-commerce apps are

increasing due to the strong development of e-commerce worldwide. Therefore, Big Data

algorithms are used by businesses to suggest and make transaction decisions for customers.

V. Operational process of Big Data

1. Building a Big Data strategy

Big data strategy is considered a plan designed to help you monitor and improve data

collection, storage, management, sharing and usage for your business. When developing a Big

Data strategy, it is important to consider the current and future goals of businesses.

2. Identify Big data sources

Data from social networks: Big Data in the form of images, videos, audio and text will be

very useful for marketing and sales functions. This data is often unstructured or semi- structured.

Published available data: Is information and data that has been publicly announced

Live streaming data: Data from the Internet of Things and connected devices transmits into

information technology systems from smart devices.

Other: Some data sources come from other sources

3. Access, manage and store Big Data 4

Modern computer systems provide the speed, power and flexibility needed to quickly access

data. Along with reliable access, companies also need methods for data integration, quality

assurance, and the ability to manage and store data for analysis. 4. Conduct data analysis

With high-performance technologies such as in-memory analytics or grid computing,

businesses will choose to use all of their big data for analysis. Another approach is to

predetermine what data is relevant before analysis. Big data analytics is how companies gain value and insights from data.

5. Make decisions based on data

Reliable, well-managed data leads to informed judgments and decisions. To stay competitive,

businesses need to grasp the full value of big data and data-driven operations to make

decisions based on well-proven data. 5 6

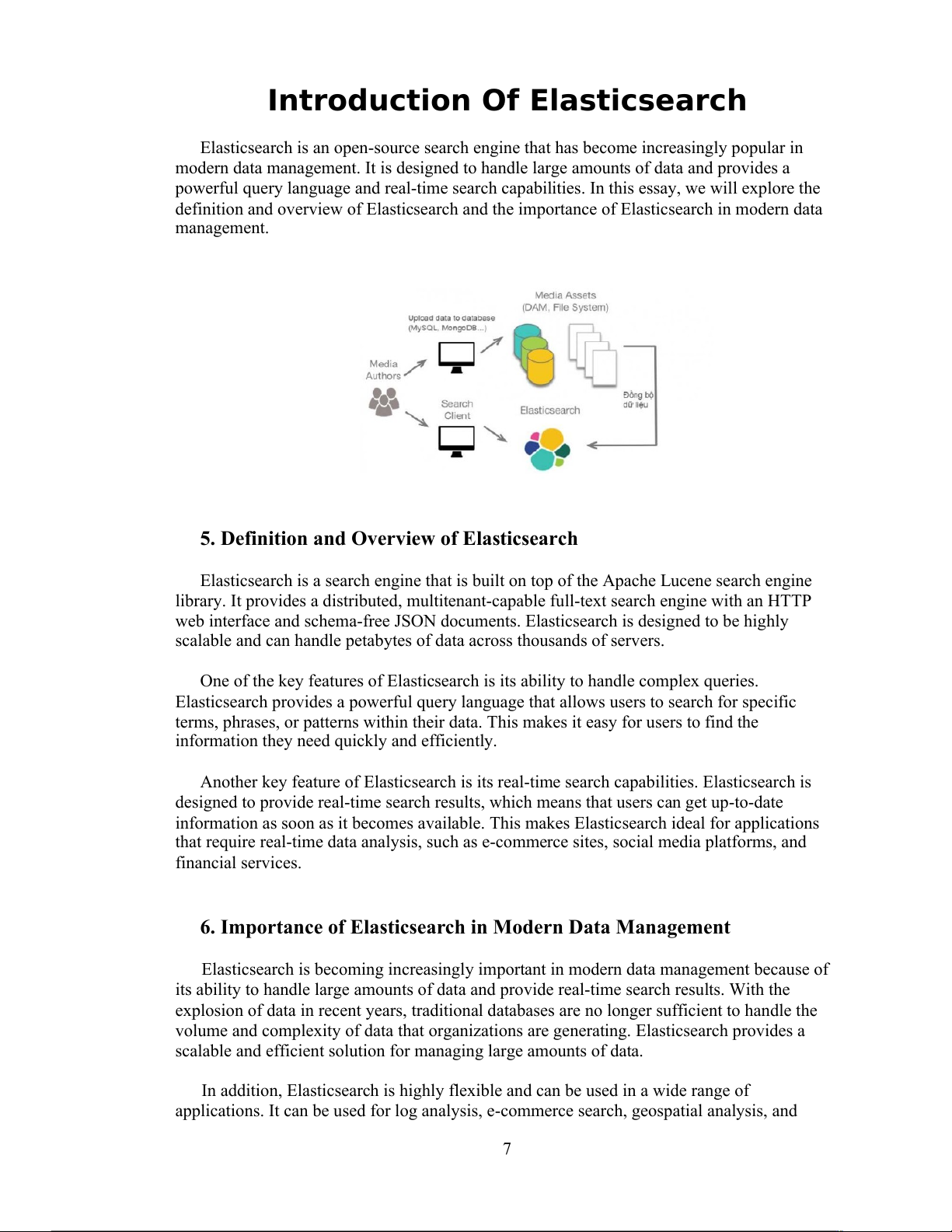

Introduction Of Elasticsearch

Elasticsearch is an open-source search engine that has become increasingly popular in

modern data management. It is designed to handle large amounts of data and provides a

powerful query language and real-time search capabilities. In this essay, we will explore the

definition and overview of Elasticsearch and the importance of Elasticsearch in modern data management.

5. Definition and Overview of Elasticsearch

Elasticsearch is a search engine that is built on top of the Apache Lucene search engine

library. It provides a distributed, multitenant-capable full-text search engine with an HTTP

web interface and schema-free JSON documents. Elasticsearch is designed to be highly

scalable and can handle petabytes of data across thousands of servers.

One of the key features of Elasticsearch is its ability to handle complex queries.

Elasticsearch provides a powerful query language that allows users to search for specific

terms, phrases, or patterns within their data. This makes it easy for users to find the

information they need quickly and efficiently.

Another key feature of Elasticsearch is its real-time search capabilities. Elasticsearch is

designed to provide real-time search results, which means that users can get up-to-date

information as soon as it becomes available. This makes Elasticsearch ideal for applications

that require real-time data analysis, such as e-commerce sites, social media platforms, and financial services.

6. Importance of Elasticsearch in Modern Data Management

Elasticsearch is becoming increasingly important in modern data management because of

its ability to handle large amounts of data and provide real-time search results. With the

explosion of data in recent years, traditional databases are no longer sufficient to handle the

volume and complexity of data that organizations are generating. Elasticsearch provides a

scalable and efficient solution for managing large amounts of data.

In addition, Elasticsearch is highly flexible and can be used in a wide range of

applications. It can be used for log analysis, e-commerce search, geospatial analysis, and 7

more. This versatility makes Elasticsearch an attractive option for organizations that need a

search engine that can handle a variety of use cases. 7. Conclusion

In conclusion, Elasticsearch is an open-source search engine that is designed to handle

large amounts of data. It provides a powerful query language and real-time search capabilities,

making it ideal for modern data management. Its scalability, efficiency, and flexibility make it

an attractive option for organizations that need to manage large amounts of data across

multiple servers. As data continues to grow in volume and complexity, Elasticsearch is likely

to become even more important in modern data management.

Key features of elasticsearch

Several outstanding features of Elasticsearch mark its strong influence on data processing:

1. Full-text search capabilities

- Full-text search is a technique for searching information in a database, allowing you to

search for words or phrases throughout the content of a document. This technique makes

finding information easier and faster. Simply put, full text search (FTS for short) is the most

natural way to search for information, just like Google, we just need to type in keywords and press "enter" to get results.

- Specifically, Elasticsearch supports searching and evaluating the relevance of text based on

content, using the TF-IDF (Term Frequency - Inverse Document Frequency) technique to

evaluate the importance of words in the text. In addition, the key factor that determines the

difference between Full-text search technique (in Elasticsearch in particular and other search

engines in general) and other common search techniques is "Inverted Index".

2. Distributed architecture and scalability

- Elasticsearch uses a distributed architecture that allows large volumes of data to be stored,

queried, and processed across multiple nodes in a cluster. At the same time, data is indexed

and stored in shards, distributed across nodes to simplify maintenance, improve scalability and fault tolerance.

- Scalability: As the database grows, it becomes difficult to search. But Elasticsearch scales

as your database grows, so search speeds don't slow down. Elasticsearch allows for high-scale

expansions up to thousands of servers and holds Petabytes (PB) of data. Users do not need to

manage the complexity of distributed design because features are implemented automatically.

3. Near real-time data processing

- Elasticsearch has near real-time search, which means that document changes are not visible

to search immediately, but will become visible within one second. Compared to other

features, near real-time search capability is undoubtedly one of the most important features in Elasticsearch

- The critical point that determines near real-time data processing feature is all shards is

refreshed automatically once every second by . Besides, ElasticSearch has fault tolerance and

high resiliency. Its clusters have master and replica shards to provide failover in case of node 8

going down. When a primary shard goes down, the replica takes its place. When a node leaves

the cluster for whatever reason, intentional or otherwise, the master node reacts by replacing

the node with a replica and rebalancing the shards. These actions are intended to protect the

cluster against data loss by ensuring that every shard is fully replicated as soon as possible.

In short, if a shard fails, its replica will be used instead. Therefore, ElasticSearch is very

effective when working with big data, with hundreds or even thousands of records in near realtime.

**However, it is not always necessary to use Elasticsearch. If your project doesn't require fast

search, complex data analysis, or big data management, there may be other solutions that are

more suitable. Tool selection depends on the specific requirements of the project and available resources.

4. Support for various data types and formats

- Elasticsearch supports a variety of field data types, such as:

Textual: Textual data, such as title, content, summary, comments, reviews, etc.

Digital: Numerical data, such as price, score, time, etc.

Geospatial: Geographic data, such as coordinates, addresses, distances, etc.

Structured: Structured data, such as tables, charts, lists, etc.

Unstructured: Unstructured data, such as images, videos, sounds, etc.

- Elasticsearch operates as a cloud server, response format is JSON and is written in Java so it

can operate on many different platforms, and is interactive and supports many programming

languages such as Java, JavaScript, Node.js , Go, .NET (C#), PHP, Perl, Python, Ruby.

(Therefore, the security is not high)

- Elasticsearch uses JSON to process requests and responses, so you need to convert other

data to JSON format before storing or querying. This is also a disadvantage of ES compared

to other search engines also built on Lucene such as Apache Solr (supports JSON, CSV, XML).

Architecture of Elasticsearch

Elasticsearch is a distributed search and analytics engine that is widely used for indexing,

searching, and analyzing large volumes of data. Its architecture is designed to be highly

scalable and fault-tolerant. Here's an overview of the key components of Elasticsearch's architecture:

1. Nodes, Clusters, and Shards: 1.1. Node

- A node is a fundamental unit within an Elasticsearch cluster.

- It can be a physical or virtual machine that runs Elasticsearch.

- Each node can have different roles, such as data node, master node, or coordinating node, depending on its configuration

- Data Node: Stores and manages data, executing data-related operations like indexing and searching

- Master Node: Manages cluster-wide operations like creating or deleting indices, shard

allocation, and maintaining the cluster state 9

- Coordinating Node: Routes client requests to the appropriate data nodes, providing a more

efficient way to interact with the cluster

Certainly, let's explore the concept of nodes in Elasticsearch with a concrete example:

Example of Nodes in Elasticsearch:

Suppose you are setting up an Elasticsearch cluster to store and search log data for a web

application. In this example, you have three nodes in your Elasticsearch cluster. a. Node 1: - Role: Data Node

- Hardware: Physical server with high storage capacity

- Responsibilities: Stores and manages the log data, handles indexing and search operations.

- Configuration: It is configured as a dedicated data node to ensure optimal storage and

search performance for log data. b. Node 2:

- Role: Master-eligible Node and Data Node

- Hardware: Virtual machine with moderate resources

- Responsibilities: Assists with cluster management tasks such as index creation and shard

allocation. It also stores and manages log data like Node 1.

- Configuration: This node is configured to be both a data node and a master-eligible node

to help manage the cluster while also participating in data storage. c. Node 3: - Role: Coordinating Node

- Hardware: Virtual machine with moderate resources

- Responsibilities: Primarily handles incoming client requests, routing them to the

appropriate data nodes. It doesn't store data but improves the efficiency of search operations.

- Configuration: It is configured as a coordinating node to reduce the load on data nodes and

facilitate efficient query routing.

All three nodes share a common cluster name, for instance, "web-logs-cluster," which means

they form a single Elasticsearch cluster. This cluster enables you to distribute and search your

web application log data efficiently.

Data nodes (Node 1 and Node 2) handle the actual storage and retrieval of log data, while the

coordinating node (Node 3) ensures that client requests are efficiently routed to the

appropriate data nodes. In the case of any cluster management tasks, such as creating new

indices or managing shard allocation, the master-eligible node (Node 2) assists with these operations.

This example illustrates how Elasticsearch's distributed architecture uses nodes with different

roles to work together in a cluster, allowing for scalability, fault tolerance, and efficient data

management and search capabilities. 1.2. Cluster:

In Elasticsearch, a cluster is a fundamental component of its architecture. It represents a

group of interconnected nodes that work together to store and manage data, ensuring high 10

availability, scalability, and fault tolerance. Here's a detailed explanation of a cluster in Elasticsearch: a. Cluster Name:

- Each Elasticsearch cluster is identified by a unique cluster name, which serves as a human- readable identifier.

- All nodes within the same Elasticsearch cluster must share the same cluster name. b. Interconnected Nodes:

- A cluster consists of multiple nodes, which are individual instances of Elasticsearch

running on physical or virtual machines.

- These nodes communicate and coordinate with each other to ensure the consistency of data and cluster state. c. Shared Configuration:

- Nodes within the same cluster share a common configuration, including cluster settings,

index settings, and data mappings.

- This shared configuration ensures that all nodes are aware of the same data structures and rules. d.Data Distribution:

- When you index data into Elasticsearch, it is divided into smaller units called shards.

These shards are distributed across the nodes in the cluster.

- Data distribution ensures that data is stored in a distributed and redundant manner,

enhancing fault tolerance and query performance. e. Cluster State:

- The cluster maintains a cluster state, which records information about the cluster's health,

shard allocation, and node statuses.

- The cluster state is updated dynamically as nodes join or leave the cluster, and as data is indexed, searched, or deleted. f. Master Node:

- Within a cluster, one node is elected as the master node. The master node is responsible

for managing cluster-wide operations, such as creating or deleting indices, managing shard

allocation, and maintaining the cluster state.

- If the master node fails, another node is automatically elected as the new master to ensure cluster continuity. g.Fault Tolerance:

- Elasticsearch clusters are designed for high availability and fault tolerance. Data is

replicated by creating replica shards for each primary shard, allowing for data recovery in the event of node failures.

- Data distribution across nodes further enhances fault tolerance. h. Scalability:

- Elasticsearch clusters are scalable and can handle growing data volumes and query loads.

You can add more nodes to a cluster to increase its capacity and performance.

- Elasticsearch's distributed nature enables it to scale horizontally as new nodes are added.

i. Search and Query Distribution: 11

- When you send a query to a cluster, coordinating nodes route the query to the relevant data

nodes, distributing the search workload across multiple nodes for improved query performance.

Certainly, let's provide a concrete example of a cluster in Elasticsearch to illustrate how it works:

Example of a Cluster in Elasticsearch:

Imagine you are managing an e-commerce website, and you decide to implement

Elasticsearch to power the search functionality for your online store. You set up an

Elasticsearch cluster to handle the product data, and it consists of multiple nodes working together.

Cluster Name: Your Elasticsearch cluster is named "ecommerce-products-cluster." All

nodes in the cluster must use this common cluster name. 1.2.1. Nodes: a. Node 1:

- Role: Data Node and Master-eligible Node

- Hardware: Physical server with high storage capacity

- Responsibilities: Stores product data, manages indexing and searching, and assists with cluster management tasks.

- Configuration: This node is both a data node and a master-eligible node to efficiently

manage both data and cluster operations. b. Node 2: - Role: Data Node

- Hardware: Virtual machine with moderate resources

- Responsibilities: Stores product data and handles indexing and searching operations.

- Configuration: This node is focused on data storage and retrieval. c. Node 3: - Role: Coordinating Node

- Hardware: Virtual machine with moderate resources

- Responsibilities: Serves as a coordinating node to efficiently route client search requests to the appropriate data nodes.

- Configuration: It doesn't store data but optimizes query routing. Data Distribution:

- Product data, including product descriptions, prices, and availability, is divided into smaller

units called shards. You have defined an index called "products" with 5 primary shards and 1

replica for each primary shard. This means you have 5 primary shards and 5 replica shards for the "products" index.

- The shards are distributed across the data nodes, ensuring data redundancy and improving query performance. Cluster State:

- The cluster maintains a cluster state that tracks the health of the cluster, shard allocation, and

node statuses. It continuously updates as nodes join or leave the cluster, and as data is indexed or queried. 12 Master Node:

- Among the nodes, one of them is automatically elected as the master node. In this example,

Node 1 is elected as the master node. It is responsible for managing cluster-wide operations,

such as creating new indices and managing shard allocation. Fault Tolerance:

- Data is replicated using replica shards, which provide redundancy. If a data node fails, the

replica shards on other nodes can be promoted to primary, ensuring data availability. Scalability:

- As your e-commerce website grows, you can easily scale your Elasticsearch cluster by

adding more nodes to handle increased data and search loads. Search and Query Distribution:

- When customers search for products on your website, the coordinating node (Node 3)

efficiently routes their queries to the relevant data nodes (Node 1 and Node 2), ensuring quick and accurate search results.

This example demonstrates how Elasticsearch clusters are used to efficiently manage, store,

and search product data for your e-commerce website. Elasticsearch's distributed architecture,

with its cluster, nodes, and shards, ensures scalability, fault tolerance, and high-performance search capabilities.

In summary, an Elasticsearch cluster is a collection of nodes that work together to store,

manage, and search data. Clusters provide high availability, scalability, and fault tolerance,

making Elasticsearch a powerful tool for handling large volumes of data and delivering

efficient search and analytics capabilities. The cluster ensures that data is distributed across

nodes, and the master node oversees cluster-wide operations, while the data nodes handle the storage and retrieval of data. 1.3. Shard:

In Elasticsearch, a shard is a fundamental unit of data storage and distribution. It plays a

central role in the distributed nature of the system, enabling horizontal scalability, data

redundancy, and parallel processing. Here's a detailed explanation of a shard in Elasticsearch: a. Primary Shard:

- When you create an index in Elasticsearch, you specify the number of primary shards.

These primary shards are the main units for storing data.

- Each document you index is initially stored in a primary shard. The number of primary

shards for an index is set when the index is created and cannot be changed later without reindexing the data. b. Replica Shard:

- For each primary shard, Elasticsearch can create one or more replica shards.

- Replica shards serve as copies of the primary data, providing data redundancy and

enhancing query performance. They act as failover instances in case of primary shard failures.

- The number of replica shards can be adjusted after index creation, allowing you to control

the level of redundancy and query performance. 13 c. Data Distribution:

- Shards are distributed across the nodes in an Elasticsearch cluster. This distribution

ensures that data is spread evenly and efficiently across the cluster, allowing for horizontal

scalability and improved performance. d. Parallelism:

- The presence of multiple shards enables parallel processing of data and queries. When you

search for data, queries can be distributed across all available shards, leading to faster search responses. e. Data Resilience:

- The combination of primary and replica shards provides data resilience. If a node or a

primary shard fails, Elasticsearch can promote a replica shard to primary, ensuring that data

remains available and reliable. f. Query Performance:

- Replica shards can be used to balance query loads. Elasticsearch can distribute queries to

both primary and replica shards, improving the query performance by parallelizing the search process. g. Index Creation:

- When you create an index in Elasticsearch, you specify the number of primary shards and

replica shards as part of the index settings. For example, you might create an index with 5

primary shards and 1 replica for each primary shard. h. Shard Allocation:

- Elasticsearch's shard allocation algorithm takes into account factors like shard size, node

health, and data distribution to decide where to place shards for optimal cluster performance and data balance.

In summary, shards in Elasticsearch are the building blocks for storing and distributing data

across the nodes in a cluster. They enable data scalability, redundancy, and parallelism,

ensuring that Elasticsearch can efficiently manage and search large volumes of data while

providing high availability and fault tolerance. The careful allocation and configuration of

primary and replica shards are essential aspects of optimizing Elasticsearch's performance and resilience. Example 1:

Certainly, let's provide a concrete example of how shards work in Elasticsearch:

Example of Shards in Elasticsearch:

Imagine you have a blogging platform where users can write and publish articles. You've decided to

use Elasticsearch to power the search and retrieval of these articles, and you've created an index

named "blog-articles" to store the articles. In this index, you've configured the number of primary

shards and replica shards to illustrate how shards function: 1. Primary Shards:

- You decide to create the "blog-articles" index with 4 primary shards.

- When an article is published, Elasticsearch's indexing process stores it in one of these primary

shards. Each primary shard is responsible for a subset of the data. 2. Replica Shards:

- For each primary shard, you've configured 1 replica shard. 14

- As a result, you have an equal number of replica shards (4) that mirror the data stored in the primary shards. 3. Data Distribution:

- Your Elasticsearch cluster consists of several nodes, and the primary and replica shards are

distributed across these nodes for efficient data storage and redundancy. 4. Indexing an Article:

- When a new article is published on your platform, Elasticsearch's indexing process decides which primary shard to store it in.

- Let's say a new article is stored in the primary shard 2. 5. Querying for Articles:

- When a user searches for articles, their query is distributed to all primary shards in parallel.

- Each primary shard independently searches its portion of the data and returns results. 6. Data Resilience:

- Suppose a node in your cluster experiences a failure, causing the primary shard 2 to become temporarily unavailable.

- Elasticsearch's shard allocation mechanism detects this and promotes one of the replica shards

(e.g., replica shard 2) to be the new primary shard for data continuity. 7. Query Performance:

- Queries from users are also distributed to replica shards.

- This parallelism enhances query performance, as both primary and replica shards participate in serving search requests. 8. Scaling:

- As your blogging platform grows, you can scale your Elasticsearch cluster by adding more nodes.

- Elasticsearch's shard distribution ensures that new data is spread across the new nodes, supporting your platform's scalability.

In this example, the "blog-articles" index is divided into primary and replica shards, allowing

Elasticsearch to distribute data efficiently, achieve fault tolerance, and enhance search performance.

The distribution of shards across the nodes in the cluster ensures that your blogging platform can

handle the growing volume of articles while providing a robust and responsive search experience for users. Example 2:

Certainly, here's an example of shards in Elasticsearch with code snippets illustrating how to

create an index with a specified number of primary and replica shards in Elasticsearch using

the Elasticsearch REST API. In this example, we'll use the `curl` command-line tool to send

HTTP requests to an Elasticsearch cluster. ```bash

# Create an index named "blog-articles" with 4 primary shards and 1 replica per primary shard

curl -X PUT "http://localhost:9200/blog-articles" -H "Content-Type: application/json" -d '{ "settings": {

"number_of_shards": 4, # Number of primary shards

"number_of_replicas": 1 # Number of replica shards } }' ``` In this code example: 15

- We use the `curl` command to make an HTTP PUT request to create an index named "blog- articles" in Elasticsearch.

- Within the request body, we specify the settings for the index, including the number of

primary shards and the number of replica shards. In this case, we have set 4 primary shards

and 1 replica per primary shard.

Once you execute this `curl` command, Elasticsearch will create the "blog-articles" index with

the specified shard settings. You can then proceed to index and search documents within this

index. The primary and replica shards for the index will be automatically allocated across the

nodes in your Elasticsearch cluster.

1.4. Master and Data Nodes: 1.4.1. Master Node:

In Elasticsearch, the "master node" is a critical component of the cluster responsible for

managing cluster-wide operations and maintaining the overall state of the Elasticsearch

cluster. Here's a detailed explanation of the master node in Elasticsearch: Role of the Master Node: a. Cluster Coordination:

- The master node is responsible for cluster coordination and management. It ensures that all

nodes within the cluster are working together cohesively. b. Index and Shard Management:

- It handles the creation, deletion, and management of indices within the cluster. When you

create a new index, the master node decides how to distribute its primary and replica shards across data nodes. c. Shard Allocation:

- The master node oversees shard allocation, determining which data nodes will store

primary and replica shards. This includes optimizing data distribution for load balancing and fault tolerance. d. Cluster State:

- The master node maintains the cluster state, which includes information about the nodes in

the cluster, their roles, the indices, shard allocation, and other critical cluster metadata. e. Election of New Master:

- In the event of a master node failure, the remaining master-eligible nodes initiate a leader

election process to choose a new master node. This ensures that cluster operations continue smoothly. Master-eligible Nodes: a. Master-eligible Nodes:

- Not all nodes in an Elasticsearch cluster are master nodes. Instead, some nodes are

designated as "master-eligible" nodes, meaning they have the potential to be elected as the master node. 16

b. Configuring Master-eligibility:

- In Elasticsearch, you can configure whether a node is master-eligible by adjusting its

configuration in the `elasticsearch.yml` file

c. Odd Number of Master-eligible Nodes:

- It's recommended to have an odd number of master-eligible nodes (usually 3 or 5) to avoid

split-brain scenarios and ensure a stable master election process.

Cluster Health and Stability: a. Cluster Monitoring:

- The master node plays a crucial role in monitoring the health and status of the cluster,

including the availability of data nodes, indices, and shards. b. Cluster State Recovery:

- In the case of failures or issues in the cluster, the master node is responsible for taking

corrective actions, such as reassigning shards or promoting replica shards to primaries to maintain cluster health.

In summary, the master node in Elasticsearch is a crucial component responsible for

overseeing cluster-wide operations, maintaining cluster health, and ensuring the proper

coordination and functioning of the Elasticsearch cluster. It plays a vital role in maintaining

the stability and reliability of the cluster's operations. Proper configuration and monitoring of

master-eligible nodes are essential for cluster resilience and optimal performance. 1.4.2. Data Node:

In Elasticsearch, a "data node" is a type of node in the cluster that primarily stores and

manages data. Data nodes are responsible for holding and indexing the actual data, and they

play a crucial role in the distributed storage and retrieval of information. Here's a detailed

explanation of data nodes in Elasticsearch: Role of Data Nodes: a. Data Storage:

- Data nodes are responsible for storing the actual data, including the documents and their

associated inverted indexes, which enable fast search and retrieval. b. Indexing Documents:

- When you index new documents into Elasticsearch, data nodes handle the storage and

indexing process. These documents are divided into smaller units called shards, and the data

nodes determine how to distribute these shards across the cluster. c. Search Operations:

- Data nodes participate in query and search operations. They perform the actual search

across the indexed data and return the relevant results to the coordinating node (or directly to

the client if there is no coordinating node involved). d. Data Retrieval:

- When a search request is issued, data nodes retrieve and process the data from their local

storage, contributing to the speed and efficiency of search operations.

Configuration of Data Nodes: 17 a. Node Configuration:

- In Elasticsearch, you can configure a node to serve as a data node by adjusting its settings

in the `elasticsearch.yml` configuration file. A typical configuration for a data node might look like this: ```yaml

node.master: false # This node is not a master node

node.data: true # This node is a data node ```

- The `node.master` and `node.data` settings determine whether a node can be elected as a

master node or function as a data node. b. Dedicated Data Nodes:

- In larger Elasticsearch clusters, it's common to have dedicated data nodes that exclusively

handle data storage and retrieval. These nodes do not participate in cluster-wide management tasks.

Data Distribution and Shards: 1. Shard Allocation:

- Data nodes are responsible for holding primary shards and their corresponding replica

shards. Elasticsearch's shard allocation process ensures that these shards are distributed across

data nodes for fault tolerance and load balancing. 2. Data Redundancy:

- By having multiple copies of data (primary and replica shards), data nodes provide data

redundancy. If a data node fails, replica shards can be promoted to primary, ensuring data availability.

Scalability and Performance: 1. Horizontal Scalability:

- Elasticsearch clusters can be scaled horizontally by adding more data nodes. This enables

you to store and retrieve data from larger datasets and handle higher query loads. 2. Parallel Processing:

- Data nodes enable parallel processing of search queries, contributing to faster search

responses and improved performance.

In summary, data nodes in Elasticsearch are essential components responsible for storing,

indexing, and retrieving data. They play a central role in the distributed architecture of

Elasticsearch, enabling horizontal scalability, data redundancy, and efficient search and

retrieval operations. Properly configuring and managing data nodes is crucial for optimizing

Elasticsearch cluster performance and reliability..

1.4.3. Data Nodes vs. Master Nodes 1. Dedicated Master Nodes:

- In larger Elasticsearch clusters, it's common to have dedicated master nodes that do not

store data but focus solely on cluster management and coordination. 18 2. Master-Data Nodes:

- In smaller clusters or single-node setups, it's possible to configure nodes to serve as both

master nodes and data nodes to simplify resource usage and management.

1.5. Indexing and Querying Process: 1.5.1. Indexing:

Indexing in Elasticsearch is the process of adding, updating, or removing documents

(data) within an Elasticsearch index. An index is a logical container for data, and it is

divided into shards for efficient storage and retrieval. Here's how the indexing process works in Elasticsearch: 1. Create an Index:

- Before you can index data, you need to create an Elasticsearch index to define the

structure and settings for the data you want to store. You specify the number of

primary shards and replica shards for the index. 2. Document:

- A document in Elasticsearch is the basic unit of information. It is usually

represented in JSON format and can contain various fields with data. 3. Document ID:

- Each document in an index is uniquely identified by a document ID. You can

provide your own custom document ID or let Elasticsearch automatically generate one. 4. Indexing a Document:

- When you want to store a document in Elasticsearch, you send an HTTP request to

the Elasticsearch cluster with the JSON document data. Here's a simplified example

using the `curl` command-line tool: ```bash

curl -X POST "http://localhost:9200/my-index/_doc/1" -H "Content-Type: application/json" -d '{ "field1": "value1", "field2": "value2" }' ```

- In this example, we are sending a JSON document to the "my-index" index with a custom document ID of "1." 5. Routing and Shards:

- Elasticsearch uses routing to determine which shard within the index will store the

document. This routing is usually based on the document ID or a routing key. 6. Shard Storage: 19

- The document is stored in the appropriate primary shard. If replication is

configured, a copy of the document is also stored in the replica shard for redundancy. 7. Data Distribution:

- Elasticsearch distributes documents across primary and replica shards, ensuring

even data distribution across the cluster. 8. Retrieval and Searching:

- After indexing, you can search for documents within the index using search

queries. Elasticsearch's powerful search capabilities allow you to retrieve documents based on various criteria. 9. Updates and Deletions:

- You can update existing documents or delete them by sending the appropriate

HTTP requests with the document ID. 10. Index Optimization:

- Elasticsearch periodically optimizes the index for efficient storage and retrieval.

This process includes merging segments and pruning deleted documents. 11. Bulk Indexing:

- For efficiency, you can index multiple documents in a single request using bulk

indexing. This reduces overhead and improves indexing performance. 12. Real-time Indexing:

- Elasticsearch provides near real-time indexing, which means that documents are

searchable shortly after they are indexed. However, there might be a slight delay in

some cases due to indexing and refresh operations.

Indexing is a fundamental operation in Elasticsearch, enabling you to store, search,

and retrieve data efficiently. The process is designed to be scalable, fault-tolerant, and

suitable for a wide range of use cases, from simple document storage to complex data

analysis and search applications. 1.5.2. Querying:

Querying in Elasticsearch involves searching for and retrieving documents from an

Elasticsearch index based on specific criteria or search queries. Elasticsearch provides a

powerful and flexible query system that allows you to perform full-text searches, structured

queries, aggregations, and more. Here's an overview of how querying works in Elasticsearch: a. Creating a Query:

- To perform a query, you construct a query request specifying the search criteria and

conditions you want to apply to your data. b. Query DSL:

- Elasticsearch uses a Query DSL (Domain Specific Language) that allows you to express

complex queries. The DSL includes a wide range of query types and filter options to meet your specific needs. c. Types of Queries:

- Elasticsearch offers various types of queries, including: 20

- Full-text Queries: These are used for searching full-text content within documents.

Common options include match, match_phrase, and multi_match queries.

- Term-Level Queries: Used for exact matching of terms within documents. Examples

include term, terms, and prefix queries.

- Compound Queries: These queries combine multiple subqueries to create complex

conditions. Examples include bool and dis_max queries.

- Geo Queries: Used for location-based searches.

- Joining Queries: Useful for searching documents with parent-child relationships. d. Executing the Query:

- You send the query as an HTTP request to the Elasticsearch cluster, specifying the index

or indices you want to search within. ```bash

curl -X GET "http://localhost:9200/my-index/_search" -H "Content-Type: application/json" -d '{ "query": { "match": { "field1": "value" } } }' ```

In this example, we're using a simple match query to search documents in the "my-index" index. e. Query Execution:

- Elasticsearch processes the query and retrieves matching documents based on the criteria you specified. f. Search Results:

- The search results include the documents that match the query, along with metadata like

the relevance score and the matched fields. g. Pagination and Sorting:

- You can use pagination and sorting options to control the order of results and navigate through large result sets. h. Aggregations:

- Elasticsearch allows you to perform aggregations to analyze and summarize the data in

your search results. You can calculate statistics, create histograms, and generate various insights. i. Filtering and Scoring:

- You can apply filters to your queries to narrow down results. Scoring mechanisms allow

you to control the relevance of documents based on specific criteria. k. Highlighting:

- Elasticsearch supports highlighting to display matching text fragments within documents,

making it easier for users to identify why a document matched a query. 21