
Microsoft Machine Learning & Data Science Summit

September 26 – 27 | Atlanta, GA BR008

Go Big (with Data Lake Architecture) or Go Home!

Omid Afnan

Session objectives and key takeaways

Objective:

Understand how the traditional data landscape is changing, what opportunities big data presents and what architectures allow you to maximize the benefits to your organization.

Key Takeaways:

• Data lake architectures can be additive to your data warehouse

• Azure Data Lake makes building big data environments easy

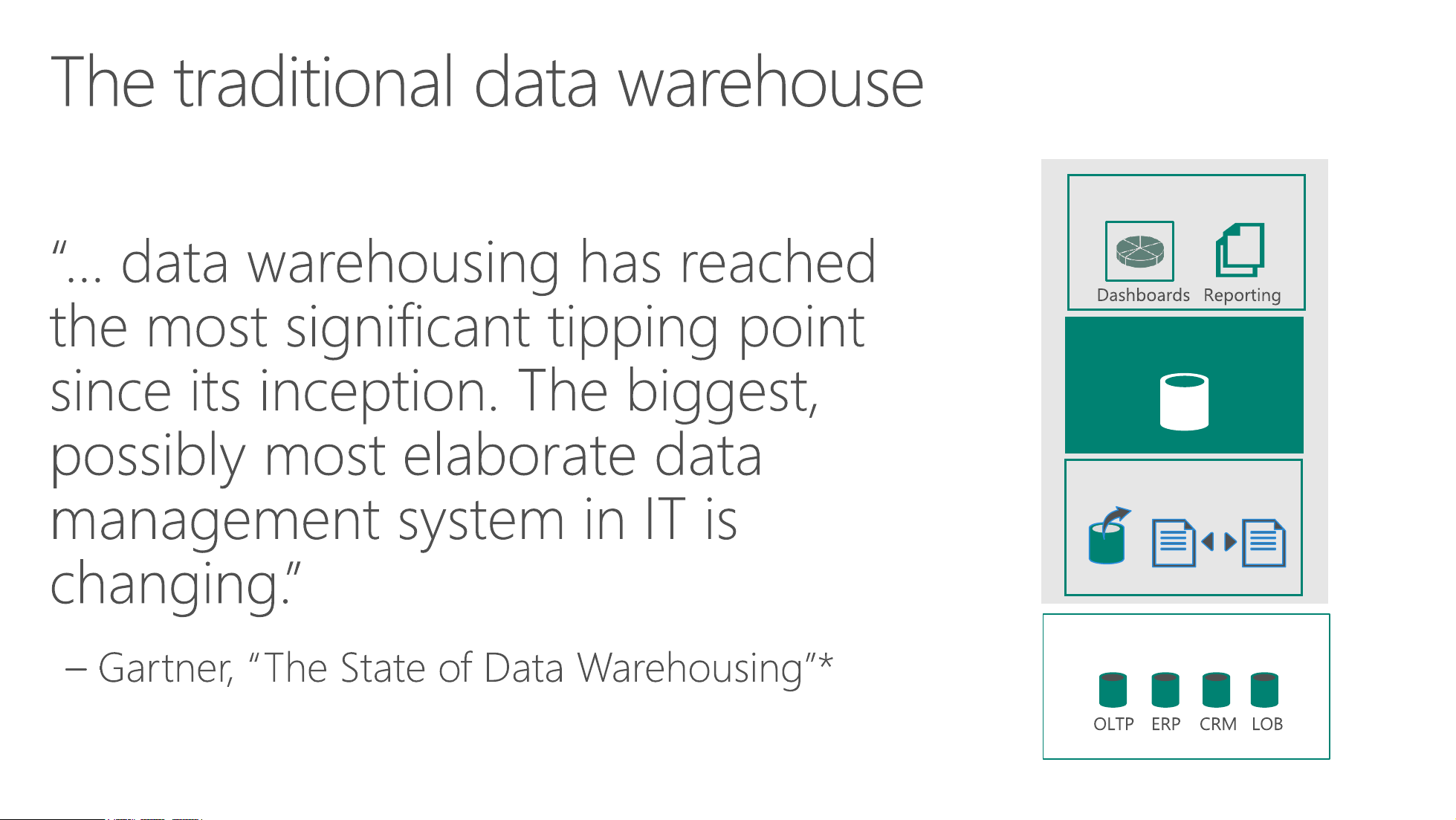

The traditional data warehouse

“… data warehousing has reached the most significant tipping point since its inception. The biggest, possibly most elaborate data management system in IT is changing.”

– Gartner, “The State of Data Warehousing”*

[Diagram: Data sources (OLTP, ERP, CRM, LOB) → ETL → Data warehouse → BI and analytics (Dashboards, Reporting)]

* Donald Feinberg, Mark Beyer, Merv Adrian, Roxane Edjlali (Gartner), The State of Data Warehousing in 2012 (Stamford, CT.: Gartner, 2012)

Big Data is driving transformative changes

“Big data is high-volume, high-velocity and/or high-variety information assets that demand cost-effective, innovative forms of information processing that enable enhanced insight, decision making, and process automation.”

– Gartner, Big Data Definition*

* Gartner, Big Data (Stamford, CT.: Gartner, 2016), URL: http://www.gartner.com/it-glossary/big-data/

Big Data is driving transformative changes

| | Traditional | Big Data |
|---|---|---|
| Data Characteristics | Relational (with highly modeled schema) | All Data (with schema agility) |
| Cost | Expensive (storage and compute capacity) | Commodity (storage and compute capacity) |
| Culture | Rear-view reporting (using relational algebra) | Intelligent action (using relational algebra AND ML, graph, streaming, image processing) |

Example: Culture of experimentation

Tangerine instantly adapts to customer feedback to offer customers what they want, when they want it

Scenario

• Lack of insight for targeted campaigns

• Inability to support data growth

Solution

Azure HDInsight (Hadoop-as-a-service) with the Analytics Platform System enables instant analysis of social sentiment and customer feedback across digital, face-to-face and phone interactions.

Result

• Reduced time to customer insight

• Ability to make changes to campaigns or adjust product rollouts based on real-time customer reactions

• Ability to offer incentives and new services to retain and grow its customer base

“I can see us…creating predictive, context-aware financial services applications that give information based on the time and where the customer is.”

Billy Lo, Head of Enterprise Architecture

Why Data Lakes?

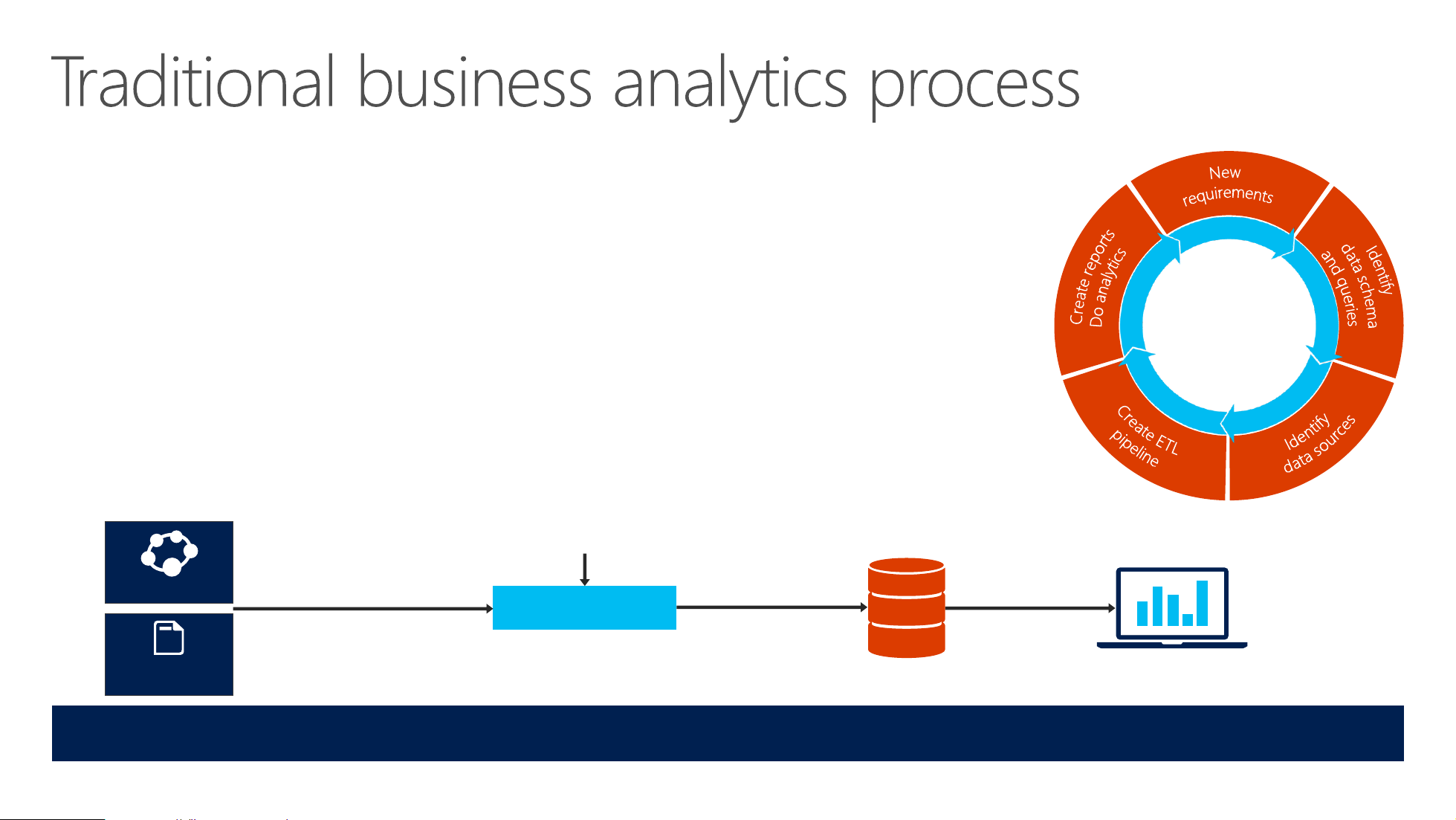

Traditional business analytics process

1. Start with end-user requirements to identify desired reports and analysis

2. Define corresponding database schema and queries

3. Identify the required data sources

4. Create an Extract-Transform-Load (ETL) pipeline to extract required data (curation) and transform it to target schema (‘schema-on-write’)

5. Create reports, analyze data

[Diagram: a cycle linking new requirements, identifying data sources, creating the ETL pipeline, defining schema and queries, and creating reports and analytics]

[Diagram: LOB Applications → ETL pipeline (dedicated ETL tools, e.g. SSIS) → Relational store with defined schema → Queries → Results]

All data not immediately required is discarded or archived
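The five steps above can be sketched as a tiny schema-on-write pipeline. This is a minimal illustration only, assuming an in-memory SQLite database as the relational target; the `orders` table, the sample records, and the helper names `transform` and `run_etl` are hypothetical and do not come from the session.

```python
import sqlite3

# Hypothetical raw records from a line-of-business (LOB) application.
RAW_ORDERS = [
    {"order_id": "1001", "customer": "alice", "amount": "19.99", "notes": "gift wrap"},
    {"order_id": "1002", "customer": "bob", "amount": "5.00", "referrer": "email"},
    {"order_id": "bad", "customer": "carol", "amount": "n/a"},
]


def transform(record):
    """Coerce a raw record to the target schema, or return None if it does not fit."""
    try:
        return (int(record["order_id"]), record["customer"], float(record["amount"]))
    except (KeyError, ValueError):
        return None


def run_etl(raw_records, conn):
    """Extract, transform and load; returns how many records were rejected."""
    # The schema is fixed before any data is loaded (schema-on-write).
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders ("
        "order_id INTEGER PRIMARY KEY, customer TEXT NOT NULL, amount REAL NOT NULL)"
    )
    rows = [transform(r) for r in raw_records]
    good = [row for row in rows if row is not None]
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", good)
    conn.commit()
    return len(rows) - len(good)


conn = sqlite3.connect(":memory:")
rejected = run_etl(RAW_ORDERS, conn)
report = conn.execute(
    "SELECT customer, SUM(amount) FROM orders GROUP BY customer ORDER BY customer"
).fetchall()
print(report, "rejected:", rejected)
```

Note that the `notes` and `referrer` fields never reach the warehouse, and the record that does not fit the schema is dropped: only what the report needs survives the load.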

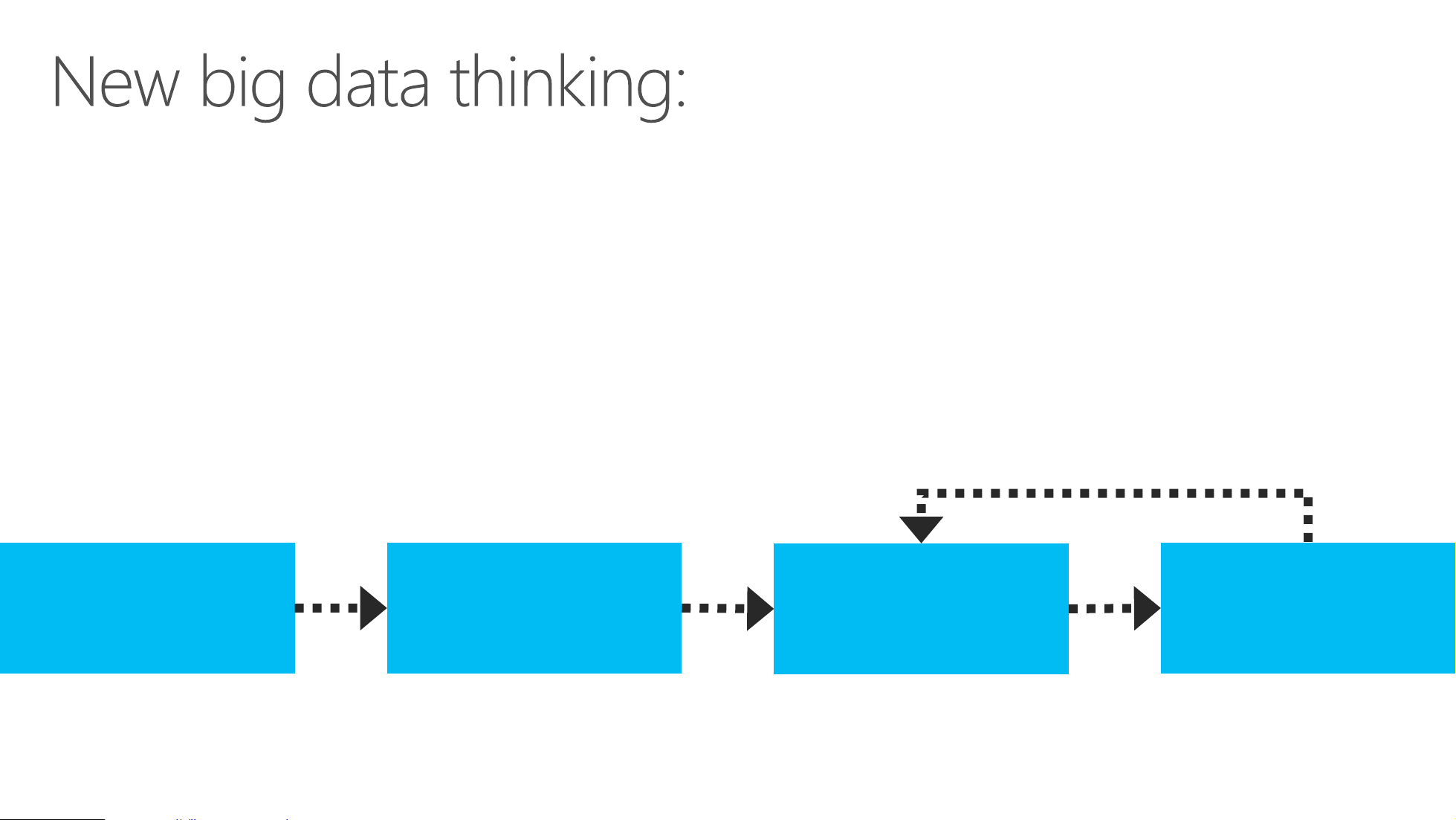

New big data thinking: All data has value

• All data has potential value

• Data hoarding

• No defined schema; data is stored in native format

• Schema is imposed and transformations are done at query time (schema-on-read).

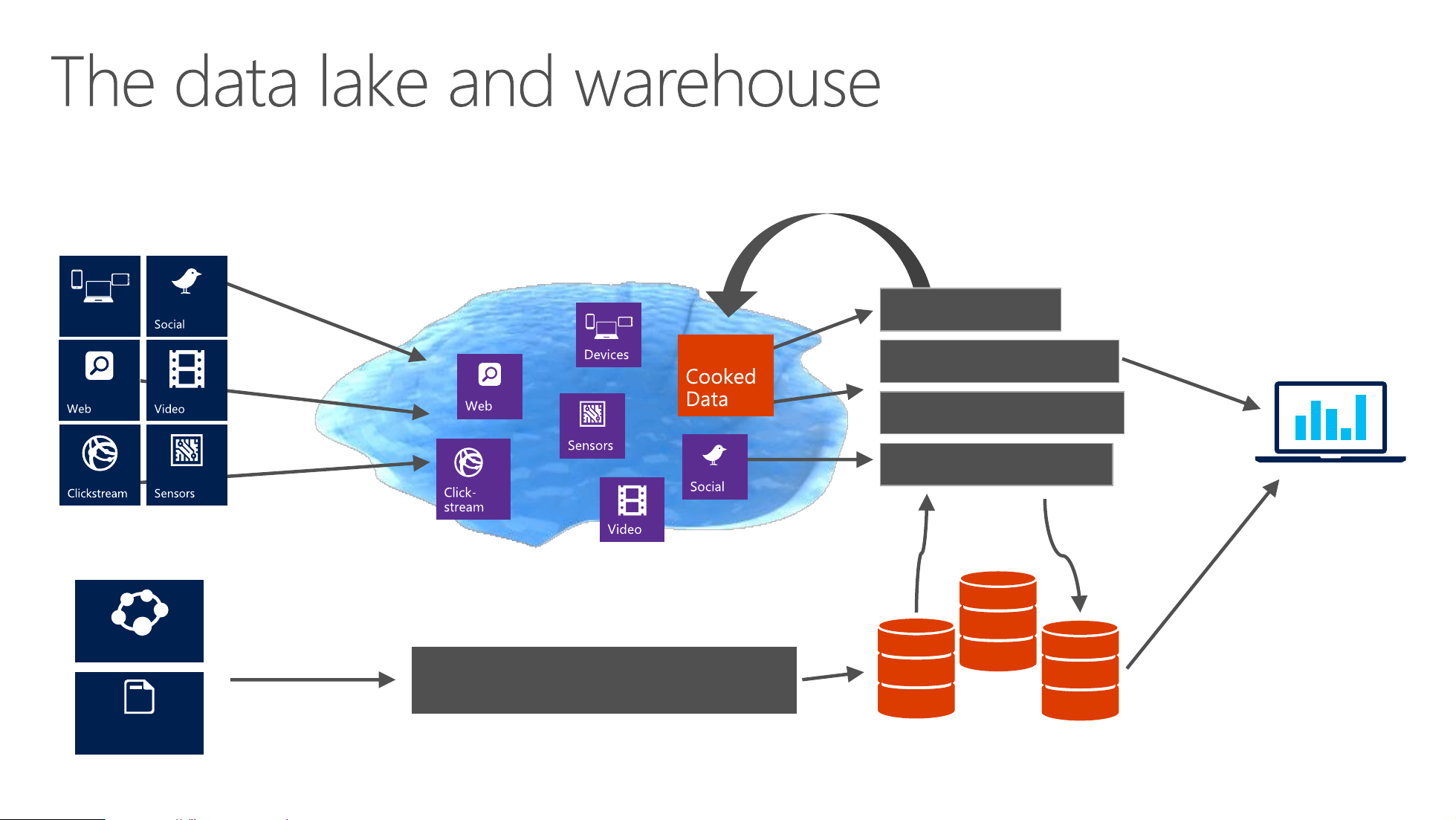

• Apps and users interpret the data as they see fit

[Diagram: Gather data from all sources → Store indefinitely → Analyze → See results → Iterate]

The data lake and warehouse

[Diagram labels. Sources: Devices, Social, Web, Video, Sensors, Clickstream, LOB Applications. Data lake: Batch queries, Interactive queries, Real-time analytics, Machine Learning, Exploration, Meta-Data, Joins, Cooked Data. Warehouse: ETL pipeline, Relational, Defined schema, Queries, Results, Dashboards, Reports.]
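Schema-on-read, as described in the bullets on big data thinking, can be illustrated with a short sketch. It assumes raw events are kept as JSON lines in their native form; the sample events and the helper `read_with_schema` are hypothetical, and a real data lake would use a distributed store and query engine rather than an in-memory list.

```python
import json

# Hypothetical raw events, kept exactly as they arrived (one JSON document per line).
RAW_LINES = [
    '{"type": "click", "user": "u1", "page": "/home", "ts": "2016-09-26T10:00:00"}',
    '{"type": "purchase", "user": "u1", "sku": "A7", "amount": 49.5}',
    '{"type": "sensor", "device": "d9", "temp_c": 21.4}',
    '{"type": "click", "user": "u2", "page": "/cart"}',
]


def read_with_schema(lines, event_type, fields):
    """Apply a schema at query time: pick one event type and project the given fields."""
    for line in lines:
        event = json.loads(line)
        if event.get("type") == event_type:
            # Missing fields become None rather than causing the record to be rejected.
            yield {name: event.get(name) for name in fields}


# Two consumers interpret the same stored data differently.
clicks = list(read_with_schema(RAW_LINES, "click", ["user", "page"]))
revenue = sum(e["amount"] for e in read_with_schema(RAW_LINES, "purchase", ["amount"]))
print(clicks, revenue)
```

Nothing is discarded at load time; each consumer decides which records and fields matter when it reads.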

However, Big Data is not easy…

• Obtaining skills and capabilities

• Determining how to get value

• Integrating with existing IT investments

*Gartner: Survey Analysis – Hadoop Adoption Drivers and Challenges (Stamford, CT.: Gartner, 2015)

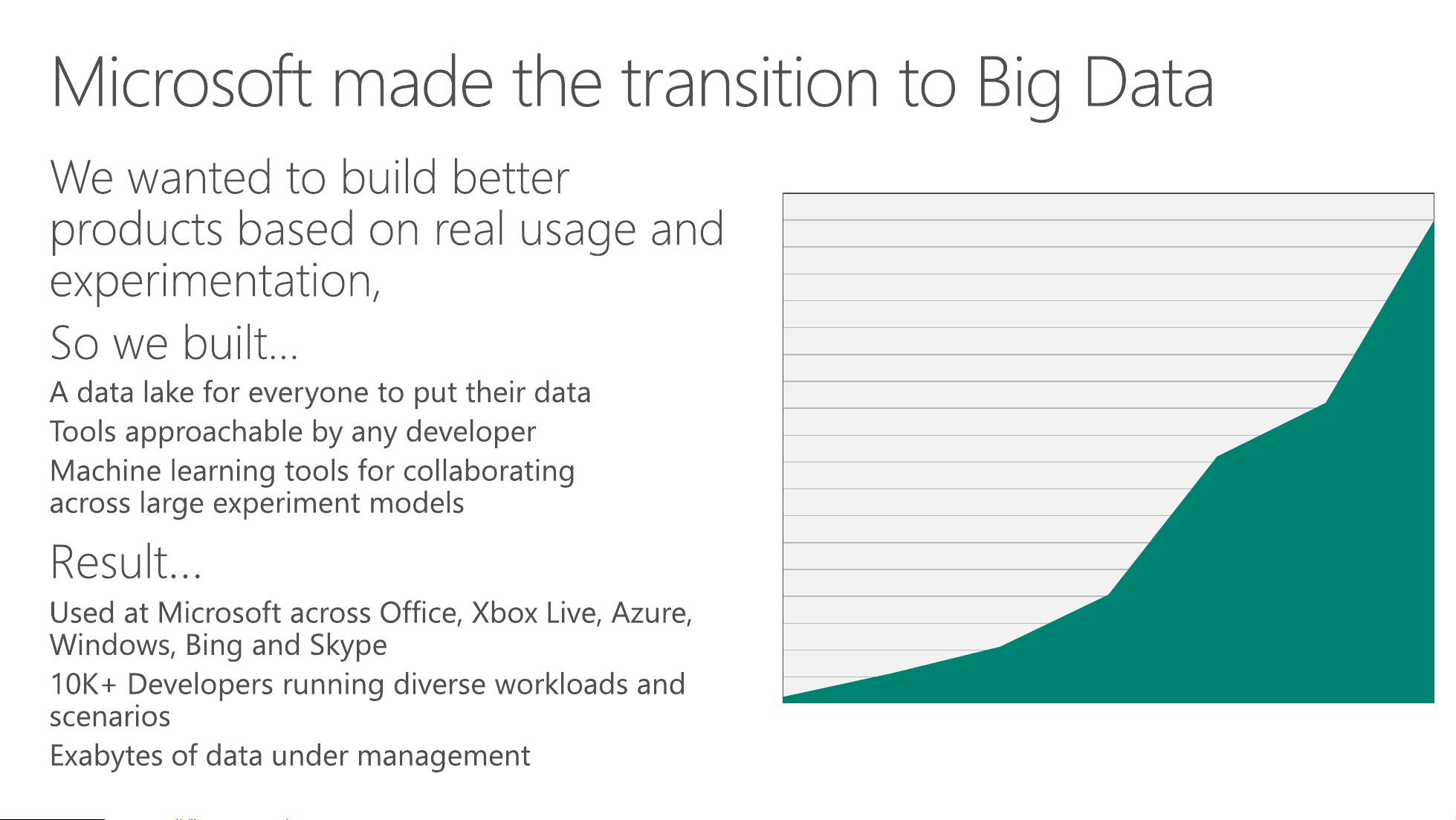

Microsoft made the transition to Big Data

We wanted to build better products based on real usage and experimentation, so we built…

• A data lake for everyone to put their data

• Tools approachable by any developer

• Machine learning tools for collaborating across large experiment models

Result…

• Used at Microsoft across Office, Xbox Live, Azure, Windows, Bing and Skype

• 10K+ developers running diverse workloads and scenarios

• Exabytes of data under management

[Chart: Data Stored, axis ticks 1 to 7, labeled with Xbox Live, Office365, LCA, Live, Bing, SMSG, Yammer, CRM/Dynamics, Skype, Exchange, Windows, Malware Protection, Microsoft Stores, Commerce Risk]

Patterns for Big Data

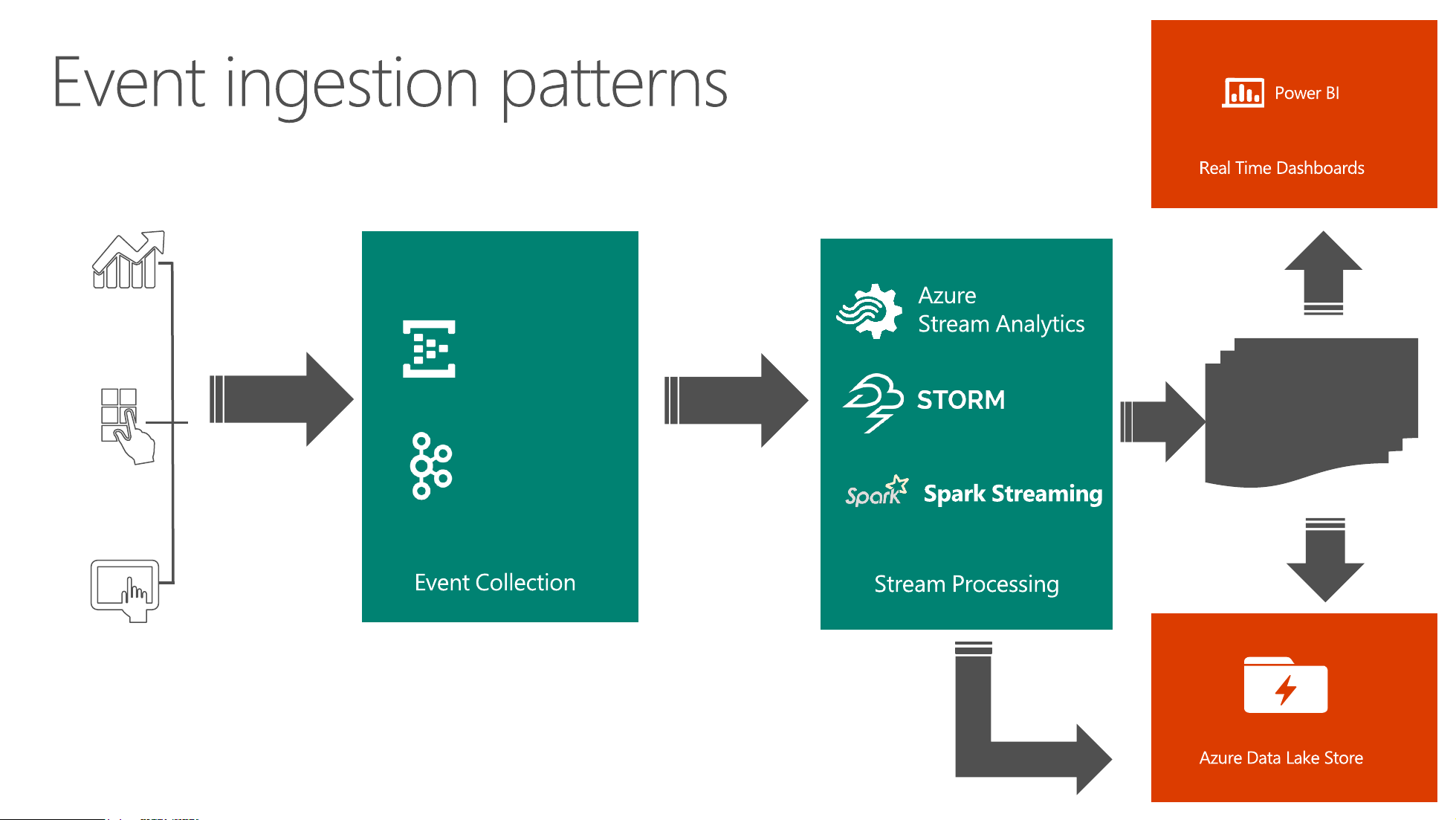

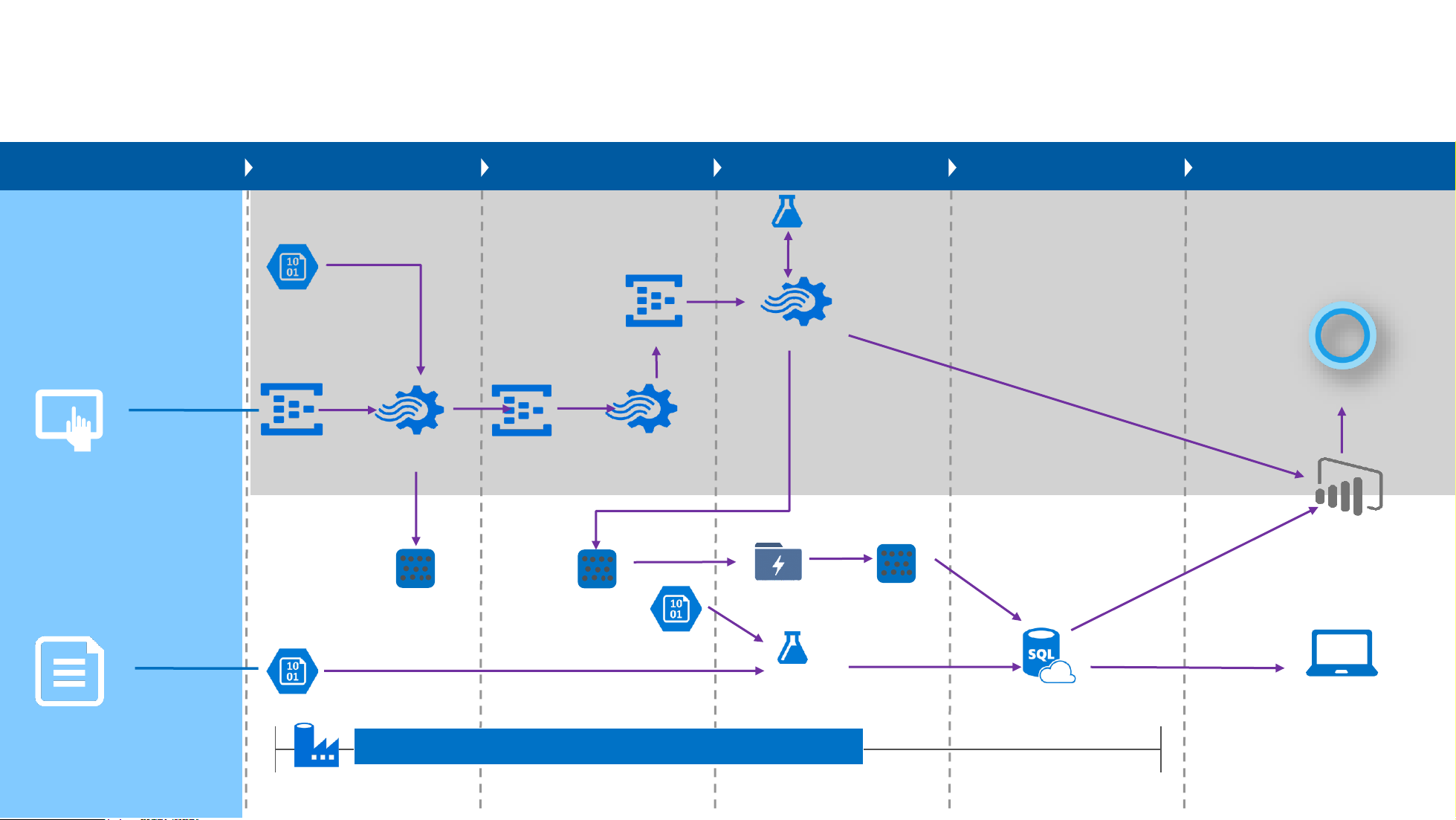

Big Data Analytics – Data Flow

[Diagram labels. Stages: DATA → INTELLIGENCE → ACTION. Sources: Business apps, Custom apps, Sensors and devices. Ingestion: Bulk Ingestion, Event Ingestion. Discovery: Azure Data Catalog. Storage: Azure Data Lake Store. Preparation, Analytics and Machine Learning. Visualization: Power BI. People.]

Event ingestion patterns

[Diagram labels. Sources: Business apps, Custom apps, Sensors and devices. Event Collection: Azure Event Hubs, Kafka. Stream Processing: Azure Stream Analytics, Spark Streaming. Outputs: Power BI Real Time Dashboards, Transformed Data, Raw Events in Azure Data Lake Store.]

Lambda architecture

[Diagram labels. Stages: DATA SOURCES → INGEST → PREPARE → ANALYZE → PUBLISH → CONSUME. Data sources: Sensors (IoT, Devices, Mobile); Logs (CSV, JSON, XML…); Clickstream; On Premise. Hot Path: Event hubs, ASA Job Rule, Flatten & Metadata Join, Reference Data, Machine Learning Real-time Scoring, Cortana, Power BI. Cold Path: Data Lake Store (Archived Data), Data Lake Analytics, Aggregated Data (Hourly, Daily, Monthly Roll-Ups), Machine Learning Offline Training and Batch Scoring, Azure SQL Data Warehouse, Web/LOB Dashboards.]

Data Factory: Move Data, Orchestrate, Schedule, and Monitor
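The split between a hot path and a cold path in the lambda architecture can be sketched in a few lines. This is a simplified, single-process illustration under stated assumptions: the sensor events are made up, `HotPath` stands in for a stream processing job, the `archive` list stands in for the raw-event store, and `cold_path_hourly_rollup` stands in for a batch job. None of these names come from the Azure services shown on the slide.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical sensor events: (ISO timestamp, device id, reading).
EVENTS = [
    ("2016-09-26T10:05:00", "d1", 20.0),
    ("2016-09-26T10:40:00", "d1", 22.0),
    ("2016-09-26T11:10:00", "d1", 30.0),
    ("2016-09-26T11:15:00", "d2", 18.0),
]


class HotPath:
    """Keeps a running per-device average that is updated as each event arrives."""

    def __init__(self):
        self.count = defaultdict(int)
        self.total = defaultdict(float)

    def on_event(self, device, value):
        self.count[device] += 1
        self.total[device] += value
        return self.total[device] / self.count[device]


def cold_path_hourly_rollup(archive):
    """Recompute hourly averages per device from the full raw archive."""
    buckets = defaultdict(list)
    for ts, device, value in archive:
        hour = datetime.fromisoformat(ts).replace(minute=0, second=0, microsecond=0)
        buckets[(hour.isoformat(), device)].append(value)
    return {key: sum(vals) / len(vals) for key, vals in buckets.items()}


archive = []  # stands in for the raw-event store
hot = HotPath()
latest = {}
for ts, device, value in EVENTS:
    archive.append((ts, device, value))           # every raw event is kept
    latest[device] = hot.on_event(device, value)  # low-latency view

rollups = cold_path_hourly_rollup(archive)        # batch view
print(latest)
print(rollups)
```

Both paths consume the same events: the hot path answers quickly from incremental state, while the cold path can be rerun over the complete archive whenever the roll-up logic changes.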

Leading Computer Manufacturer / Retailer Recommendation

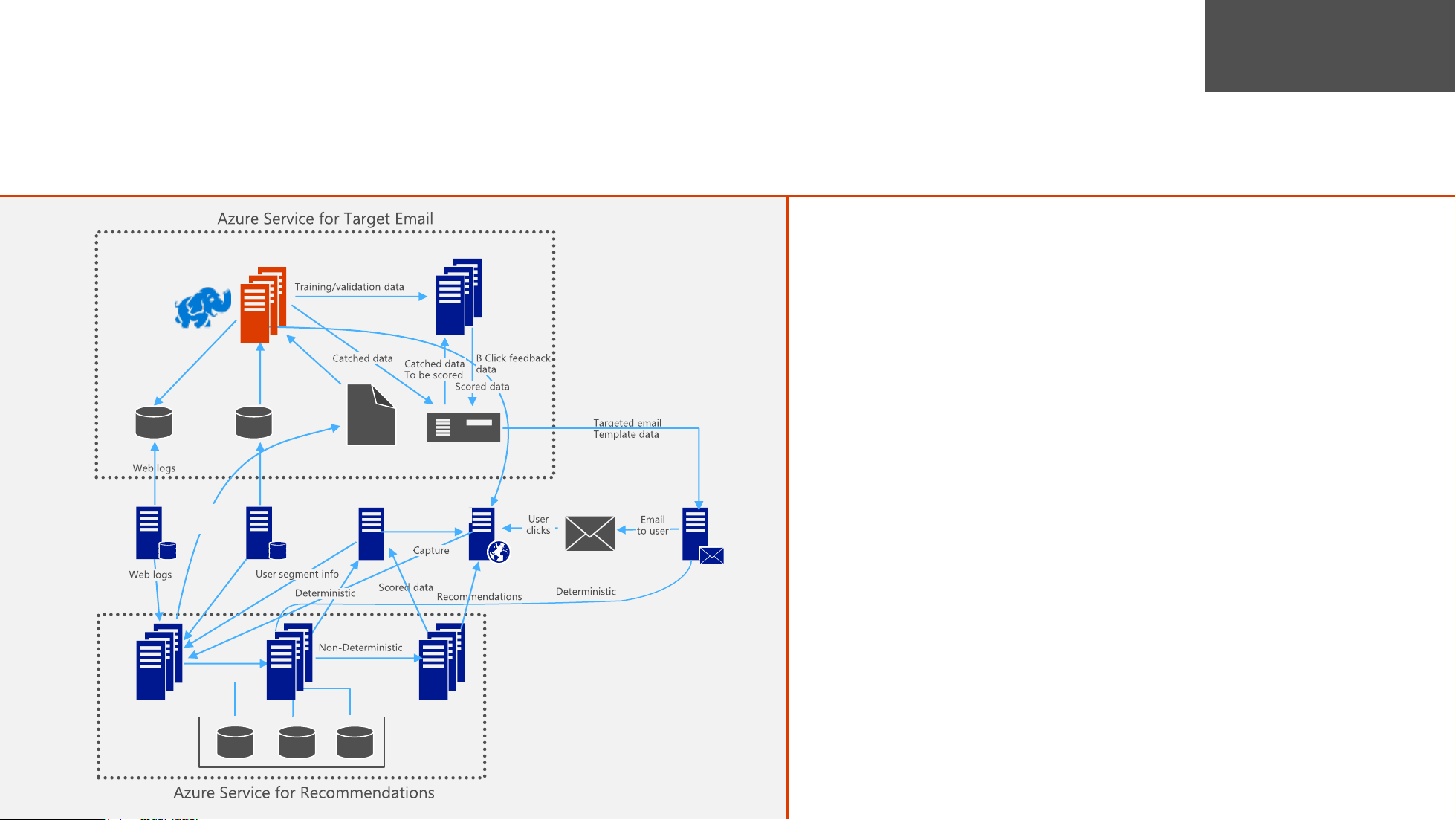

How They Did It: Analyzing Clickstream to Provide Real-time Recommendations

How They Did It

• Collect clickstream data

  • In tab separated text files

  • Adding 22 new files per hour, ~5-18 MB/file

  • Currently 1TB and growing

• Spin up Hadoop

• Use Hive scripts because of SQL-like syntax

  • Extracts click behavior like buys, additions to carts, reviews etc. and assigns scores

  • Jobs run hourly

  • Currently 8 nodes, with plans to grow to 16

[Diagram labels: Online Azure Service for Target Email, HDInsight Cluster, AzureML, Training/validation data, Cached data, Click feedback data, Data to be scored, Scored data, Blob Storage, Database, Targeted email template data, IaaS VM, Web logs, Email Server, Visitor Information, Omniture, Product Catalog Service, Website.com, User email clicks, Capture Targeted Email, User segment info, Deterministic Recommendations, Non-Deterministic, NRT, Event Hub, Azure SQL, NoSQL, Persisted Storage]
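The scoring step described above (extract click behavior and assign scores) can be illustrated with a small sketch. The slide says this was done with Hive scripts on HDInsight; the version below is plain Python for illustration only. The column layout, the action names, and the weights in `ACTION_SCORES` are all hypothetical, since the slide does not give them. The helper `hourly_volume_mb` simply multiplies out the file counts and sizes quoted on the slide.

```python
import csv
import io
from collections import defaultdict

# Hypothetical weights; the slide says scores are assigned but does not give values.
ACTION_SCORES = {"buy": 5, "add_to_cart": 3, "review": 2, "view": 1}

# Hypothetical tab-separated clickstream sample: user, product, action.
SAMPLE_TSV = (
    "u1\tlaptop-15\tview\n"
    "u1\tlaptop-15\tadd_to_cart\n"
    "u1\tlaptop-15\tbuy\n"
    "u2\tmouse-2\treview\n"
    "u2\tlaptop-15\tview\n"
)


def score_clicks(tsv_file):
    """Sum action scores per (user, product) pair from a tab-separated file object."""
    scores = defaultdict(int)
    for user, product, action in csv.reader(tsv_file, delimiter="\t"):
        scores[(user, product)] += ACTION_SCORES.get(action, 0)
    return dict(scores)


def hourly_volume_mb(files_per_hour=22, min_mb=5, max_mb=18):
    """Range of data arriving per hour, from the figures quoted on the slide."""
    return files_per_hour * min_mb, files_per_hour * max_mb


scores = score_clicks(io.StringIO(SAMPLE_TSV))
print(scores)
print(hourly_volume_mb())
```

With 22 files per hour at roughly 5 to 18 MB each, the hourly job would process on the order of 110 to 396 MB of new clickstream data per run.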

Azure Service for Recommendations

Making Big Data Easy