Introduction to Data Mining

Lab 5: Putting it all together

5.1. The data mining process

In the fifth class, we look at some more global issues about the data mining process (see the class 5 lecture by Ian H. Witten [1]). We go through four lessons: the data mining process, pitfalls and pratfalls, data mining and ethics, and association-rule learners.

According to [1], the data mining process includes the following steps: ask a question, gather data, clean the data, define new features, and deploy the result. Write a brief description of each step:

- Ask a question

Ask the right kind of question, such as "What do I want to know?".

This essential step provides the framework for the subsequent stages of the data mining process and keeps the work focused and goal-oriented. Omitting it leads to a lack of clarity and to avoidable pitfalls later on.

- Gather data

Obtain the data required to answer the research question and/or enrich existing datasets. Although a wealth of data is available, problems with data quality, relevance, and quantity can limit its usefulness. To improve model performance, increasing the amount of data is often more effective than fine-tuning the algorithm alone, as the adage "more data beats a clever algorithm" suggests.

- Clean the data

Real-world data is often characterized by noise, missing values, and inconsistencies. To improve data quality and facilitate accurate analysis, data preprocessing techniques such as anomaly detection, imputation, integration, normalization, and standardization can be employed to clean and transform the data.

[1] http://www.cs.waikato.ac.nz/ml/weka/mooc/dataminingwithweka/

- Define new features

Create new attributes or features from the existing data that can provide additional insights and improve model performance. This process, often referred to as feature engineering, involves transforming and combining existing features to create more informative ones.

- Deploy the result

Deploy the discovered knowledge or model into real-world applications or decision-making processes. This involves sharing the results with relevant stakeholders and integrating them into business operations.

And here are the 7 steps of the KDD process according to Han and Kamber (2011):

+ Data Cleaning

Removing noise and inconsistent data to improve data quality.

+ Data Integration

Combining data from multiple sources into a coherent data store.

+ Data Selection

Retrieving the data relevant to the analysis task from the database.

+ Data Transformation

Converting data into forms appropriate for mining, often involving normalization and aggregation.

+ Data Mining

Applying intelligent methods to extract data patterns.

+ Pattern Evaluation

Identifying the truly interesting patterns that represent valuable knowledge, using appropriate interestingness measures to evaluate their significance.

+ Knowledge Presentation

Presenting the mined knowledge in a clear, concise, and visual format that the end user can easily understand and act on.

5.2. Pitfalls and pratfalls

Follow the lecture in [1] to learn what the pitfalls and pratfalls in data mining are.

Do experiments to investigate how OneR and J48 deal with missing values.

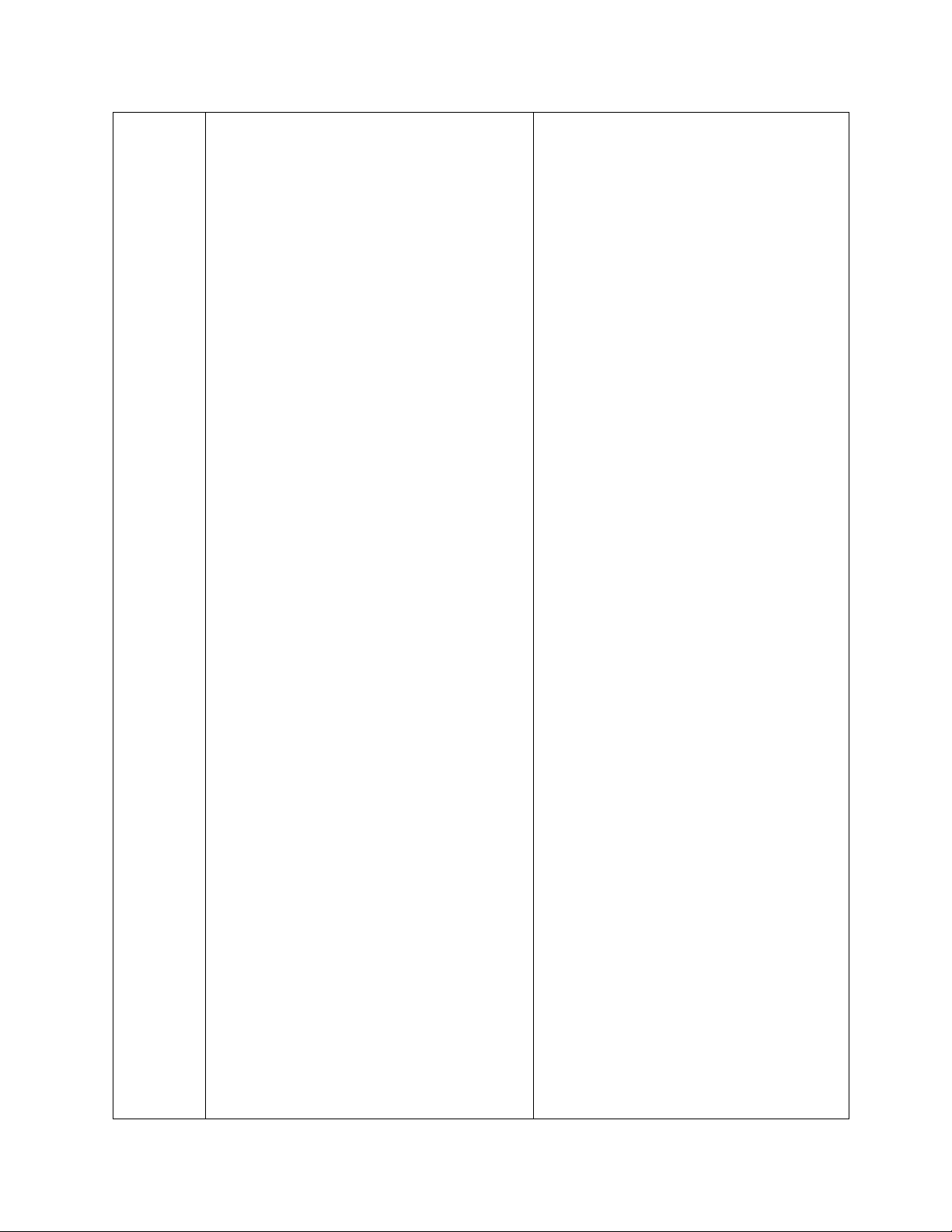
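To make the experiment concrete, here is a minimal Python sketch of a one-attribute rule on the `outlook` attribute that treats a missing value as its own category ("?"). It is not Weka's OneR implementation: it looks at a single attribute only and reports training-set counts, not cross-validation results. The function name `one_rule` and the choice of which instances have a missing `outlook` (`MISSING_IDX`) are assumptions made for illustration.

```python
from collections import Counter, defaultdict

# (outlook, play) pairs of the 14-instance weather data.
WEATHER = [
    ("sunny", "no"), ("sunny", "no"), ("overcast", "yes"), ("rainy", "yes"),
    ("rainy", "yes"), ("rainy", "no"), ("overcast", "yes"), ("sunny", "no"),
    ("sunny", "yes"), ("rainy", "yes"), ("sunny", "yes"), ("overcast", "yes"),
    ("overcast", "yes"), ("rainy", "no"),
]


def one_rule(pairs):
    """Map each attribute value (None becomes '?') to its majority class.

    Returns the rule and the number of training instances it classifies correctly.
    """
    by_value = defaultdict(Counter)
    for value, label in pairs:
        key = "?" if value is None else value
        by_value[key][label] += 1
    rule = {v: counts.most_common(1)[0][0] for v, counts in by_value.items()}
    correct = sum(counts[rule[v]] for v, counts in by_value.items())
    return rule, correct


# Hypothetical missing pattern (an assumption): outlook is blanked out
# for four of the five "no" instances.
MISSING_IDX = {0, 1, 5, 7}
with_missing = [
    (None if i in MISSING_IDX else value, label)
    for i, (value, label) in enumerate(WEATHER)
]

rule_orig, correct_orig = one_rule(WEATHER)
rule_miss, correct_miss = one_rule(with_missing)
print(rule_orig, f"({correct_orig}/{len(WEATHER)} instances correct)")
print(rule_miss, f"({correct_miss}/{len(with_missing)} instances correct)")
```

Because "?" is handled like any other value, a pattern of missingness that correlates with the class becomes a predictive branch of the rule.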

Write down the results in the following table:

Dataset: weather.nominal.arff (original)

OneR's classifier model:

    === Classifier model (full training set) ===

    outlook:
        sunny -> no
        overcast -> yes
        rainy -> yes
    (10/14 instances correct)

J48's classifier model:

    === Classifier model (full training set) ===

    J48 pruned tree
    ------------------

    outlook = sunny
    |   humidity = high: no (3.0)
    |   humidity = normal: yes (2.0)
    outlook = overcast: yes (4.0)
    outlook = rainy
    |   windy = TRUE: no (2.0)
    |   windy = FALSE: yes (3.0)

    Number of Leaves : 5
    Size of the tree : 8

Performance (10-fold stratified cross-validation), summary:

| Measure | OneR | J48 |
|---|---|---|
| Correctly Classified Instances | 6 (42.8571 %) | 7 (50 %) |
| Incorrectly Classified Instances | 8 (57.1429 %) | 7 (50 %) |
| Kappa statistic | -0.1429 | -0.0426 |
| Mean absolute error | 0.5714 | 0.4167 |
| Root mean squared error | 0.7559 | 0.5984 |
| Relative absolute error | 120 % | 87.5 % |
| Root relative squared error | 153.2194 % | 121.2987 % |
| Total Number of Instances | 14 | 14 |

Detailed accuracy by class:

| Classifier | Class | TP Rate | FP Rate | Precision | Recall | F-Measure | MCC | ROC Area | PRC Area |
|---|---|---|---|---|---|---|---|---|---|
| OneR | yes | 0.444 | 0.600 | 0.571 | 0.444 | 0.500 | -0.149 | 0.422 | 0.611 |
| OneR | no | 0.400 | 0.556 | 0.286 | 0.400 | 0.333 | -0.149 | 0.422 | 0.329 |
| OneR | Weighted Avg. | 0.429 | 0.584 | 0.469 | 0.429 | 0.440 | -0.149 | 0.422 | 0.510 |
| J48 | yes | 0.556 | 0.600 | 0.625 | 0.556 | 0.588 | -0.043 | 0.633 | 0.758 |
| J48 | no | 0.400 | 0.444 | 0.333 | 0.400 | 0.364 | -0.043 | 0.633 | 0.457 |
| J48 | Weighted Avg. | 0.500 | 0.544 | 0.521 | 0.500 | 0.508 | -0.043 | 0.633 | 0.650 |

Confusion matrix (OneR):

    a b   <-- classified as
    4 5 | a = yes
    3 2 | b = no

Confusion matrix (J48):

    a b   <-- classified as
    5 4 | a = yes
    3 2 | b = no

Dataset: weather.nominal.arff (with missing values)

OneR's classifier model:

    === Classifier model (full training set) ===

    outlook:
        sunny -> yes
        overcast -> yes
        rainy -> yes
        ? -> no
    (13/14 instances correct)

J48's classifier model:

    === Classifier model (full training set) ===

    J48 pruned tree
    ------------------
    : yes (14.0/5.0)

    Number of Leaves : 1
    Size of the tree : 1

Performance (10-fold stratified cross-validation), summary:

| Measure | OneR | J48 |
|---|---|---|
| Correctly Classified Instances | 13 (92.8571 %) | 7 (50 %) |
| Incorrectly Classified Instances | 1 (7.1429 %) | 7 (50 %) |
| Kappa statistic | 0.8372 | -0.1395 |
| Mean absolute error | 0.0714 | 0.5403 |
| Root mean squared error | 0.2673 | 0.5727 |
| Relative absolute error | 15 % | 113.4615 % |
| Root relative squared error | 54.1712 % | 116.0707 % |
| Total Number of Instances | 14 | 14 |

Detailed accuracy by class:

| Classifier | Class | TP Rate | FP Rate | Precision | Recall | F-Measure | MCC | ROC Area | PRC Area |
|---|---|---|---|---|---|---|---|---|---|
| OneR | yes | 1.000 | 0.200 | 0.900 | 1.000 | 0.947 | 0.849 | 0.900 | 0.900 |
| OneR | no | 0.800 | 0.000 | 1.000 | 0.800 | 0.889 | 0.849 | 0.900 | 0.871 |
| OneR | Weighted Avg. | 0.929 | 0.129 | 0.936 | 0.929 | 0.926 | 0.849 | 0.900 | 0.890 |
| J48 | yes | 0.667 | 0.800 | 0.600 | 0.667 | 0.632 | -0.141 | 0.211 | 0.545 |
| J48 | no | 0.200 | 0.333 | 0.250 | 0.200 | 0.222 | -0.141 | 0.211 | 0.306 |
| J48 | Weighted Avg. | 0.500 | 0.633 | 0.475 | 0.500 | 0.485 | -0.141 | 0.211 | 0.460 |

Confusion matrix (OneR):

    a b   <-- classified as
    9 0 | a = yes
    1 4 | b = no

Confusion matrix (J48):

    a b   <-- classified as
    6 3 | a = yes
    4 1 | b = no
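The accuracy and kappa values in the summaries can be recomputed from the confusion matrices. The following is a minimal Python sketch, assuming the standard definition of Cohen's kappa (observed agreement corrected for the agreement expected by chance). The function name `accuracy_and_kappa` and the `MATRICES` dictionary are illustrative and not part of Weka.

```python
def accuracy_and_kappa(matrix):
    """Return (accuracy, kappa) for a square confusion matrix.

    Rows are actual classes, columns are predicted classes.
    """
    n = sum(sum(row) for row in matrix)
    observed = sum(matrix[i][i] for i in range(len(matrix))) / n
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(col) for col in zip(*matrix)]
    # Agreement expected if predictions were independent of the true class.
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / (n * n)
    kappa = (observed - expected) / (1 - expected)
    return observed, kappa


# Confusion matrices reported in the experiment (class order: yes, no).
MATRICES = {
    "OneR, original": [[4, 5], [3, 2]],
    "J48, original": [[5, 4], [3, 2]],
    "OneR, missing values": [[9, 0], [1, 4]],
    "J48, missing values": [[6, 3], [4, 1]],
}

results = {name: accuracy_and_kappa(m) for name, m in MATRICES.items()}
for name, (acc, kappa) in results.items():
    print(f"{name}: accuracy = {acc:.4%}, kappa = {kappa:.4f}")
```

A kappa near zero or below, as for both classifiers on the original data, means the classifier does no better than chance agreement on this 14-instance dataset.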

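Remark: how do OneR and J48 deal with missing values?

- OneR: treats "missing" as a separate attribute value (the "? -> no" branch in its model), so the mere fact that a value is missing can be as informative as the value itself. This changes the result substantially: the rule gets 13/14 training instances right instead of 10/14, and cross-validated accuracy rises from 42.8571 % to 92.8571 %.

- J48: does not treat "missing" as a value of its own. The cross-validated accuracy stayed at 50 % with and without the missing values, although the pruned tree collapsed from 5 leaves to a single leaf that always predicts yes.

5.3. Data mining and ethics

Reading

5.4. Association-rule learners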

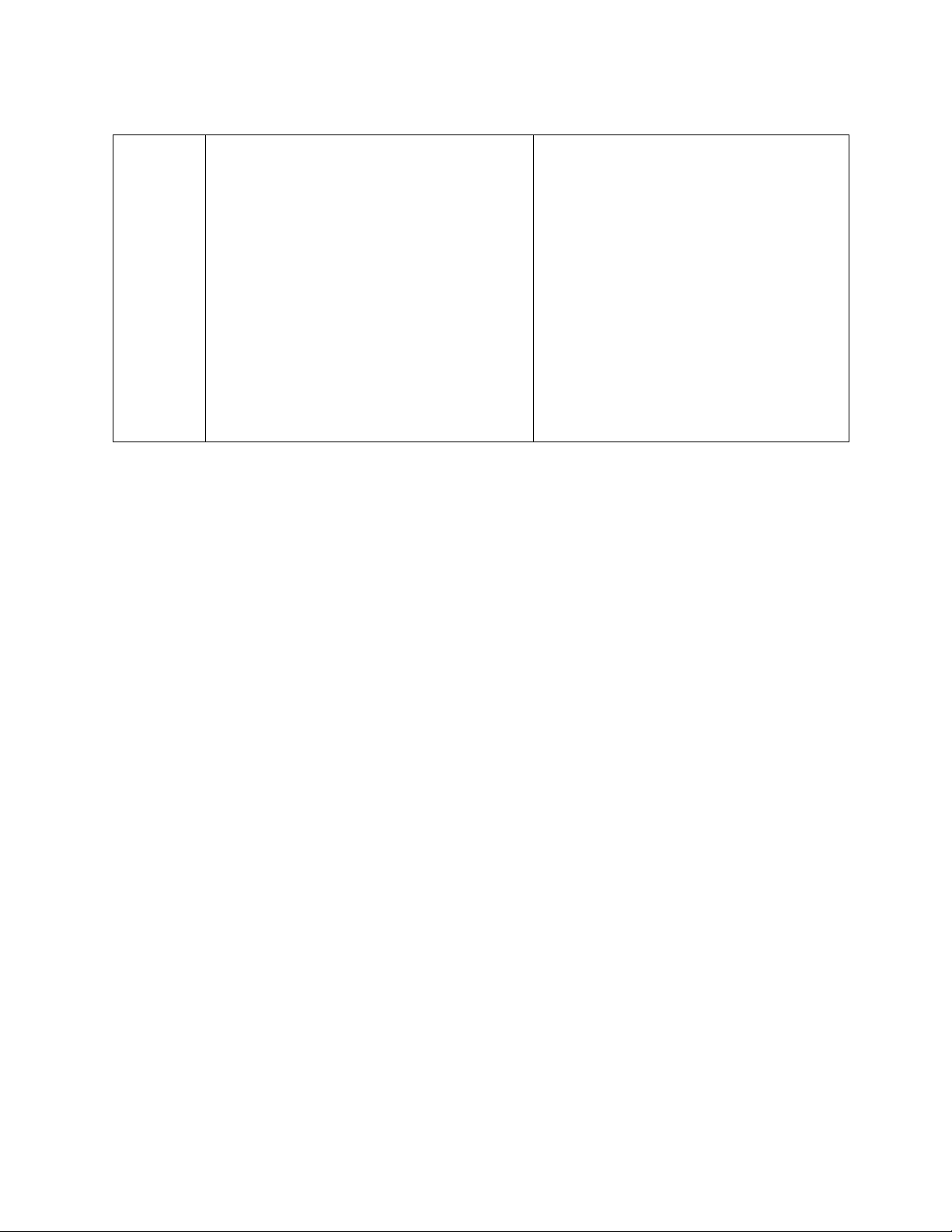

Do experiments to investigate how Apriori and FP-Growth generate association rules for the dataset vote.arff.

Dataset: vote.arff

Apriori based association rules:

    Apriori
    =======

    Minimum support: 0.45 (196 instances)
    Minimum metric <confidence>: 0.9
    Number of cycles performed: 11

    Generated sets of large itemsets:

    Size of set of large itemsets L(1): 20
    Size of set of large itemsets L(2): 17
    Size of set of large itemsets L(3): 6
    Size of set of large itemsets L(4): 1

    Best rules found:

     1. adoption-of-the-budget-resolution=y physician-fee-freeze=n 219 ==> Class=democrat 219 lift:(1.63) lev:(0.19) [84] conv:(84.58)
     2. adoption-of-the-budget-resolution=y physician-fee-freeze=n aid-to-nicaraguan-contras=y 198 ==> Class=democrat 198 lift:(1.63) lev:(0.18) [76] conv:(76.47)
     3. physician-fee-freeze=n aid-to-nicaraguan-contras=y 211 ==> Class=democrat 210 lift:(1.62) lev:(0.19) [80] conv:(40.74)
     4. physician-fee-freeze=n education-spending=n 202 ==> Class=democrat 201 lift:(1.62) lev:(0.18) [77] conv:(39.01)
     5. physician-fee-freeze=n 247 ==> Class=democrat 245 lift:(1.62) lev:(0.21) [93] conv:(31.8)
     6. el-salvador-aid=n Class=democrat 200 ==> aid-to-nicaraguan-contras=y 197 lift:(1.77) lev:(0.2) [85] conv:(22.18)
     7. el-salvador-aid=n 208 ==> aid-to-nicaraguan-contras=y 204 lift:(1.76) lev:(0.2) [88] conv:(18.46)
     8. adoption-of-the-budget-resolution=y aid-to-nicaraguan-contras=y Class=democrat 203 ==> physician-fee-freeze=n 198 <conf:(0.98)> lift:(1.72) lev:(0.19) [82] conv:(14.62)
     9. el-salvador-aid=n aid-to-nicaraguan-contras=y 204 ==> Class=democrat 197 lift:(1.57) lev:(0.17) [71] conv:(9.85)
    10. aid-to-nicaraguan-contras=y Class=democrat 218 ==> physician-fee-freeze=n 210 lift:(1.7) lev:(0.2) [86] conv:(10.47)

FP-Growth based association rules:

    === Run information ===

    Scheme:       weka.associations.FPGrowth -P 2 -I -1 -N 10 -T 0 -C 0.9 -D 0.05 -U 1.0 -M 0.1
    Relation:     vote
    Instances:    435
    Attributes:   17
                  handicapped-infants
                  water-project-cost-sharing
                  adoption-of-the-budget-resolution
                  physician-fee-freeze
                  el-salvador-aid
                  religious-groups-in-schools
                  anti-satellite-test-ban
                  aid-to-nicaraguan-contras
                  mx-missile
                  immigration
                  synfuels-corporation-cutback
                  education-spending
                  superfund-right-to-sue
                  crime
                  duty-free-exports
                  export-administration-act-south-africa
                  Class

    === Associator model (full training set) ===

    FPGrowth found 41 rules (displaying top 10)

     1. [el-salvador-aid=y, Class=republican]: 157 ==> [physician-fee-freeze=y]: 156 lift:(2.44) lev:(0.21) conv:(46.56)
     2. [crime=y, Class=republican]: 158 ==> [physician-fee-freeze=y]: 155 <conf:(0.98)> lift:(2.41) lev:(0.21) conv:(23.43)
     3. [religious-groups-in-schools=y, physician-fee-freeze=y]: 160 ==> [el-salvador-aid=y]: 156 lift:(2) lev:(0.18) conv:(16.4)
     4. [Class=republican]: 168 ==> [physician-fee-freeze=y]: 163 lift:(2.38) lev:(0.22) conv:(16.61)
     5. [adoption-of-the-budget-resolution=y, anti-satellite-test-ban=y, mx-missile=y]: 161 ==> [aid-to-nicaraguan-contras=y]: 155 lift:(1.73) lev:(0.15) conv:(10.2)
     6. [physician-fee-freeze=y, Class=republican]: 163 ==> [el-salvador-aid=y]: 156 lift:(1.96) lev:(0.18) conv:(10.45)
     7. [religious-groups-in-schools=y, el-salvador-aid=y, superfund-right-to-sue=y]: 160 ==> [crime=y]: 153 lift:(1.68) lev:(0.14) conv:(8.6)
     8. [el-salvador-aid=y, superfund-right-to-sue=y]: 170 ==> [crime=y]: 162 lift:(1.67) lev:(0.15) conv:(8.12)
     9. [crime=y, physician-fee-freeze=y]: 168 ==> [el-salvador-aid=y]: 160 lift:(1.95) lev:(0.18) conv:(9.57)
    10. [el-salvador-aid=y, physician-fee-freeze=y]: 168 ==> [crime=y]: 160 lift:(1.67) lev:(0.15) conv:(8.02)
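The metrics printed next to each rule can be recomputed from the counts. Below is a minimal Python sketch for the Apriori rule physician-fee-freeze=n 247 ==> Class=democrat 245. The function name `rule_metrics` is illustrative. Two things are assumptions: the support of Class=democrat is taken as 435 - 168 = 267, derived from the Class=republican count of 168 in the FP-Growth output, and the conviction formula uses a "+ 1" in the denominator because that variant reproduces the conv values reported here.

```python
def rule_metrics(n_total, n_antecedent, n_consequent, n_both):
    """Confidence, lift, leverage and conviction of a rule A ==> C from raw counts.

    n_antecedent: instances matching A; n_consequent: instances matching C;
    n_both: instances matching A and C.
    """
    confidence = n_both / n_antecedent
    lift = confidence / (n_consequent / n_total)
    # Leverage: observed joint support minus the support expected under independence.
    leverage = n_both / n_total - (n_antecedent / n_total) * (n_consequent / n_total)
    # Conviction with "+ 1" in the denominator (assumption, matches the reported values).
    expected_failures = n_antecedent * (n_total - n_consequent) / n_total
    conviction = expected_failures / (n_antecedent - n_both + 1)
    return confidence, lift, leverage, conviction


N_TOTAL = 435                # instances in vote.arff
N_DEMOCRAT = N_TOTAL - 168   # assumption: every instance is democrat or republican

conf, lift, lev, conv = rule_metrics(N_TOTAL, 247, N_DEMOCRAT, 245)
print(f"conf:({conf:.2f}) lift:({lift:.2f}) lev:({lev:.2f}) "
      f"[{int(lev * N_TOTAL)}] conv:({conv:.2f})")
```

A lift above 1 means the antecedent makes the consequent more likely than its base rate, which is why rules with similar confidence can still differ noticeably in lift.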